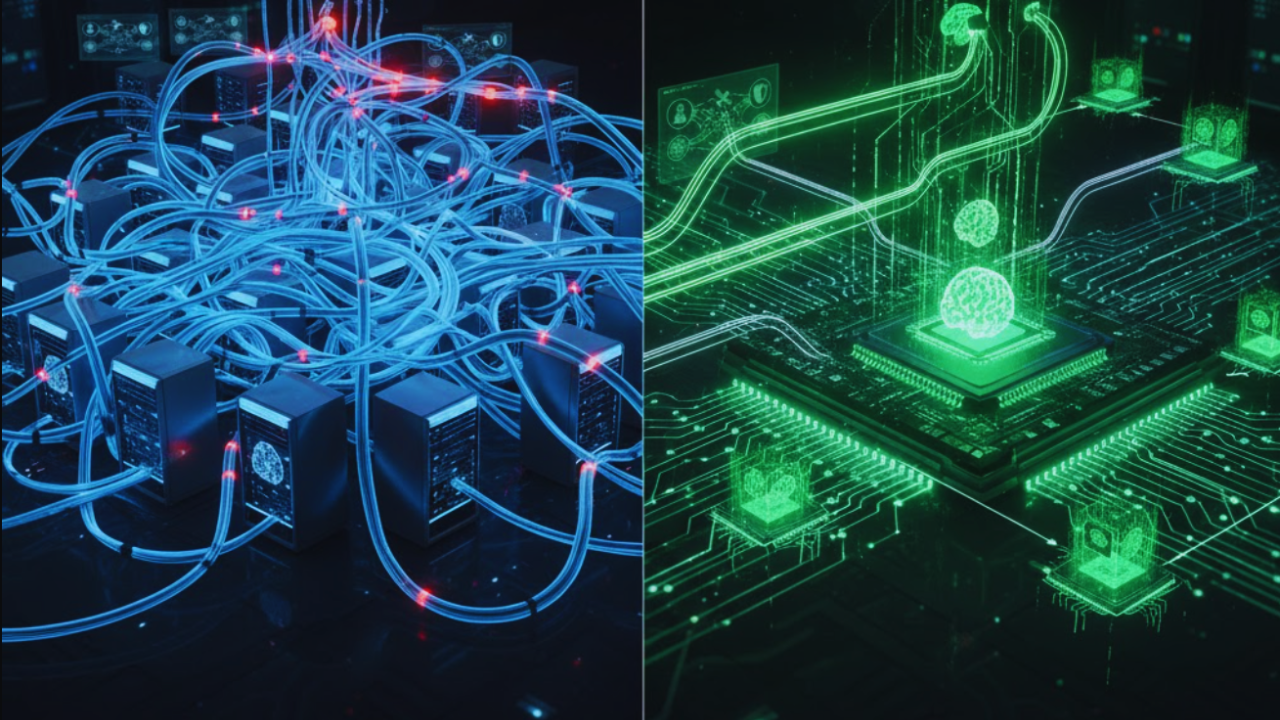

Most AI infrastructure still rests on an assumption that no longer holds. It assumes intelligence lives inside a single, oversized model running on dedicated hardware. That approach made sense when AI systems were simpler. Today, it drags down performance, inflates costs, and adds needless complexity.

As agentic AI becomes standard, this design breaks down fast. Modern systems rely on multiple models working together inside a single request. In that context, one-model-per-node stops looking like best practice and starts looking like technical debt.

A typical agentic application coordinates several large language models, each tuned for a specific task. One model validates inputs. Another retrieves data. Others handle reasoning, tool selection, or synthesis.

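These models often run sequentially or conditionally, and some run several times in a single workflow. When teams isolate each model on separate hardware, or worse, on different clusters, every call crosses a network boundary and latency accumulates with each hop. Costs rise because dedicated nodes sit idle while other steps execute, and operational complexity grows with every additional service to deploy and monitor.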
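To make the latency argument concrete, here is a rough back-of-the-envelope sketch. Every number in it is a made-up illustration (the step names, compute times, and per-call overheads are assumptions, not measurements); the point is only that per-call overhead is multiplied by the number of model calls in a sequential workflow.

```python
from dataclasses import dataclass


@dataclass
class Step:
    name: str
    compute_ms: float  # time spent inside the model (hypothetical figure)
    calls: int = 1     # how many times the workflow invokes this step


def workflow_latency_ms(steps, per_call_overhead_ms):
    """Latency of a sequential workflow: compute plus overhead on every call."""
    return sum(s.calls * (s.compute_ms + per_call_overhead_ms) for s in steps)


# Hypothetical workflow: four steps, one of which runs twice.
steps = [
    Step("validate", 100.0),
    Step("retrieve", 150.0),
    Step("reason", 400.0, calls=2),
    Step("synthesize", 300.0),
]

# Assumed overheads: 40 ms per cross-cluster hop, 1 ms when models share a node.
isolated = workflow_latency_ms(steps, per_call_overhead_ms=40.0)
co_resident = workflow_latency_ms(steps, per_call_overhead_ms=1.0)

print(f"isolated: {isolated} ms, co-resident: {co_resident} ms")
```

With these assumed figures the compute time is identical in both cases, so the whole difference comes from the five hops. Adding faster accelerators would shrink the compute term but leave the overhead term untouched.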

Many teams respond by adding more GPUs or larger accelerators. That response misses the point. Raw compute is not the bottleneck. Architecture is. Agentic systems need infrastructure that treats multiple models as a single, co-resident workload, not as loosely connected services.

Model Bundling as a First-Class Architectural Primitive

Model bundling reverses the traditional deployment model. Instead of dedicating a node to one model, teams deploy multiple models on the same physical node. The system selects among them dynamically at runtime as part of a single workflow.

In SambaStack, teams define this setup declaratively using Kubernetes manifests. These manifests specify which models to deploy together, including customer-owned checkpoints when needed. Each model exposes an OpenAI-compatible inference API, which simplifies integration with agent frameworks like LangGraph, LangChain, CrewAI, or custom orchestration layers.
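Because each bundled model speaks the OpenAI chat-completions format, an agent can pick a model simply by changing the `model` field of the request. The sketch below builds such a request with the Python standard library only. The base URL, model names, role mapping, and API key are placeholders invented for illustration; they are not real SambaStack identifiers, and the request is constructed but never sent.

```python
import json
import urllib.request

# Hypothetical values: placeholders, not real SambaStack endpoints or model IDs.
BASE_URL = "http://bundle.example.internal/v1"
ROLE_TO_MODEL = {
    "validator": "example-small-model",
    "reasoner": "example-large-model",
}


def build_chat_request(role, user_text, api_key="PLACEHOLDER_KEY"):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": ROLE_TO_MODEL[role],  # the only field that differs per agent
        "messages": [{"role": "user", "content": user_text}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


req = build_chat_request("validator", "Is this a well-formed patient ID: 12345?")
print(req.get_method(), req.full_url)
# Sending it would be urllib.request.urlopen(req) against a real endpoint.
```

Since both roles share one base URL in this sketch, switching models is a lookup in a dictionary rather than a change of service address, which is the integration property the paragraph above describes.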

The hardware architecture makes this approach practical. SambaNova’s Reconfigurable Dataflow Unit (RDU) design stages models in DDR memory and swaps them into high-bandwidth memory (HBM) on demand. Model switching happens in microseconds. GPU-based systems rarely achieve that speed without costly reloads or idle hardware.
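The staging idea can be pictured with a toy two-tier store: a large slow tier holds every model, and a small fast tier holds whichever models are currently active. This is only a conceptual sketch, not a description of how the RDU manages memory. The class name, the capacities, the model sizes, and in particular the least-recently-used eviction policy are all assumptions made for illustration.

```python
from collections import OrderedDict


class TwoTierModelStore:
    """Toy model of a large staging tier feeding a small fast tier."""

    def __init__(self, fast_capacity_gb):
        self.fast_capacity_gb = fast_capacity_gb
        self.staged = {}               # model name -> size in GB (slow tier)
        self.resident = OrderedDict()  # fast tier, least recently used first

    def stage(self, name, size_gb):
        """Place a model in the slow tier so it can be activated later."""
        self.staged[name] = size_gb

    def activate(self, name):
        """Make a model resident; return the names evicted to make room."""
        if name in self.resident:
            self.resident.move_to_end(name)  # mark as most recently used
            return []
        size = self.staged[name]
        if size > self.fast_capacity_gb:
            raise ValueError(f"{name} does not fit in the fast tier")
        evicted = []
        while sum(self.resident.values()) + size > self.fast_capacity_gb:
            victim, _ = self.resident.popitem(last=False)  # evict the LRU model
            evicted.append(victim)
        self.resident[name] = size
        return evicted


# Hypothetical sizes, chosen so that the third activation forces an eviction.
store = TwoTierModelStore(fast_capacity_gb=100)
store.stage("validator", 20)
store.stage("reasoner", 70)
store.stage("cypher-writer", 30)

store.activate("validator")                # fits, nothing evicted
store.activate("reasoner")                 # 20 + 70 fits, nothing evicted
evicted = store.activate("cypher-writer")  # needs room, evicts the LRU model
print(evicted, list(store.resident))
```

Evicted models stay in the staging tier, so reactivating one is a copy between tiers rather than a reload from storage. That is the property that makes frequent switching inside one workflow plausible.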

A single SambaNova node consists of eight AI servers in a rack and supports up to one terabyte of HBM. That capacity allows teams to host multiple frontier-scale models at once. As a result, complex agentic workflows can run end to end on one node without distributed coordination overhead.

Why This Changes the Economics of Enterprise AI

For practitioners, model bundling removes a major systems challenge. Instead of routing requests across services and networks, workflows execute locally. This reduces tail latency, stabilizes performance, and simplifies debugging and observability.

For enterprises, the impact runs deeper. SambaStack supports bundled deployments on premises, in private clouds, in air-gapped environments, or as a managed service. Organizations keep operational control in every case. That control matters in regulated industries where compliance and data sovereignty remain non-negotiable.

Consider a healthcare deployment. An agentic workflow processes electronic health records stored in a GraphRAG system. The workflow coordinates three open-source LLMs, including a 120-billion-parameter model. All components run on a single SambaRack.

The system executes four LLM calls, queries a Neo4j graph database, generates custom Cypher queries, and completes the workflow in just over two seconds. No data leaves the environment. No external services participate.

The same pattern applies to finance, defense, cybersecurity, and other sensitive domains. Wherever proprietary data demands multi-step reasoning, bundled models change what is feasible.

From GraphRAG Experiments to Production-Scale Systems

Agentic GraphRAG combines retrieval-augmented generation with graph databases. Instead of reasoning over isolated documents, systems reason over entities and relationships.

In the demonstrated workflow, LangGraph coordinates several specialized agents. One agent validates input. Another selects tools and reasons over context. A third translates natural language into Cypher queries for Neo4j.
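The control flow of such a workflow can be sketched in plain Python. This is a stand-in for the orchestration logic, not LangGraph's API and not the code from the demonstration. The role names, the stub models, the fake database function, and the Cypher query (including its `Patient` label) are all hypothetical.

```python
from typing import Callable, Dict

# A "model" here is any callable from prompt text to response text.
# In a real deployment each would wrap a call to an inference endpoint.
Model = Callable[[str], str]


def run_workflow(question: str, models: Dict[str, Model],
                 run_cypher: Callable[[str], list]) -> dict:
    """Validate, choose a tool, then generate and run Cypher if needed."""
    state = {"question": question, "calls": []}

    def call(role: str, prompt: str) -> str:
        state["calls"].append(role)  # record which model handled the step
        return models[role](prompt)

    if call("validator", question) != "valid":
        state["result"] = "rejected"
        return state  # conditional exit: later models never run

    if call("reasoner", question) == "graph_query":
        cypher = call("cypher_writer", question)
        state["cypher"] = cypher
        state["result"] = run_cypher(cypher)
    else:
        state["result"] = "no tool needed"
    return state


# Stub models with canned answers so the sketch runs without any backend.
stub_models = {
    "validator": lambda q: "valid" if q.strip() else "invalid",
    "reasoner": lambda q: "graph_query",
    "cypher_writer": lambda q: "MATCH (p:Patient) RETURN p.name LIMIT 5",
}


def fake_db(cypher):
    """Pretend graph database that returns one canned row."""
    return [{"p.name": "example"}]


out = run_workflow("Which patients are on record?", stub_models, fake_db)
rejected = run_workflow("   ", stub_models, fake_db)
print(out["calls"], rejected["calls"])
```

Each step depends on the output of the previous one, so the models run strictly in sequence and some runs stop early. That dependency chain is why the distance between models shows up directly in end-to-end latency.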

Each agent relies on a different model. All models run together as a single bundled unit on SambaStack. This setup highlights the platform’s strengths. Teams manage infrastructure independently through SambaRack Manager. Kubernetes-native deployment supports fast iteration and controlled scaling.

Even when one rack handles an entire application, additional racks scale the system horizontally to support higher concurrency. Because the inference APIs match OpenAI’s format, teams reuse existing applications and LLMOps tooling with minimal changes. Tools like LangSmith provide visibility into agent behavior and model usage.

One-model-per-node belongs to an earlier phase of AI. Agentic systems demand a different set of assumptions: that models collaborate, that the distance between them stays small, and that orchestration is core infrastructure.

Model bundling is not a minor optimization. It represents a necessary redesign. As agentic workloads become the norm, architectures built for collaboration will define the next generation of enterprise AI.