Goldman Sachs Research has predicted a 160% surge in data center power demand by 2030. This is just one indication of how AI is poised to reshape future data centers.

What other profound impacts will AI have on cloud and data center infrastructure?

I caught up with Vance Peterson, a Global Solution Architect at Schneider Electric, to get his take on the shifting AI landscape. For the past 20 years, Vance has seen and driven transformative changes in technology, from the rise of virtualization to the current shift towards decentralized, high-performance compute clusters. Now, he helps global clients navigate complex challenges around sustainability, reliability, and resilience in the age of AI. Here’s what he had to say…

AI Cluster Deployment: The Challenges

Vance highlights three primary challenges that clients face when deploying AI clusters:

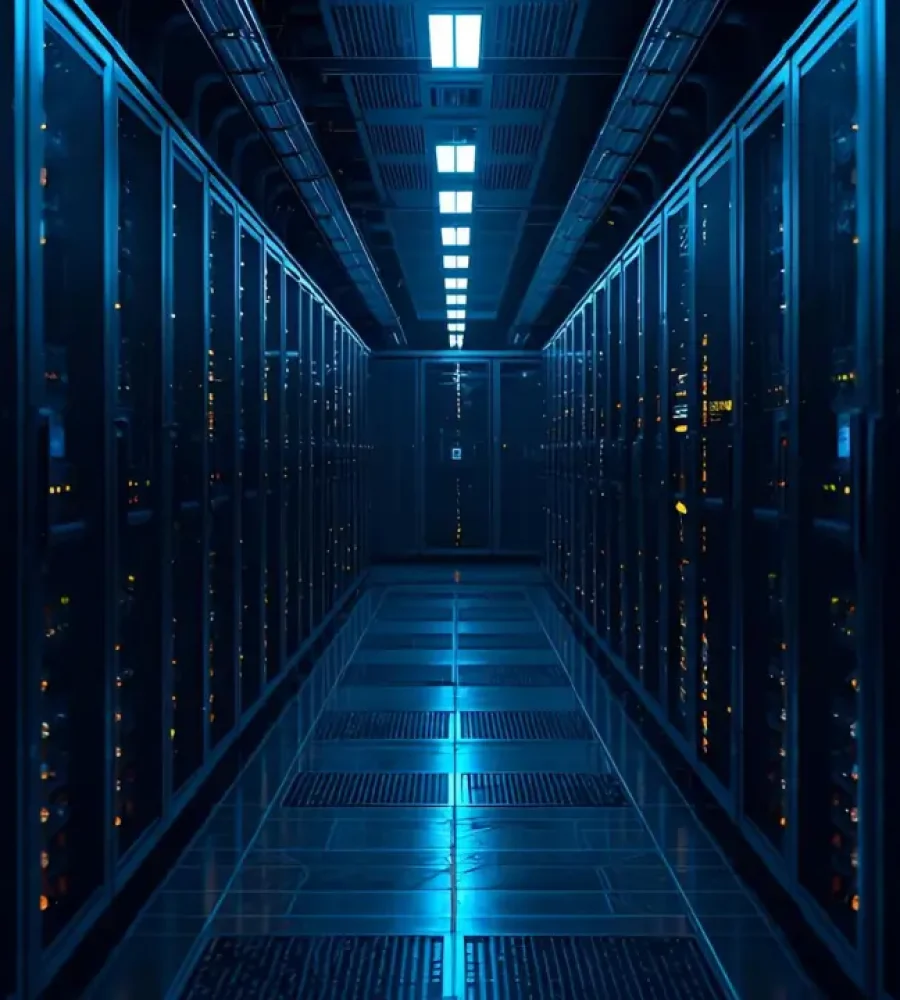

Scalability and Infrastructure Management: AI workloads, particularly those using accelerated parallel compute (considered the backbone of AI), require vast amounts of power and cooling. Ensuring that data centers can handle these increasing demands—by validating hardware, cooling systems, and network capabilities—is critical for future-proofing.

Energy Efficiency and Sustainability: AI clusters are power-hungry, but they also operate more efficiently than previous systems. Schneider Electric helps clients optimize energy usage, improve cooling efficiency, and minimize carbon footprints to meet sustainability goals.

Data Integration and Security: AI systems often require access to vast amounts of sensitive data, creating integration challenges across different systems. Ensuring secure, compliant, and seamless data flow between AI systems, legacy systems, and IoT devices is crucial for maintaining performance and safeguarding sensitive information.

We also touched on the technical demands of integrating AI clusters. Peterson explains that setting up these clusters involves more than merely purchasing and installing GPUs. Schneider’s EcoStruxure platform, for example, offers real-time energy monitoring, predictive maintenance, and optimization tools to ensure AI clusters receive uninterrupted power with minimal downtime. The company’s UPS systems provide robust, scalable backup solutions critical for AI, safeguarding workloads from grid fluctuations and potential outages.

Vance also shared a key challenge Schneider’s customers face: keeping up with the rapid evolution of AI GPUs. “The roadmaps from manufacturers are changing so fast that it’s hard to keep pace,” he says. “Many of the facilities we work with are using GPUs like the Hopper series, including models like the H100 and H200. These setups are already running at rack densities between 20 kW and 40 kW, which means complex infrastructure and power requirements.”

These systems are typically air-cooled, but next-generation GPUs such as Blackwell will push these limits further, with densities reaching 132 kW per rack. For example, a 1,152-GPU cluster could consume 2.3 megawatts, occupy 560 square feet, and cost millions of dollars!
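To put those cluster figures in perspective, here is a minimal Python sketch of the back-of-the-envelope arithmetic. It uses only the numbers quoted above (1,152 GPUs, 2.3 MW, 132 kW per rack). The function names are illustrative, and the rack count assumes, purely for the sake of the estimate, that the whole 2.3 MW is spread across racks running at the full quoted density.

```python
import math

# Figures quoted in the article
CLUSTER_GPUS = 1152
CLUSTER_POWER_KW = 2300.0  # 2.3 MW expressed in kW
RACK_DENSITY_KW = 132.0    # quoted next-generation rack density


def power_per_gpu_kw(total_kw: float, gpus: int) -> float:
    """Average share of the quoted cluster power per GPU."""
    if gpus <= 0:
        raise ValueError("gpus must be positive")
    return total_kw / gpus


def racks_needed(total_kw: float, rack_kw: float) -> int:
    """Minimum rack count, ASSUMING every rack runs at rack_kw."""
    if rack_kw <= 0:
        raise ValueError("rack_kw must be positive")
    return math.ceil(total_kw / rack_kw)


if __name__ == "__main__":
    per_gpu = power_per_gpu_kw(CLUSTER_POWER_KW, CLUSTER_GPUS)
    racks = racks_needed(CLUSTER_POWER_KW, RACK_DENSITY_KW)
    print(f"{per_gpu:.2f} kW per GPU on average")
    print(f"{racks} racks at {RACK_DENSITY_KW:.0f} kW each")
```

On these assumptions the cluster averages roughly 2 kW per GPU and would fill about 18 racks. A real deployment will differ once networking, storage, and redundancy are accounted for.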

Looking ahead, Vance expects the next GPU generation to push densities beyond 260 kW per rack. “AI and high-performance computing environments are going to need even more power,” he says. “These workloads require massive parallel processing, necessitating advanced cooling solutions like liquid cooling. Even in edge environments, rack densities of 30 to 60 kW are becoming standard to support AI processing closer to the data source.”

Is AI living up to the hype?

Vance believes it is. “Absolutely. We’re already seeing huge strides in sectors like autonomous driving, healthcare, financial services, and manufacturing,” he shares. “These industries are seeing tangible benefits from AI investments, with improvements in automation, operational efficiency, and decision-making speed.”

The growing volume of data is a key factor driving this progress. As data production surges, the infrastructure supporting AI needs to evolve. For instance, data centers that were once content with two megawatts of power capacity are now seeing projects that demand gigawatt-level power.

One example he gives is: “a 10,000 square-foot data hall, provisioned with over four megawatts of power and cooling, designed to support AI clusters. Once operational, this facility will house 3,000 square feet of AI infrastructure, highlighting the rising density requirements for AI workloads.”
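As a rough illustration of what that density means, the sketch below divides the provisioned power by floor area. It takes "over four megawatts" as exactly 4 MW and assumes, hypothetically, that all of it serves the 3,000 square feet of AI infrastructure. Neither assumption comes from Vance, and the function name is illustrative.

```python
def power_density_kw_per_sqft(power_kw: float, area_sqft: float) -> float:
    """Provisioned power divided by floor area, in kW per square foot."""
    if area_sqft <= 0:
        raise ValueError("area_sqft must be positive")
    return power_kw / area_sqft


# Figures from the quote; 4 MW is a lower bound ("over four megawatts")
HALL_POWER_KW = 4000.0
HALL_AREA_SQFT = 10_000.0
AI_AREA_SQFT = 3_000.0  # ASSUMPTION: all provisioned power serves this area

if __name__ == "__main__":
    hall = power_density_kw_per_sqft(HALL_POWER_KW, HALL_AREA_SQFT)
    ai = power_density_kw_per_sqft(HALL_POWER_KW, AI_AREA_SQFT)
    print(f"Whole hall:   {hall:.2f} kW per sq ft")
    print(f"AI footprint: {ai:.2f} kW per sq ft")
```

Averaged over the whole hall that is about 0.4 kW per square foot. Concentrated into the AI footprint alone, it rises to roughly 1.33 kW per square foot.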

When it comes to data center design, Schneider Electric is focusing on both building new facilities and retrofitting existing ones to accommodate AI. The company has collaborated with NVIDIA to develop reference designs that support high-density, GPU-based AI workloads. These designs cater to both new builds and the retrofit of existing facilities, with the latter presenting unique challenges. Retrofitting involves optimizing traditional IT infrastructure to meet the higher density and cooling requirements of AI workloads, ensuring efficiency and sustainability.

Cooling is a huge part of the equation. “Air cooling can handle densities up to 40-60 kW per rack,” Vance notes. “But beyond that, we’ll need liquid cooling. For GPUs like NVIDIA’s Hopper architecture, as much as 85% of cooling needs to come from liquid, with air cooling only handling 15%.”
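The split Vance describes is easy to sanity-check. The sketch below is illustrative only: it assumes the heat to be removed equals the rack's electrical load, applies the quoted 85/15 ratio, and uses a 100 kW rack purely as an example input. The function name and default are hypothetical.

```python
def split_cooling_load(rack_kw: float, liquid_fraction: float = 0.85):
    """Split a rack's heat load between liquid and air cooling.

    ASSUMPTION: the heat load equals the rack's electrical power draw.
    The 0.85 default is the liquid share quoted in the article.
    """
    if not 0.0 <= liquid_fraction <= 1.0:
        raise ValueError("liquid_fraction must be between 0 and 1")
    liquid_kw = rack_kw * liquid_fraction
    air_kw = rack_kw - liquid_kw
    return liquid_kw, air_kw


if __name__ == "__main__":
    liquid, air = split_cooling_load(100.0)  # example rack size, not from the article
    print(f"Liquid: {liquid:.1f} kW, air: {air:.1f} kW")
```

Even with liquid doing most of the work, the air side still has to remove 15% of the load, which is one reason hybrid designs matter at high densities.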

Schneider Electric’s solution? “We’re offering advanced liquid cooling systems and hybrid solutions that balance both air and liquid cooling, optimizing performance while ensuring sustainability.”

Schneider Electric is also leveraging digital twin technology through platforms like EcoStruxure, enabling predictive analytics and real-time modeling to optimize the infrastructure. “As AI workloads continue to grow,” Vance explains, “the cost of supporting this infrastructure is actually becoming a smaller portion of the total cost of AI clusters, clusters that can run into millions of dollars per rack.”

Looking ahead, Vance anticipates that the evolution of AI will lead to racks that deliver up to 540 kilowatts. “We’re talking about racks with more computing power than today’s entire data halls,” he says. While that’s an exciting prospect, it also comes with challenges, particularly around power demands and energy consumption. “Schneider Electric is already developing innovative solutions to meet these challenges and make sure AI can continue to thrive sustainably,” Vance assures.