Introduction: The Shift Toward Emerging AI Architectures

Large language models (LLMs) have defined the current generation of artificial intelligence, supporting applications across research, enterprise operations, code generation, and knowledge retrieval. As AI workloads become more complex, organizations are evaluating architectures that extend beyond language-only systems. These architectures focus on multi-modal inputs, distributed decision-making, and specialized models that interact both with each other and with real-time data sources. This article examines emerging AI architectures that build on and move beyond LLM-based foundations.

Why Emerging AI Architectures Matter in the Post-LLM Landscape

Evolving Requirements for Multi-Modal Understanding

Traditional LLMs interpret and generate text, but organizational workflows increasingly require integrated processing across images, audio, signals, graphs, spatial coordinates, and sensor data. Multi-modal architectures address this requirement by aligning diverse data formats within unified model frameworks. Common components include:

- Vision encoders

- Audio transcription and embedding modules

- Sensor fusion models

- Cross-modal attention mechanisms

These systems support use cases across robotics, enterprise automation, industrial monitoring, and scientific research where textual data alone is insufficient.
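As a rough illustration of the cross-modal attention component listed above, the sketch below lets text token vectors attend over image patch vectors using scaled dot-product attention. The toy vectors, their dimensions, and the function names are assumptions made for this example and are not taken from any particular model.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_modal_attention(text_queries, image_keys, image_values):
    """Each text query attends over every image patch (scaled dot-product)."""
    dim = len(image_keys[0])
    width = len(image_values[0])
    outputs = []
    for query in text_queries:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in image_keys
        ]
        weights = softmax(scores)
        # Weighted sum of the image value vectors for this text token.
        fused = [
            sum(w * value[i] for w, value in zip(weights, image_values))
            for i in range(width)
        ]
        outputs.append(fused)
    return outputs

# Toy inputs: two text tokens, three image patches, two dimensions each.
text_queries = [[1.0, 0.0], [0.0, 1.0]]
image_keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
image_values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]

fused = cross_modal_attention(text_queries, image_keys, image_values)
```

Each row of `fused` is a text token representation enriched with the image patches it attended to most strongly. Production systems would use learned projection matrices and multiple attention heads rather than raw vectors.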

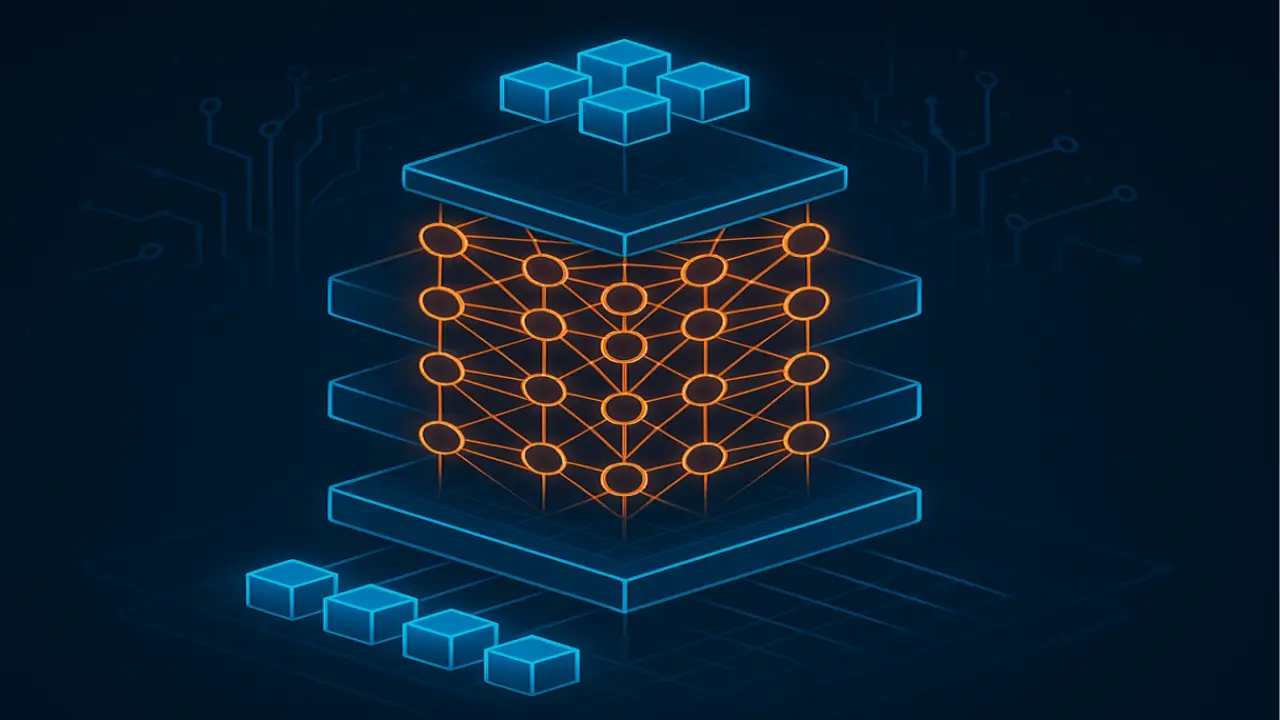

From Single-Model Systems to Multi-Agent Collaboration

A central characteristic of emerging AI architectures is the shift from single monolithic models to coordinated groups of specialized agents. Multi-agent systems distribute tasks among multiple AI components. Examples include:

- Task-decomposition agents

- Retrieval agents for structured information

- Planning agents coordinating sequences of actions

- Verification agents validating outputs

This structure improves modularity and can reduce compute cost, because each task is routed to a component sized for it rather than scaling one large model to handle every task.
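The four agent roles listed above can be sketched as a simple sequential pipeline. This is a minimal sketch under stated assumptions: the `Task` structure, the in-memory `KNOWLEDGE` store with placeholder values, and the rule-based agents are all hypothetical stand-ins for what would normally be model-backed components.

```python
from dataclasses import dataclass, field

@dataclass
class Task:
    question: str
    subtasks: list = field(default_factory=list)
    evidence: dict = field(default_factory=dict)
    plan: list = field(default_factory=list)
    verified: bool = False

# Hypothetical structured store with placeholder values.
KNOWLEDGE = {
    "cooling": "placeholder cooling record",
    "interconnect": "placeholder interconnect record",
}

def decompose(task):
    """Task-decomposition agent: split the question on ' and '."""
    task.subtasks = [part.strip() for part in task.question.split(" and ")]
    return task

def retrieve(task):
    """Retrieval agent: look up each subtask in the structured store."""
    task.evidence = {s: KNOWLEDGE.get(s) for s in task.subtasks}
    return task

def plan_actions(task):
    """Planning agent: one action per subtask that has evidence."""
    task.plan = [
        f"report {s}: {e}" for s, e in task.evidence.items() if e is not None
    ]
    return task

def verify(task):
    """Verification agent: every subtask must have produced a plan step."""
    task.verified = len(task.plan) == len(task.subtasks)
    return task

def run_pipeline(question):
    task = Task(question)
    for agent in (decompose, retrieve, plan_actions, verify):
        task = agent(task)
    return task

answered = run_pipeline("cooling and interconnect")
unanswered = run_pipeline("cooling and pricing")
```

In this sketch `answered.verified` is true, while `unanswered.verified` is false because the store has no entry for "pricing". The point is that each agent can be replaced or scaled separately as long as it accepts and returns the same task structure.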

Architectural Trends Shaping the Next Phase of AI

Modular AI Systems Replacing Monolithic Workflows

Organizations are adopting modular architectures to support scaled AI operations. Instead of relying on a single generalized model, workloads are divided into smaller, purpose-built systems that interact via defined interfaces. Benefits include:

- Improved resource utilization

- Faster development cycles

- Lower retraining requirements

- Modular upgrades without full-model replacement

This approach aligns well with distributed compute infrastructures and modern data center deployment patterns.
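One way to picture "defined interfaces" and "modular upgrades without full-model replacement" is a shared component contract. The sketch below is illustrative only: the `Component` protocol, its `run` method, and the toy summarizer and tagger classes are assumptions introduced for this example.

```python
from typing import Protocol, Sequence

class Component(Protocol):
    """Hypothetical contract that every module in the workflow implements."""
    def run(self, payload: dict) -> dict: ...

class Summarizer:
    def run(self, payload: dict) -> dict:
        # Naive summary: the first 20 characters of the text.
        return {**payload, "summary": payload["text"][:20]}

class SummarizerV2:
    """Drop-in upgrade: same interface, different behavior."""
    def run(self, payload: dict) -> dict:
        first_sentence = payload["text"].split(".")[0]
        return {**payload, "summary": first_sentence}

class Tagger:
    def run(self, payload: dict) -> dict:
        text = payload["text"].lower()
        tags = [word for word in ("gpu", "cooling") if word in text]
        return {**payload, "tags": tags}

def run_workflow(components: Sequence[Component], payload: dict) -> dict:
    """Pass the payload through each component in order."""
    for component in components:
        payload = component.run(payload)
    return payload

doc = {"text": "GPU pods need cooling. Other details follow."}
before = run_workflow([Summarizer(), Tagger()], doc)
after = run_workflow([SummarizerV2(), Tagger()], doc)
```

Swapping `Summarizer` for `SummarizerV2` changes the summary without touching `Tagger` or the workflow runner, which is the property the modular approach relies on.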

Retrieval-Augmented Architectures for Real-Time Knowledge Integration

Retrieval-augmented generation (RAG) remains central to information-rich AI workloads. These architectures condition model outputs on structured or semi-structured data retrieved from enterprise knowledge bases, vector databases, or operational environments. Because responses are grounded in retrieved records that can be checked against their source, organizations can improve accuracy and align outputs more closely with internal information governance frameworks.

Retrieval modules often run on separate compute clusters optimized for search performance, enabling scaling independent of model inference clusters.
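The retrieval step can be sketched in a few lines. The example below is a simplified assumption-laden illustration: the `DOCUMENTS` store and its contents are hypothetical, and a bag-of-words vector with cosine similarity stands in for the learned embeddings and vector database a real deployment would use.

```python
import math
from collections import Counter

# Hypothetical knowledge base keyed by document ID.
DOCUMENTS = {
    "policy-1": "Backups run nightly and are retained for thirty days.",
    "policy-2": "Access requests require manager approval.",
    "policy-3": "Liquid cooling loops are inspected every quarter.",
}

def embed(text):
    """Toy bag-of-words vector; a real system would use a learned encoder."""
    cleaned = text.lower().replace(".", "").replace("?", "")
    return Counter(cleaned.split())

def cosine(a, b):
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, k=1):
    """Return the IDs of the k documents most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(
        DOCUMENTS,
        key=lambda doc_id: cosine(query_vec, embed(DOCUMENTS[doc_id])),
        reverse=True,
    )
    return ranked[:k]

def build_prompt(query, k=1):
    """Attach retrieved passages, with their IDs, ahead of the question."""
    doc_ids = retrieve(query, k)
    context = "\n".join(f"[{doc_id}] {DOCUMENTS[doc_id]}" for doc_id in doc_ids)
    return f"Context:\n{context}\n\nQuestion: {query}", doc_ids

prompt, sources = build_prompt("How long are backups retained?")
```

Returning the document IDs alongside the prompt keeps the provenance of each answer available for later auditing. Because `retrieve` has no dependency on the generating model, it can be deployed and scaled separately.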

Agentic Models and Workflow-Oriented AI

Agentic architectures enable AI systems to perform actions, reason over multi-step workflows, and dynamically allocate tasks to subsidiary systems. For example:

- A planning agent defines tasks

- A computation agent performs calculations

- A monitoring agent evaluates output consistency

Such systems rely on defined communication protocols and internal orchestration layers. This structure reduces reliance on a single large model and can strengthen operational reliability, because each agent's output can be checked independently before it is passed on.
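A minimal sketch of such a protocol and orchestration layer follows, using the three agents from the example above. The `Message` fields, agent names, and queue-based dispatcher are assumptions chosen for illustration, not a standard.

```python
import math
from collections import deque
from dataclasses import dataclass

@dataclass(frozen=True)
class Message:
    """Hypothetical message envelope shared by all agents."""
    sender: str
    recipient: str
    kind: str
    body: dict

def planning_agent(msg):
    """Define the tasks: request a sum and a mean of the input numbers."""
    numbers = msg.body["numbers"]
    return [
        Message("planner", "computer", "compute", {"op": op, "numbers": numbers})
        for op in ("sum", "mean")
    ]

def computation_agent(msg):
    """Perform the requested calculation and forward the result."""
    numbers = msg.body["numbers"]
    total = sum(numbers)
    value = total if msg.body["op"] == "sum" else total / len(numbers)
    return [Message("computer", "monitor", "result",
                    {"op": msg.body["op"], "value": value})]

class MonitoringAgent:
    """Collect results and check that they agree with each other."""
    def __init__(self):
        self.results = {}

    def __call__(self, msg):
        self.results[msg.body["op"]] = msg.body["value"]
        return []

    def consistent(self, count):
        return math.isclose(self.results["mean"] * count, self.results["sum"])

def orchestrate(numbers):
    """Route messages between agents until the queue is empty."""
    monitor = MonitoringAgent()
    agents = {"planner": planning_agent, "computer": computation_agent,
              "monitor": monitor}
    queue = deque([Message("user", "planner", "request", {"numbers": numbers})])
    while queue:
        msg = queue.popleft()
        queue.extend(agents[msg.recipient](msg))
    return monitor.results, monitor.consistent(len(numbers))

results, ok = orchestrate([2, 4, 6])
```

The orchestrator only knows recipient names and the message envelope, so agents can be added or replaced without changing the dispatch loop.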

The Rise of Multi-Modal Foundation Models

Expanding Input Capabilities Across Data Types

Multi-modal foundation models support text, images, audio, and sometimes physical measurements within unified model structures. They use shared embedding spaces and cross-modal attention to align data types. Typical components include:

- Multi-modal encoders

- Shared latent representations

- Decoders specific to each output domain

Multi-modal foundation models are particularly relevant for fields such as biomedical research, robotics, materials science, and operational analytics.

Implications for Data Center Compute and Storage

Support for multi-modal training and inference requires substantial GPU clusters, high-bandwidth fabrics, and expanded storage infrastructure. Organizations increasingly evaluate:

- GPU cluster utilization

- High-bandwidth memory (HBM) requirements

- Interconnect throughput

- Co-location of training and inference nodes

These trends are reshaping data center design and accelerating the adoption of liquid cooling and modular AI pod structures.

Multi-Agent Systems: A Key Direction Among Emerging AI Architectures

Distributed Autonomy and Task Allocation

Multi-agent systems reflect a distributed approach in which multiple AI models collaborate to complete complex tasks. Each agent may specialize in retrieval, planning, analysis, or error detection. This leads to:

- Reduced reliance on extremely large models

- Enhanced flexibility in pipeline design

- Alignment with distributed compute clusters

These architectures support scalable, multi-stage workflows within enterprise environments.

Verification and Safety Layers Within Agentic Designs

Agentic systems often introduce verification modules that validate outputs from other agents. These modules check consistency, evaluate data source relevance, and ensure alignment with structured rules. This type of architecture strengthens reliability and reduces the risk of relying on a single model's unchecked output.
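The three kinds of checks described above might look like the following. This is a sketch under assumptions: the output fields (`sources`, `line_items`, `total`, `currency`), the approved source names, and the rules are all hypothetical examples.

```python
def verify_output(output, approved_sources, rules):
    """Return a list of issues; an empty list means the output passed."""
    issues = []

    # Source relevance: every cited source must come from the approved set.
    cited = output.get("sources", [])
    if not cited:
        issues.append("no sources cited")
    for source in cited:
        if source not in approved_sources:
            issues.append(f"unapproved source: {source}")

    # Consistency: the reported total must match its line items.
    if sum(output.get("line_items", [])) != output.get("total"):
        issues.append("total does not match line items")

    # Structured rules: each rule is a (description, predicate) pair.
    for description, predicate in rules:
        if not predicate(output):
            issues.append(f"rule failed: {description}")

    return issues

# Hypothetical rules and approved sources for illustration.
RULES = [
    ("total is non-negative", lambda o: o.get("total", 0) >= 0),
    ("currency is set", lambda o: bool(o.get("currency"))),
]
APPROVED = {"ledger-db", "invoice-store"}

good = {"sources": ["ledger-db"], "line_items": [40, 60],
        "total": 100, "currency": "USD"}
bad = {"sources": ["web-scrape"], "line_items": [40, 60],
       "total": 90, "currency": ""}

good_issues = verify_output(good, APPROVED, RULES)
bad_issues = verify_output(bad, APPROVED, RULES)
```

Returning a list of named issues, rather than a single pass or fail flag, lets downstream agents or human reviewers see exactly which check rejected an output.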

Infrastructure Requirements for the Next Wave of Emerging AI Architectures

Compute Implications for Multi-Modal and Multi-Agent AI

Multi-modal architectures and multi-agent systems depend on:

- High-performance GPU clusters

- Distributed compute nodes

- Fast interconnect fabrics to reduce latency

- Storage systems optimized for high-throughput workloads

Workloads may require both training clusters and inference clusters operating concurrently. Organizations also evaluate energy consumption, thermal design, and workload orchestration across heterogeneous compute resources.

Data Center Designs Supporting Future AI Architectures

To support next-generation systems, data centers integrate:

- High-density rack deployments

- Liquid cooling systems

- Modular data hall designs

- Direct-to-chip cooling for sustained workload intensity

These facility characteristics reduce cooling overhead and improve infrastructure efficiency when deploying emerging AI architectures.

Data Governance Considerations for Post-LLM Architectures

Managing Multi-Modal Data Pipelines

Multi-modal systems require well-structured pipelines for image, video, text, and sensor data. Governance models typically include:

- Data lineage

- Access control structures

- Anonymization frameworks

- Quality checks for multi-modal datasets

Maintaining consistency across input modalities is essential for reliable model behavior.
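As one example of a quality check for multi-modal datasets, the sketch below flags samples that are missing a modality or whose modalities were captured too far apart in time. The modality names, the `timestamp` field, and the 0.5 second tolerance are assumptions for illustration.

```python
# Assumed modality names; adjust to match the actual pipeline.
REQUIRED_MODALITIES = ("image", "text", "sensor")

def check_sample(sample, max_skew_seconds=0.5):
    """Return a list of quality issues for one multi-modal sample."""
    issues = []

    missing = [m for m in REQUIRED_MODALITIES if m not in sample]
    if missing:
        issues.append("missing modalities: " + ", ".join(missing))

    # Compare capture times across whichever modalities are present.
    timestamps = [
        sample[m]["timestamp"] for m in REQUIRED_MODALITIES if m in sample
    ]
    if timestamps and max(timestamps) - min(timestamps) > max_skew_seconds:
        issues.append("timestamps differ by more than the allowed skew")

    return issues

aligned = {
    "image": {"timestamp": 100.0},
    "text": {"timestamp": 100.1},
    "sensor": {"timestamp": 100.2},
}
skewed = {
    "image": {"timestamp": 100.0},
    "text": {"timestamp": 103.0},
}

aligned_issues = check_sample(aligned)
skewed_issues = check_sample(skewed)
```

Here `aligned` passes, while `skewed` is flagged both for the missing sensor reading and for the three-second gap between image and text.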

Transparency and Traceability in Multi-Agent Workflows

Because multi-agent systems break tasks into interconnected components, organizations benefit from transparent reporting on:

- Agent handoff structures

- Task decision pathways

- Data retrieval provenance

Clear traceability supports compliance requirements and reduces ambiguity in multi-step workflows.
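A trace log covering the three reporting areas above could be structured as follows. The `Handoff` fields, agent names, and source identifiers are hypothetical and only illustrate the idea.

```python
import json
from dataclasses import dataclass, asdict

@dataclass
class Handoff:
    """One agent-to-agent handoff, with the decision and its data sources."""
    step: int
    from_agent: str
    to_agent: str
    decision: str
    sources: list

class TraceLog:
    def __init__(self):
        self.records = []

    def record(self, from_agent, to_agent, decision, sources=()):
        step = len(self.records) + 1
        self.records.append(
            Handoff(step, from_agent, to_agent, decision, list(sources))
        )

    def pathway(self):
        """Ordered list of agents the task passed through."""
        if not self.records:
            return []
        return [self.records[0].from_agent] + [r.to_agent for r in self.records]

    def provenance(self):
        """Every data source referenced anywhere in the workflow."""
        return sorted({s for r in self.records for s in r.sources})

    def to_json(self):
        return json.dumps([asdict(r) for r in self.records], indent=2)

trace = TraceLog()
trace.record("planner", "retriever", "needs contract terms")
trace.record("retriever", "writer", "found 2 matching records",
             sources=["crm:1042", "contracts:77"])
trace.record("writer", "verifier", "draft ready for checks")
```

Calling `trace.pathway()` reconstructs the handoff chain, `trace.provenance()` lists the retrieved sources, and `trace.to_json()` produces a record that can be archived for compliance review.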

Operationalizing Emerging AI Architectures in Enterprise Environments

Training, Fine-Tuning, and Inference Coordination

Enterprises typically coordinate multiple processes, including:

- Pre-training on large datasets

- Fine-tuning for domain-specific tasks

- Distillation into lightweight models

- Real-time inference across distributed agents

Each activity may occur on separate compute clusters designed for specific workload types. Coordinated scheduling tools and workload orchestration layers reduce idle capacity and contention for shared resources.
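The routing of workload types to dedicated clusters can be sketched with a simple first-fit scheduler. The cluster names, GPU counts, and job list below are invented for illustration; real schedulers also account for priorities, preemption, and topology.

```python
# Hypothetical mapping from workload type to the cluster that serves it.
JOB_TO_CLUSTER = {
    "pretrain": "training",
    "finetune": "training",
    "distill": "training",
    "inference": "inference",
}

def schedule(jobs, capacity):
    """Place each job on its cluster if enough GPUs are free, else defer it."""
    free = dict(capacity)
    placed, deferred = [], []
    for name, kind, gpus in jobs:
        cluster = JOB_TO_CLUSTER[kind]
        if gpus <= free[cluster]:
            free[cluster] -= gpus
            placed.append((name, cluster))
        else:
            deferred.append(name)
    return placed, deferred, free

# Assumed free GPU counts per cluster.
capacity = {"training": 16, "inference": 8}
jobs = [
    ("pretrain-a", "pretrain", 12),
    ("finetune-b", "finetune", 4),
    ("distill-c", "distill", 2),
    ("serve-d", "inference", 6),
]

placed, deferred, free = schedule(jobs, capacity)
```

With these numbers the training cluster fills up after the first two jobs, so `distill-c` is deferred while `serve-d` still runs because inference capacity is tracked separately.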

Monitoring and Optimization Across Distributed Agents

Monitoring for multi-agent workflows includes:

- Real-time performance analytics

- Agent-to-agent communication logs

- Utilization metrics for GPU clusters

- Error propagation tracking

These processes help organizations refine architectures over time.
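Error propagation tracking, for instance, can be approximated by walking the agent handoff graph to find every downstream agent that may have consumed a faulty output. The graph and agent names below are hypothetical.

```python
from collections import deque

# Hypothetical handoff graph: each agent maps to the agents it feeds.
HANDOFFS = {
    "planner": ["retriever", "calculator"],
    "retriever": ["writer"],
    "calculator": ["writer"],
    "writer": ["verifier"],
    "verifier": [],
}

def affected_by(failed_agent, handoffs):
    """Breadth-first search for all agents downstream of a failure."""
    seen = set()
    queue = deque([failed_agent])
    while queue:
        agent = queue.popleft()
        for downstream in handoffs.get(agent, []):
            if downstream not in seen:
                seen.add(downstream)
                queue.append(downstream)
    return sorted(seen)

impacted = affected_by("retriever", HANDOFFS)
```

For a failure in `retriever`, the search returns the `verifier` and `writer` agents, which tells operators which outputs to re-run or re-validate.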

Conclusion: A Clear Direction for Emerging AI Architectures

Organizations developing advanced AI systems are increasingly evaluating architectures that extend beyond LLMs. Multi-modal models, retrieval-augmented systems, and multi-agent frameworks represent core components of the next generation of AI architectures. These approaches emphasize specialization, modularity, coordination, and the integration of diverse data types. As AI workloads evolve, infrastructure, data governance frameworks, and operational strategies will adapt to support these architectures without relying solely on large monolithic language-based systems.