Shaping the New World of Liquid Cooling

The modern AI era no longer revolves around raw compute alone; infrastructure efficiency now defines the real competitive edge. Across hyperscale data centers, edge deployments, and enterprise environments, thermal constraints increasingly dictate how far AI systems can scale.

As model complexity grows and inference workloads multiply, traditional air-cooling architectures struggle to sustain performance without escalating cost and operational friction.

The industry has begun to reassess not only how compute is deployed but also how it is cooled and sustained over time.

Liquid cooling has moved from experimental engineering to strategic infrastructure, signaling a deeper shift in how organizations design AI capacity. This shift reflects a broader realization that thermal architecture now shapes the future of compute as much as silicon innovation itself.

Why Cooling Has Become the Strategic Bottleneck in AI Infrastructure

The rise of AI inference workloads has fundamentally altered the thermal profile of modern compute environments. Unlike traditional enterprise workloads, AI inference demands sustained, high-density processing that pushes hardware far beyond historical thermal thresholds. Air-based cooling systems often fail to deliver stable performance under these conditions because they rely on airflow dynamics that scale poorly with density. The energy overhead associated with air cooling increases disproportionately as compute density rises, which erodes efficiency gains from advanced hardware.

Cooling has transformed from a background operational concern into a core architectural constraint that influences infrastructure strategy. As a result, organizations now treat thermal design as a first-order decision rather than a secondary optimization. Traditional facility cooling models evolved in an era when compute density grew incrementally rather than exponentially. These models depend on centralized HVAC systems, raised floors, and airflow optimization, which work effectively at moderate power densities but falter at extreme loads.

As GPU clusters become denser and more distributed, the distance between compute and cooling infrastructure introduces inefficiencies that compound over time. Scaling traditional cooling systems often requires extensive facility upgrades that slow deployment timelines and inflate capital expenditure.

In contrast, AI-driven workloads demand rapid scaling, flexible deployment, and predictable thermal performance across diverse environments. The mismatch between legacy cooling architectures and modern AI requirements continues to widen with every new generation of hardware.

Liquid Cooling as an Architectural Shift Rather Than a Tactical Upgrade

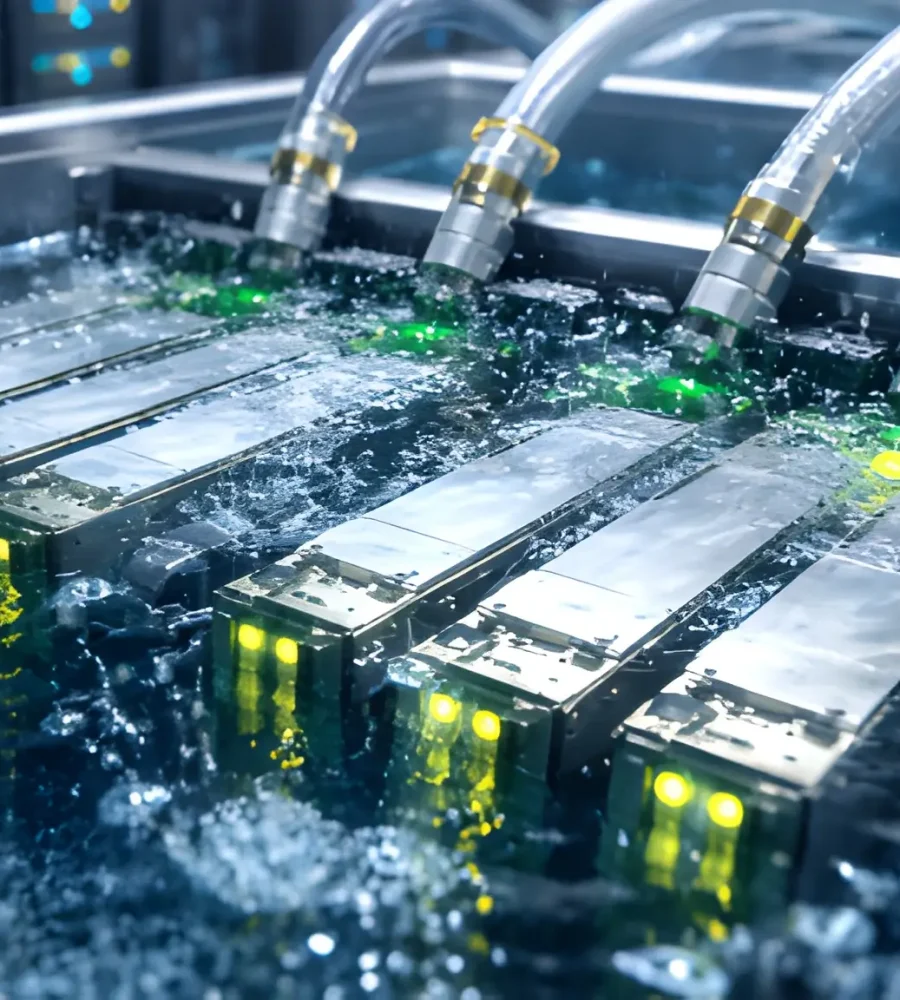

Liquid cooling does not simply replace air with fluid; it fundamentally redefines how heat is managed at the system level. By transferring heat directly from components to a liquid medium, these systems bypass the inefficiencies inherent in air-based thermal transfer.

This approach enables higher compute density without proportional increases in energy consumption or spatial footprint.

Additionally, liquid cooling allows modular deployment models that align more closely with distributed AI architectures and edge computing strategies.

As organizations seek to deploy inference closer to users and data sources, localized cooling solutions become essential rather than optional.

Thus, liquid cooling emerges not as an incremental improvement but as a structural transformation in compute infrastructure design.
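
The heat-transfer argument in this section can be made concrete with the steady-state relation Q = ρ · V̇ · c_p · ΔT, which gives the coolant flow needed to carry away a given heat load. The sketch below is illustrative only: the fluid properties are approximate room-temperature textbook values, and the 10 kW load and 10 K temperature rise are assumed numbers, not figures from any system described here.

```python
# Approximate fluid properties near room temperature (textbook values).
WATER = {"density_kg_m3": 997.0, "cp_j_per_kg_k": 4186.0}
AIR = {"density_kg_m3": 1.2, "cp_j_per_kg_k": 1005.0}


def required_flow_m3_per_s(heat_load_w, delta_t_k, fluid):
    """Volumetric flow needed to remove heat_load_w with a coolant
    temperature rise of delta_t_k, from Q = rho * V_dot * c_p * delta_T."""
    if heat_load_w < 0 or delta_t_k <= 0:
        raise ValueError("heat load must be >= 0 and temperature rise > 0")
    volumetric_heat_capacity = fluid["density_kg_m3"] * fluid["cp_j_per_kg_k"]
    return heat_load_w / (volumetric_heat_capacity * delta_t_k)


# Hypothetical enclosure: 10 kW of heat, 10 K allowed coolant temperature rise.
HEAT_LOAD_W = 10_000.0
DELTA_T_K = 10.0

water_flow = required_flow_m3_per_s(HEAT_LOAD_W, DELTA_T_K, WATER)
air_flow = required_flow_m3_per_s(HEAT_LOAD_W, DELTA_T_K, AIR)

print(f"water: {water_flow * 60_000:.1f} L/min")  # m^3/s -> L/min
print(f"air:   {air_flow:.2f} m^3/s")
print(f"air/water volume-flow ratio: {air_flow / water_flow:.0f}")
```

Under these assumptions, air has to move a volume several thousand times larger than water to carry the same heat, which is why air-based designs scale poorly as density rises.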

The Strategic Question Behind High-Density AI Deployment

Every organization building AI infrastructure eventually confronts a fundamental question that transcends hardware specifications and performance benchmarks: how can high-density AI inference capacity be deployed without inheriting the massive overhead associated with traditional facility cooling systems?

This question reflects a deeper tension between computational ambition and physical constraints that define the modern AI landscape. When compute density increases faster than cooling capability, organizations face diminishing returns on infrastructure investment.

Consequently, the search for closed-loop, self-contained cooling ecosystems has become a priority for architects designing next-generation AI environments. In this context, liquid cooling represents not merely a technical solution but a strategic response to structural limitations in legacy infrastructure.

The Emergence of Closed-Loop Cooling Ecosystems

Closed-loop cooling ecosystems represent a departure from centralized facility-based thermal management toward localized, self-contained solutions. These systems integrate cooling, compute, and power management into a unified architecture that operates independently of traditional data center infrastructure.

By isolating thermal processes within dedicated units, closed-loop systems reduce reliance on external cooling resources and minimize environmental variability. This architecture enables consistent performance across diverse deployment environments, including edge locations with limited facility support. As AI workloads increasingly move beyond centralized hyperscale data centers, closed-loop cooling ecosystems provide a scalable foundation for distributed compute.

Therefore, the rise of closed-loop liquid cooling reflects a broader shift toward modular and autonomous infrastructure design in the AI era.

Tongai and the Emergence of Modular Liquid-Cooled Infrastructure

Tongai recently introduced the Tongai Liquid-Cooled QuadBox as a response to the growing demand for high-density AI inference without traditional cooling overhead. The system reflects a design philosophy centered on modularity, efficiency, and environmental resilience rather than dependence on large-scale facility infrastructure.

By housing multiple servers within a self-contained liquid-cooled enclosure, the QuadBox reimagines how compute units can operate outside conventional data center environments.

Its architecture aligns with the broader industry trend toward decentralized AI deployment, where inference capacity must exist closer to data sources and users.

The QuadBox demonstrates how liquid cooling can integrate seamlessly with modular compute design, creating infrastructure that scales without linear increases in operational complexity.

Although Tongai represents only one example in this evolving landscape, its approach illustrates the strategic direction of next-generation AI infrastructure.

Technical Characteristics of Modular Liquid-Cooled Systems

Modular liquid-cooled systems typically support multiple high-performance servers within compact enclosures optimized for thermal efficiency. These systems often rely on closed-loop water circuits that circulate coolant directly around heat-generating components, enabling precise thermal control. Negative-pressure operation, in which the loop runs below ambient pressure so that a breach tends to draw air in rather than push coolant out, enhances safety by reducing the risk of leaks and maintaining system integrity under variable operating conditions. Advanced sealing technologies further strengthen reliability, ensuring that liquid cooling systems can operate in environments previously unsuitable for high-density compute.

Additionally, ruggedized designs enable deployment beyond pristine data center settings, expanding the geographic and operational scope of AI infrastructure. These technical characteristics position modular liquid-cooled systems as a foundational element in the future architecture of AI deployment.

Energy efficiency has become one of the most compelling arguments for liquid cooling in high-density AI environments. As compute density increases, the proportion of energy consumed by cooling systems rises sharply in traditional air-cooled architectures.

Liquid cooling disrupts this pattern by enabling more efficient heat transfer, which reduces the overall energy footprint of AI infrastructure.

This efficiency gain directly affects operational costs and carbon emissions over the long term. Improved energy efficiency also enhances system stability, which is critical for inference workloads that require consistent performance under continuous load. Consequently, liquid cooling increasingly serves as both an economic and environmental strategy rather than a purely technical optimization.
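
One common way to quantify the cooling share of energy use is power usage effectiveness (PUE), defined as total facility power divided by IT power. The sketch below shows the arithmetic only. Every load figure in it is a made-up illustration, not a measurement of any air-cooled or liquid-cooled deployment, and the function and variable names are hypothetical.

```python
HOURS_PER_YEAR = 8760


def pue(it_power_kw, cooling_power_kw, other_overhead_kw=0.0):
    """Power usage effectiveness: total facility power / IT power."""
    if it_power_kw <= 0:
        raise ValueError("IT power must be positive")
    total_kw = it_power_kw + cooling_power_kw + other_overhead_kw
    return total_kw / it_power_kw


# Hypothetical 1 MW IT load with two assumed cooling overheads.
IT_KW = 1000.0
air_cooled_pue = pue(IT_KW, cooling_power_kw=500.0, other_overhead_kw=100.0)
liquid_cooled_pue = pue(IT_KW, cooling_power_kw=150.0, other_overhead_kw=100.0)

# Facility energy avoided per year for the same IT load.
annual_savings_kwh = (air_cooled_pue - liquid_cooled_pue) * IT_KW * HOURS_PER_YEAR

print(f"assumed air-cooled PUE:    {air_cooled_pue:.2f}")
print(f"assumed liquid-cooled PUE: {liquid_cooled_pue:.2f}")
print(f"annual facility energy avoided: {annual_savings_kwh:,.0f} kWh")
```

Because the saving scales with both the PUE gap and the IT load, even a modest reduction in cooling overhead compounds over continuous inference workloads.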

Liquid Cooling and the Future of Edge AI Deployment

Edge AI deployment introduces unique challenges that traditional data center architectures cannot adequately address. Unlike centralized environments, edge locations often lack robust cooling infrastructure, stable power supply, and controlled environmental conditions.

Liquid-cooled modular systems offer a practical solution by integrating thermal management directly into compute units, reducing dependence on external facilities. This integration enables organizations to deploy high-density AI inference capabilities in geographically distributed and operationally constrained environments.

Moreover, edge AI applications demand low latency and real-time processing, which further amplifies the need for localized, high-performance compute solutions. Thus, liquid cooling plays a critical role in enabling the expansion of AI capabilities beyond centralized data centers into the broader digital ecosystem.

Infrastructure Design Shifts Driven by Liquid Cooling Adoption

Infrastructure design has begun to shift as organizations recognize that cooling architecture directly influences compute scalability and deployment speed. Rather than expanding centralized facilities, many enterprises now prioritize modular systems that integrate cooling at the unit level.

This shift enables faster deployment cycles because teams can bypass lengthy facility upgrades and regulatory approvals associated with traditional data centers. Additionally, modular liquid-cooled systems allow organizations to experiment with distributed compute strategies without committing to large-scale infrastructure investments. As AI workloads diversify across training, inference, and real-time analytics, infrastructure flexibility becomes a decisive factor in long-term competitiveness. Liquid cooling adoption increasingly reflects strategic infrastructure planning rather than isolated engineering decisions.

The economic implications of liquid-cooled AI infrastructure extend beyond energy savings and operational efficiency. By reducing reliance on centralized cooling systems, organizations can lower capital expenditure associated with facility expansion and mechanical infrastructure upgrades.

Modular liquid-cooled systems also improve asset utilization because compute resources can operate closer to optimal performance thresholds. This optimization reduces the hidden costs of underutilized hardware that often arise in air-cooled environments constrained by thermal limits. Distributed liquid-cooled deployments enable organizations to align infrastructure investment with incremental demand rather than speculative capacity planning. As a result, liquid cooling reshapes not only technical architecture but also financial models underlying AI infrastructure investment.

The Role of Liquid Cooling in AI Infrastructure Resilience

Resilience has become a critical requirement for AI infrastructure as organizations depend increasingly on continuous inference and real-time decision systems. Traditional cooling architectures often introduce single points of failure because centralized systems affect multiple compute clusters simultaneously.

In contrast, closed-loop liquid-cooled systems distribute thermal management across independent units, reducing systemic risk. This distributed resilience aligns with broader trends in cloud-native architecture and microservices, where decentralization enhances reliability.

Liquid-cooled modular systems maintain stable performance even under fluctuating environmental conditions, which strengthens operational continuity. Therefore, liquid cooling contributes not only to efficiency but also to structural resilience in modern AI infrastructure.
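
The single-point-of-failure argument can be illustrated with a simple probability sketch. It assumes that each cooling domain fails independently with the same probability during some time window. Both the independence assumption and the 1% figure are illustrative, not data about real systems.

```python
def p_all_capacity_lost(p_fail, independent_units):
    """Probability that every independent cooling domain is down at once."""
    if not 0.0 <= p_fail <= 1.0 or independent_units < 1:
        raise ValueError("need 0 <= p_fail <= 1 and at least one unit")
    return p_fail ** independent_units


def p_any_unit_down(p_fail, independent_units):
    """Probability that at least one cooling domain is down."""
    if not 0.0 <= p_fail <= 1.0 or independent_units < 1:
        raise ValueError("need 0 <= p_fail <= 1 and at least one unit")
    return 1.0 - (1.0 - p_fail) ** independent_units


P_FAIL = 0.01  # hypothetical per-domain failure probability in a given window

# One centralized plant serving all clusters vs. four self-contained units.
centralized_total_outage = p_all_capacity_lost(P_FAIL, 1)
distributed_total_outage = p_all_capacity_lost(P_FAIL, 4)
distributed_partial_outage = p_any_unit_down(P_FAIL, 4)

print(f"centralized, all capacity lost: {centralized_total_outage:.2e}")
print(f"distributed, all capacity lost: {distributed_total_outage:.2e}")
print(f"distributed, at least one unit down: {distributed_partial_outage:.4f}")
```

Under these assumptions a total outage becomes far less likely with independent units, while isolated single-unit failures become somewhat more frequent. In other words, distribution shrinks the blast radius of any one failure.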

Liquid Cooling and the Redefinition of Data Center Boundaries

Liquid cooling challenges the traditional definition of what constitutes a data center by enabling compute deployment beyond conventional facilities. When cooling becomes embedded within modular units, the distinction between data centers and edge environments begins to blur.

Organizations can deploy high-density compute capabilities in locations previously considered unsuitable for advanced AI workloads.

This capability expands the geographic footprint of AI infrastructure and accelerates digital transformation across industries. Embedded cooling systems reduce dependence on centralized infrastructure providers, which alters competitive dynamics within the cloud and colocation markets. Liquid cooling contributes to a broader redefinition of data center boundaries in the AI-driven economy.

Strategic Implications for Hyperscalers and Enterprises

Hyperscalers and enterprises face different strategic implications as liquid cooling reshapes AI infrastructure design. Hyperscalers must balance the efficiency gains of liquid cooling with the complexity of integrating new thermal architectures into massive centralized facilities.

Enterprises, on the other hand, often view liquid-cooled modular systems as an opportunity to regain control over compute deployment and infrastructure strategy. This divergence highlights a growing tension between centralized hyperscale models and decentralized enterprise-led AI architectures.

Furthermore, liquid cooling enables enterprises to deploy high-performance inference capabilities without fully relying on hyperscale cloud providers.

Therefore, liquid cooling plays a subtle but significant role in redistributing power across the AI infrastructure ecosystem.

Liquid Cooling as an Enabler of Sustainable AI Growth

Sustainability concerns increasingly influence decisions about AI infrastructure design and deployment. As AI workloads expand, organizations face mounting pressure to reduce the environmental impact of compute-intensive operations.

Liquid cooling supports sustainability objectives by improving energy efficiency and reducing the overall carbon footprint of AI infrastructure. Moreover, modular liquid-cooled systems minimize waste associated with oversized facilities and underutilized cooling capacity. This alignment between efficiency and sustainability strengthens the business case for liquid cooling beyond purely technical considerations. Consequently, liquid cooling emerges as a critical enabler of sustainable AI growth in an era of escalating computational demand.

The adoption of liquid cooling often triggers organizational transformation because infrastructure teams must rethink traditional operational models. Engineering teams increasingly collaborate with data science, cloud architecture, and sustainability teams to design integrated AI infrastructure strategies.

This cross-functional collaboration reflects the growing complexity of AI systems and the interconnected nature of compute, cooling, and deployment decisions. Procurement and finance teams must adapt to new cost structures associated with modular liquid-cooled systems.

As organizations embrace liquid cooling, they often develop new governance frameworks to manage distributed compute environments effectively. Liquid cooling influences not only technical architecture but also organizational culture and decision-making processes.

Competitive Differentiation Through Advanced Cooling Architectures

Advanced cooling architectures increasingly serve as a source of competitive differentiation in the AI-driven economy. Organizations that deploy efficient liquid-cooled systems can scale AI capabilities faster and more reliably than competitors constrained by legacy cooling models.

This advantage translates into faster innovation cycles, improved customer experiences, and stronger data-driven decision-making capabilities.

Moreover, efficient cooling architectures enable organizations to experiment with advanced AI models without incurring prohibitive infrastructure costs. As AI becomes a core driver of business strategy, cooling architecture evolves into a strategic asset rather than an operational utility. Thus, liquid cooling contributes directly to competitive positioning in industries where AI capabilities define market leadership.

The long-term evolution of liquid cooling will likely mirror the broader trajectory of AI infrastructure toward decentralization and modularity. As hardware continues to evolve, cooling systems must adapt to increasingly diverse deployment scenarios and performance requirements.

Liquid cooling architectures will likely become more intelligent, integrating real-time monitoring and adaptive thermal management capabilities. Additionally, industry standards and interoperability frameworks may emerge to facilitate widespread adoption of modular liquid-cooled systems.

These developments will further integrate cooling architecture into the core design of AI infrastructure rather than treating it as an auxiliary component. Therefore, liquid cooling appears poised to shape the structural foundation of next-generation AI systems for years to come.
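
As a rough idea of what adaptive thermal management could look like, the sketch below implements a minimal proportional controller that raises pump duty as coolant outlet temperature climbs above a setpoint. It does not describe any vendor's control logic. The function name, setpoint, gain, and duty limits are all hypothetical values chosen for illustration.

```python
def pump_duty(outlet_temp_c, setpoint_c=45.0, gain_per_c=0.08,
              min_duty=0.2, max_duty=1.0):
    """Proportional control: pump duty (0..1) from coolant outlet temperature.

    Below the setpoint the pump idles at min_duty; above it, duty rises
    linearly with the temperature error until it saturates at max_duty.
    """
    error_c = max(outlet_temp_c - setpoint_c, 0.0)
    duty = min_duty + gain_per_c * error_c
    return min(max_duty, max(min_duty, duty))


# Hypothetical telemetry samples (coolant outlet temperature in Celsius).
samples_c = [30.0, 45.0, 50.0, 70.0]
duties = [pump_duty(t) for t in samples_c]

for temp_c, duty in zip(samples_c, duties):
    print(f"{temp_c:5.1f} C -> pump duty {duty:.2f}")
```

A production controller would typically add integral action, rate limiting, and sensor fault handling. The proportional form is used here only because it is the simplest instance of feedback-based thermal control.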

Strategic Outlook for Liquid Cooling in the Compute Ecosystem

The strategic outlook for liquid cooling reflects a convergence of technological, economic, and organizational forces shaping the future of compute. As AI workloads continue to expand across industries, the limitations of traditional cooling architectures will become increasingly visible. Liquid cooling offers a pathway to overcome these limitations while enabling scalable, efficient, and resilient AI infrastructure. However, its adoption requires organizations to rethink infrastructure strategy, investment models, and operational frameworks.

Those that embrace liquid cooling early may gain structural advantages in deploying and scaling AI capabilities across diverse environments. Therefore, liquid cooling stands not as a niche innovation but as a defining element of the next phase of compute infrastructure evolution.