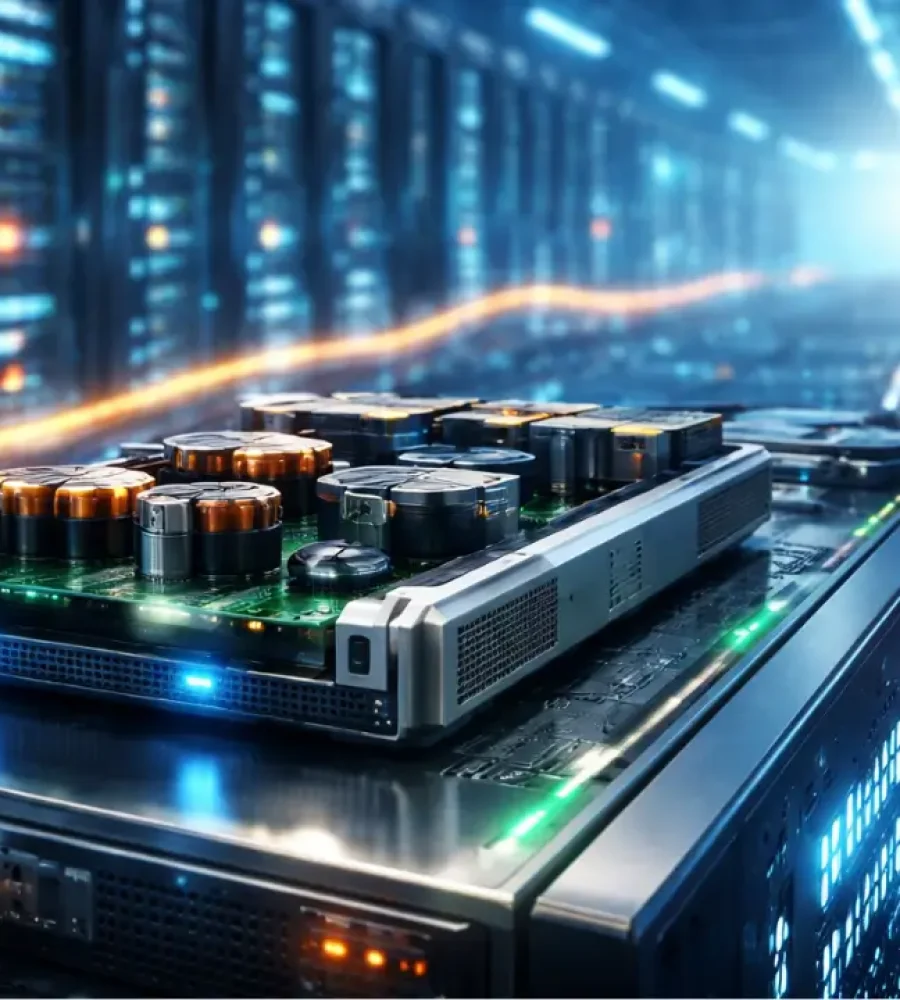

Cold plate design for AI cooling is now shaping the limits of modern computing. As artificial intelligence systems push per-chip power beyond 500 watts and rack densities past 80 kilowatts, traditional air cooling is being left behind. It is increasingly recognized that AI performance gains depend as much on thermal engineering as on silicon advances. Consequently, direct-to-chip liquid cooling has become the baseline for AI accelerators, CPUs, and dense compute platforms.

At the center of this transition sits the cold plate, the point where heat, power, and physics intersect. While AI models evolve quickly, the physical limits of heat removal do not. As a result, cold plate design is being treated less as a component choice and more as a strategic infrastructure decision.

Cold Plate Design for AI Cooling Sets Performance Boundaries

Cold plates act as precision interfaces between processors and liquid coolant. Heat is extracted at this point and carried away before temperature thresholds are crossed. At high power densities, even small inefficiencies can be costly. Uneven coolant flow has been shown to create hotspots, trigger throttling, and accelerate long-term wear.

Industry data illustrates the stakes. GPU performance typically remains stable while junction temperatures stay below 85°C. However, once they rise into the 90–95°C range, clock speeds are reduced and performance losses of 5 to 20 percent are commonly observed. Beyond that range, especially near 100°C, output can fall sharply and failure risk increases. For this reason, cold plate efficiency is increasingly viewed as a direct driver of usable compute capacity.
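One way to see why cold plate efficiency translates into compute capacity is a minimal sketch, assuming a single lumped junction-to-coolant thermal resistance `R_th` (in K/W) at steady state, so that `T_junction = T_inlet + P * R_th`. The function names, the 35 °C inlet temperature, the 700 W load, and both `R_th` values are hypothetical illustrations, not vendor data; the temperature bands are the ones quoted above.

```python
"""Hypothetical lumped thermal model; R_th values are illustrative, not vendor data."""


def junction_temp_c(power_w: float, coolant_inlet_c: float, r_th_k_per_w: float) -> float:
    """Steady-state estimate: T_junction = T_inlet + P * R_th (junction-to-coolant)."""
    return coolant_inlet_c + power_w * r_th_k_per_w


def max_power_w(t_limit_c: float, coolant_inlet_c: float, r_th_k_per_w: float) -> float:
    """Largest power that keeps the junction at or below t_limit_c under the same model."""
    if r_th_k_per_w <= 0:
        raise ValueError("thermal resistance must be positive")
    return (t_limit_c - coolant_inlet_c) / r_th_k_per_w


def thermal_band(t_junction_c: float) -> str:
    """Map a junction temperature onto the bands quoted in the text."""
    if t_junction_c < 85.0:
        return "stable"
    if t_junction_c < 90.0:
        return "transition (not quantified in the text)"
    if t_junction_c <= 95.0:
        return "throttling (roughly 5-20% loss)"
    return "beyond throttling range: sharp losses, higher failure risk"


if __name__ == "__main__":
    # Two hypothetical cold plates cooling the same 700 W GPU with 35 °C inlet coolant.
    for r_th in (0.05, 0.08):
        t_j = junction_temp_c(700.0, 35.0, r_th)
        p_max = max_power_w(85.0, 35.0, r_th)
        print(f"R_th={r_th} K/W: T_j={t_j:.1f} °C ({thermal_band(t_j)}), "
              f"power for 85 °C limit={p_max:.0f} W")
```

Under these assumptions, the 0.05 K/W plate holds the junction near 70 °C, while the 0.08 K/W plate pushes it to about 91 °C, inside the throttling band. Put the other way, the same 85 °C limit supports about 1000 W with the better plate but only about 625 W with the weaker one.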

When cold plates are designed well, more headroom is created. Higher power envelopes can be sustained, hardware lifespans are extended, and total cost of ownership is reduced. As a result, thermal design is now being pulled into early-stage AI system planning.

Engineering Tradeoffs Inside Cold Plate Design for AI Cooling

Designing a cold plate involves balancing geometry, fluid flow, and manufacturability. Internal channel layouts determine how evenly coolant spreads across the chip surface. Straight, parallel channels are easier to produce and offer predictable pressure behavior. However, without careful manifold design, coolant can divide unevenly among the channels, leaving some regions underfed.
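Flow imbalance in parallel channels can be illustrated with a minimal sketch, assuming laminar flow so that each channel behaves like a linear hydraulic resistance (`dP = R_i * Q_i`) and all channels see one common pressure drop. Each hypothetical `R_i` lumps the channel together with its path through the manifolds; the function names, the 2.0 L/min total flow, and the resistance values are illustrative assumptions.

```python
from typing import List


def split_flow(total_flow_lpm: float, resistances: List[float]) -> List[float]:
    """Share a total flow among parallel channels that see one common pressure drop.

    Assumes laminar flow, so each channel obeys dP = R_i * Q_i with a linear
    hydraulic resistance R_i (any consistent units). Each R_i is meant to lump
    the channel plus its path through the inlet and outlet manifolds.
    """
    if total_flow_lpm <= 0 or not resistances or any(r <= 0 for r in resistances):
        raise ValueError("need a positive total flow and positive resistances")
    conductances = [1.0 / r for r in resistances]
    total_conductance = sum(conductances)
    # Common pressure drop is dP = Q_total / sum(1/R_i); each channel gets dP / R_i.
    return [total_flow_lpm * g / total_conductance for g in conductances]


def maldistribution(flows: List[float]) -> float:
    """Largest relative deviation of any channel's flow from the mean."""
    mean = sum(flows) / len(flows)
    return max(abs(q - mean) for q in flows) / mean


if __name__ == "__main__":
    balanced = split_flow(2.0, [1.0, 1.0, 1.0, 1.0])
    # One channel whose manifold path has twice the resistance of the others.
    skewed = split_flow(2.0, [1.0, 1.0, 1.0, 2.0])
    print([round(q, 3) for q in balanced], maldistribution(balanced))
    print([round(q, 3) for q in skewed], round(maldistribution(skewed), 3))
```

In the skewed case the high-resistance channel receives about 0.29 L/min instead of 0.5 L/min, roughly 57 percent of an even share, while the other three channels absorb the difference. That starved channel is where a hotspot would be expected to form.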

More complex structures, such as crossflow paths or pin-fin regions, are often used to improve uniformity and target high-heat zones. At the same time, the fine surface features that enhance heat transfer also make the plate more sensitive to particulates, which can clog narrow passages. Therefore, performance gains must be weighed against reliability risks.

Pressure drop adds another constraint. While aggressive geometries improve heat removal, they also increase demands on pumps and seals. Excessive pressure can lead to material deformation or long-term leakage. For this reason, cold plate design for AI cooling is often optimized around balance rather than extremes.
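The pump side of this tradeoff follows from the standard relation that hydraulic power equals pressure drop times volumetric flow. The sketch below divides that by an assumed overall pump efficiency and scales pressure drop with flow by a rough power law (exponent near 1 for laminar flow, approaching 2 for fully turbulent flow). The 50 kPa, 1.5 L/min, and 50 percent efficiency operating point and the function names are hypothetical.

```python
def pumping_power_w(dp_kpa: float, flow_lpm: float, pump_efficiency: float) -> float:
    """Shaft power needed to push flow_lpm through a pressure drop of dp_kpa.

    Hydraulic power is dP * Q; dividing by an assumed overall pump efficiency
    gives the power the pump must actually supply.
    """
    if not 0.0 < pump_efficiency <= 1.0:
        raise ValueError("pump_efficiency must be in (0, 1]")
    dp_pa = dp_kpa * 1_000.0
    flow_m3_per_s = flow_lpm / 60_000.0  # 1 L/min = 1/60000 m^3/s
    return dp_pa * flow_m3_per_s / pump_efficiency


def scaled_dp_kpa(
    dp_ref_kpa: float, flow_ref_lpm: float, flow_lpm: float, exponent: float
) -> float:
    """Rough power-law scaling of pressure drop with flow for a fixed geometry.

    exponent ~1 for laminar flow, approaching ~2 for fully turbulent flow.
    """
    return dp_ref_kpa * (flow_lpm / flow_ref_lpm) ** exponent


if __name__ == "__main__":
    # Hypothetical operating point for one cold plate: 50 kPa at 1.5 L/min, 50% efficiency.
    base = pumping_power_w(50.0, 1.5, 0.5)
    for n in (1.0, 2.0):
        dp = scaled_dp_kpa(50.0, 1.5, 3.0, n)
        print(f"n={n}: base {base:.2f} W -> doubled flow {pumping_power_w(dp, 3.0, 0.5):.2f} W")
```

At the assumed operating point the pump needs about 2.5 W. Doubling the flow raises that to about 10 W if the pressure drop scales linearly, and to about 20 W if it scales quadratically, while the plate and seals must also withstand two to four times the original pressure.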

Cooling Architectures and the Path Forward

Cold plates generally operate in either single-phase or two-phase cooling systems. Single-phase designs use coolants that remain liquid throughout the loop, such as water or dielectric fluids. These systems are simpler to manage and are widely deployed today. Two-phase approaches introduce controlled boiling; because the coolant absorbs latent heat as it vaporizes, more heat can be absorbed per unit mass. However, tighter control of pressure and flow is required.
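The per-unit-mass advantage of boiling can be checked with simple energy balances: sensible heat `cp * dT` for single-phase flow versus latent heat `x * h_fg` when a fraction `x` of the flow vaporizes. This sketch uses water's approximate properties for both modes to isolate the physics (specific heat about 4186 J/(kg·K), latent heat about 2257 kJ/kg at atmospheric pressure); the 1 kW load, 10 K temperature rise, 10 percent exit quality, and function names are assumptions. It ignores sensible heating before boiling, and practical two-phase cold plates usually use refrigerants or dielectric fluids whose latent heat is substantially lower than water's.

```python
WATER_CP_J_PER_KG_K = 4186.0  # approximate specific heat of liquid water
WATER_H_FG_J_PER_KG = 2_257_000.0  # approximate latent heat of water at atmospheric pressure


def sensible_heat_per_kg(cp_j_per_kg_k: float, delta_t_k: float) -> float:
    """Single-phase: heat absorbed per kg as the liquid warms by delta_t_k."""
    return cp_j_per_kg_k * delta_t_k


def latent_heat_per_kg(h_fg_j_per_kg: float, exit_quality: float) -> float:
    """Two-phase: heat absorbed per kg when a fraction exit_quality of the flow vaporizes.

    Sensible heating before boiling is ignored (fluid assumed to enter near saturation).
    """
    if not 0.0 < exit_quality <= 1.0:
        raise ValueError("exit_quality must be in (0, 1]")
    return h_fg_j_per_kg * exit_quality


def mass_flow_kg_s(heat_w: float, heat_per_kg: float) -> float:
    """Coolant mass flow needed to carry heat_w at the given heat absorbed per kg."""
    return heat_w / heat_per_kg


if __name__ == "__main__":
    q_single = sensible_heat_per_kg(WATER_CP_J_PER_KG_K, 10.0)  # assumed 10 K rise
    q_two = latent_heat_per_kg(WATER_H_FG_J_PER_KG, 0.1)  # assumed 10% exit quality
    for name, q in (("single-phase", q_single), ("two-phase", q_two)):
        print(f"{name}: {q / 1000:.1f} kJ/kg -> {mass_flow_kg_s(1000.0, q) * 1000:.1f} g/s for 1 kW")
```

Under these assumptions, vaporizing just a tenth of the flow absorbs roughly five times as much heat per kilogram as a 10 K sensible rise, so the required mass flow for a 1 kW load drops from about 24 g/s to about 4 g/s.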

In practice, most near-term AI deployments continue to favor single-phase systems due to their robustness. Still, as power densities rise, two-phase designs are being evaluated more seriously.

Manufacturing methods are also evolving. Additive manufacturing is being adopted where complex internal geometries are needed. Producing cold plates as monolithic parts reduces leak paths and improves flow control. Conventional machining remains relevant for simpler designs, but hybrid approaches are becoming more common.

As AI hardware changes rapidly, cooling solutions are being built for iteration. Modular interfaces and faster design cycles are being prioritized. While often overlooked, cold plates are quietly defining how far AI systems can scale.