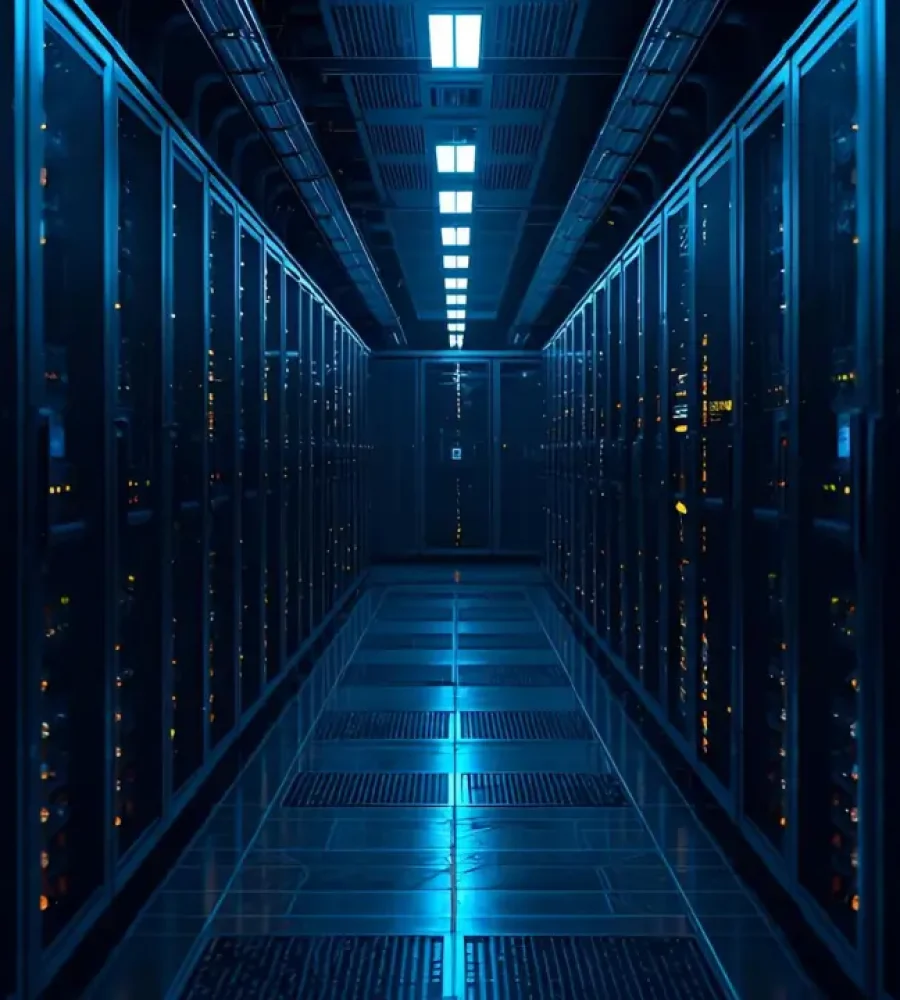

The expansion of always-on digital infrastructure has introduced a structural sustainability challenge that persists regardless of utilization levels. Across global compute environments, large portions of installed capacity remain powered continuously, even when workloads fluctuate or decline. This baseline energy consumption, driven by architectural design choices rather than real-time demand, has become a significant contributor to operational emissions and long-term energy inefficiency.

This article examines how idle capacity, non-negotiable baseline power draw, and infrastructure design assumptions have locked digital systems into permanent energy consumption.

Idle capacity as a structural condition

Idle capacity is not an operational anomaly but a structural feature of modern digital infrastructure. Cloud platforms, enterprise compute environments, and hyperscale facilities are designed to accommodate peak demand scenarios, not average utilization. According to data published by the International Energy Agency, global data center utilization rates often remain below 40 percent, even as total installed capacity continues to grow.

This imbalance is rooted in service-level expectations. Latency-sensitive applications, redundancy requirements, and availability guarantees require excess compute, storage, and networking resources to remain online. As a result, servers, networking switches, and supporting systems draw power continuously, regardless of workload intensity. Even when utilization drops sharply, energy consumption declines only marginally.

The sustainability impact of idle capacity becomes more pronounced at scale. As digital infrastructure footprints expand, the absolute amount of underutilized but powered equipment increases, compounding baseline energy demand across regions.

Baseline power draw and non-linear energy behavior

A defining characteristic of always-on infrastructure is that power consumption does not fall in proportion to utilization. Servers and networking equipment consume a significant portion of their peak power even when performing minimal work. Industry studies indicate that many enterprise-class servers draw between 50 and 70 percent of peak power at idle.
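The effect of an idle floor can be illustrated with a simple first-order model in which power is a fixed idle draw plus a term that scales with utilization. This is a minimal sketch, not a measured power curve: the 500 W peak and the 60 percent idle fraction are illustrative assumptions chosen from within the 50 to 70 percent range cited above, and the function names are hypothetical.

```python
def server_power_watts(utilization: float,
                       peak_watts: float = 500.0,
                       idle_fraction: float = 0.6) -> float:
    """Estimate server power as an idle floor plus a load-dependent term.

    peak_watts and idle_fraction are illustrative assumptions, not
    measurements of any particular server.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    idle_watts = idle_fraction * peak_watts
    # Only the gap between idle and peak responds to workload.
    return idle_watts + (peak_watts - idle_watts) * utilization


def watts_per_unit_utilization(utilization: float) -> float:
    """Power drawn per unit of useful work at a given utilization level."""
    if utilization <= 0.0:
        raise ValueError("utilization must be greater than 0")
    return server_power_watts(utilization) / utilization
```

Under these assumptions, a server at 20 percent utilization draws 340 W, or 68 percent of its peak power, to deliver one fifth of its peak work. Per unit of work, that is 1,700 W compared with 500 W at full load.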

This baseline power draw extends beyond IT equipment. Cooling systems, power distribution units, uninterruptible power supplies, and monitoring systems are engineered to remain active at all times. Mechanical and electrical systems cannot scale down proportionally with workload reductions, particularly in facilities designed for high-density compute.

Cooling and power overheads can account for nearly half of total facility energy consumption, even during periods of low IT utilization. These fixed energy costs effectively lock facilities into a minimum consumption threshold that persists around the clock.
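A rough way to see how fixed overheads create a consumption floor is to split facility overhead into a constant part and a part that tracks IT load. The sketch below assumes a hypothetical facility with 400 kW of fixed overhead and a variable overhead equal to 20 percent of IT load. Both figures, and the function names, are illustrative assumptions rather than data from any real site.

```python
def facility_power_kw(it_load_kw: float,
                      fixed_overhead_kw: float = 400.0,
                      variable_overhead_ratio: float = 0.2) -> float:
    """Total facility power: IT load plus fixed and load-dependent overhead.

    The overhead parameters are illustrative assumptions.
    """
    if it_load_kw <= 0.0:
        raise ValueError("it_load_kw must be greater than 0")
    overhead_kw = fixed_overhead_kw + variable_overhead_ratio * it_load_kw
    return it_load_kw + overhead_kw


def effective_pue(it_load_kw: float) -> float:
    """Power usage effectiveness at the current load: total power / IT power."""
    return facility_power_kw(it_load_kw) / it_load_kw


def overhead_share(it_load_kw: float) -> float:
    """Fraction of total facility power that is not consumed by IT equipment."""
    total_kw = facility_power_kw(it_load_kw)
    return (total_kw - it_load_kw) / total_kw
```

With these assumed values, halving IT load from 1,000 kW to 500 kW reduces total facility power from 1,600 kW to 1,000 kW. The effective PUE rises from 1.6 to 2.0, and overhead grows from 37.5 percent to 50 percent of facility consumption.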

Architectural decisions that reinforce permanence

The sustainability cost of always-on infrastructure is closely tied to architectural decisions made during design and deployment. Traditional infrastructure models prioritize resilience through redundancy, geographic distribution, and continuous availability. While these attributes support reliability, they also entrench constant energy use.

Redundant power paths, mirrored storage systems, and active-active site configurations require duplicate systems to operate simultaneously. Even when failover capacity is not actively serving workloads, it remains fully powered and cooled. These design patterns, established during earlier phases of digital growth, were optimized for uptime rather than energy proportionality.

Virtualization and cloud abstraction have improved workload flexibility but have not eliminated baseline consumption. While virtual machines and containers can be shifted dynamically, the physical hosts beneath them remain energized. Hardware refresh cycles have delivered incremental efficiency gains, but architectural inertia continues to limit sustainability improvements.

The compounding effect of global scale

The sustainability implications of always-on infrastructure are magnified by global scale. Digital services increasingly operate across multiple regions to support latency, resilience, and regulatory requirements. Each regional deployment introduces its own baseline energy footprint, independent of local demand variability.

Global data center electricity consumption reached an estimated 460 terawatt-hours in 2022, according to the International Energy Agency, with baseline loads accounting for a substantial share. As new facilities come online to support artificial intelligence workloads, content delivery, and enterprise digitization, baseline demand grows in parallel.

This dynamic challenges assumptions that efficiency improvements alone can offset growth. Even as power usage effectiveness metrics improve, absolute energy consumption continues to rise because baseline requirements expand with infrastructure footprint.
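The arithmetic behind this point can be sketched directly, since annual facility energy is IT load multiplied by PUE and by hours of operation. The loads and PUE values used in the note below are hypothetical, chosen only to show the direction of the effect, and the function names are assumptions.

```python
HOURS_PER_YEAR = 8760


def annual_facility_energy_mwh(it_load_mw: float, pue: float) -> float:
    """Annual facility energy for a constant IT load at a given PUE."""
    if it_load_mw < 0.0 or pue < 1.0:
        raise ValueError("it_load_mw must be >= 0 and pue must be >= 1")
    return it_load_mw * pue * HOURS_PER_YEAR


def energy_growth_ratio(old_it_mw: float, old_pue: float,
                        new_it_mw: float, new_pue: float) -> float:
    """Ratio of new to old annual energy after footprint and PUE both change."""
    old_energy = annual_facility_energy_mwh(old_it_mw, old_pue)
    new_energy = annual_facility_energy_mwh(new_it_mw, new_pue)
    return new_energy / old_energy
```

For example, if a hypothetical operator doubles its IT load from 100 MW to 200 MW while improving PUE from 1.6 to 1.3, annual energy rises from about 1.40 million MWh to about 2.28 million MWh. That is an increase of 62.5 percent despite the better efficiency metric.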

Energy proportionality as an unresolved constraint

Energy proportionality, the ability of systems to consume power in direct proportion to workload, remains an unresolved constraint in digital infrastructure. While processors have become more efficient under load, idle-state power reductions have not kept pace. Networking equipment, storage arrays, and accelerators often lack deep sleep states compatible with rapid workload scaling.

Cooling systems face similar limitations. Thermal inertia, humidity control, and airflow management require continuous operation to maintain stable environments. Rapid shutdown and restart cycles introduce operational risks that many operators avoid, reinforcing always-on configurations.

These technical constraints highlight a gap between sustainability objectives and infrastructure realities. Without architectures designed explicitly for energy proportionality, idle capacity will continue to carry a high energy cost.

Hidden sustainability costs beyond electricity

The sustainability impact of always-on infrastructure extends beyond electricity consumption. Continuous operation accelerates equipment wear, increasing replacement frequency and the embodied carbon associated with manufacturing and supply chains. Shorter hardware lifespans contribute to higher material throughput and electronic waste volumes.

Water usage also becomes a factor. Cooling systems in many regions rely on evaporative processes that consume water continuously, regardless of IT load. Some large facilities consume millions of liters annually, driven largely by baseline cooling requirements.

These secondary impacts reinforce the need to evaluate sustainability across the full lifecycle of always-on systems, not solely through operational energy metrics.

Emerging pressures on the always-on model

Several industry trends are beginning to challenge the assumptions underlying always-on infrastructure. Workload diversity is increasing, with batch processing, inference, and intermittent analytics workloads exhibiting different availability requirements than traditional enterprise applications.

Edge computing and distributed architectures introduce opportunities for more granular scaling, but they also risk multiplying baseline footprints if deployed without proportional energy controls. Similarly, specialized accelerators improve performance efficiency but can increase idle power if not effectively managed. Most production environments continue to favor availability and predictability over dynamic energy scaling.

Reframing sustainability through utilization awareness

Addressing the sustainability cost of always-on infrastructure requires a shift toward utilization-aware design. This does not imply sacrificing reliability but rather aligning availability strategies with actual workload behavior. Techniques such as workload consolidation, intelligent scheduling, and hardware-level power management can reduce idle energy draw, but only when supported by architectural change.
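Workload consolidation can be illustrated with a first-fit decreasing packing heuristic, which places workloads onto as few hosts as possible. This is a simplified sketch under stated assumptions, not a production scheduler. It assumes identical hosts, an 80 percent utilization ceiling to preserve headroom, a 300 W idle and 500 W peak draw per host, and that emptied hosts can actually be powered off. All names and figures are hypothetical.

```python
from typing import List


def consolidate(loads: List[float], capacity: float = 0.8) -> List[float]:
    """Pack workload utilizations onto hosts using first-fit decreasing.

    Returns the resulting utilization of each host that stays powered on.
    capacity is an assumed per-host utilization ceiling.
    """
    hosts: List[float] = []
    for load in sorted(loads, reverse=True):
        if load > capacity:
            raise ValueError("a single workload exceeds host capacity")
        for i, used in enumerate(hosts):
            # Small tolerance guards against floating-point rounding.
            if used + load <= capacity + 1e-9:
                hosts[i] = used + load
                break
        else:
            # No existing host has room, so another host stays powered.
            hosts.append(load)
    return hosts


def fleet_power_watts(host_utilizations: List[float],
                      idle_watts: float = 300.0,
                      peak_watts: float = 500.0) -> float:
    """Total power of powered-on hosts under an idle-floor power model."""
    return sum(idle_watts + (peak_watts - idle_watts) * u
               for u in host_utilizations)


# Five lightly loaded hosts, one workload each (illustrative values).
spread = [0.2, 0.3, 0.1, 0.25, 0.15]
packed = consolidate(spread)
```

In this example the five workloads fit onto two hosts, and modeled fleet power falls from 1,700 W to 800 W. The saving comes almost entirely from removing three idle floors, which is why it only materializes if the architecture allows the emptied hosts to be shut down.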

Software-defined power management, deeper idle states, and modular infrastructure designs offer potential pathways. However, these approaches require coordination across hardware vendors, operators, and software platforms. Incremental efficiency gains are unlikely to offset the structural energy cost of permanently powered systems on their own.

The industry faces a trade-off between simplicity and sustainability. Always-on architectures simplify operations and guarantee performance, but they embed a persistent energy cost that scales with infrastructure growth.

Conclusion

The sustainability cost of always-on digital infrastructure is not a byproduct of inefficiency but a consequence of deliberate architectural choices. Idle capacity, baseline power draw, and redundancy-driven designs have locked global compute systems into continuous energy consumption, independent of utilization.

As digital infrastructure continues to expand, these baseline demands will shape sustainability outcomes as much as renewable energy sourcing or efficiency metrics. Addressing this challenge requires rethinking how availability, resilience, and energy proportionality intersect at the architectural level.

Understanding and quantifying the sustainability implications of always-on systems is a critical step toward more balanced digital growth, where performance requirements are met without permanently embedding excess energy consumption into the foundation of global infrastructure.