When Heat Becomes the Headline

At first, the warning signs arrive quietly. A rack hums louder than expected. A temperature curve refuses to flatten. An airflow model breaks down under real-world load. Engineers notice these anomalies long before markets do, yet they signal a deeper shift. AI data center cooling has moved from a background utility into the defining constraint of modern digital infrastructure.

The rise of 100kW racks did not emerge from reckless ambition. It followed a predictable trajectory driven by accelerated computing, dense silicon packaging, and AI workloads that push hardware toward continuous maximum utilization. Each step forward in performance carried a thermal cost. Today, that cost dominates design conversations across continents.

This long read examines how AI data center cooling evolved into the primary limiter of scale, why traditional thermal assumptions no longer hold, and how engineering teams now confront physics rather than software abstractions. The focus stays firmly on infrastructure reality, not promotional narratives. Cooling has become the bottleneck because heat respects no roadmap, ignores capital commitments, and resists negotiation.

From Manageable Warmth to Relentless Heat Density

For decades, data centers treated heat as a solvable nuisance. Engineers combined balanced airflow, raised-floor tiles, and hot-aisle containment to maintain stability. Power densities climbed gradually, giving thermal strategies time to adapt. That equilibrium ended when AI workloads changed utilization patterns.

Unlike enterprise servers that idle between requests, AI accelerators operate near peak load for extended periods. Training runs stretch across days or weeks. Inference pipelines run continuously at scale. The result is unbroken thermal stress on every component. AI data center cooling must now address sustained heat rather than short-lived spikes.

Rack density climbed accordingly. Early GPU deployments crossed 20kW, then 40kW, without triggering existential concern. At 60kW, airflow margins narrowed. At 100kW, air-based convective cooling reaches its practical limit. Engineers cannot simply push more air through racks without incurring prohibitive energy penalties and mechanical instability.

Heat density, not compute demand, now defines expansion timelines.

Why 100kW Racks Break Conventional Cooling Logic

Air cooling relies on a simple principle: move enough cooler air across hot surfaces to absorb and remove heat. That principle fails gracefully until it fails abruptly. At extreme densities, airflow velocity must increase sharply to maintain thermal equilibrium. Fans consume more power, create turbulence, and introduce uneven cooling zones.
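To put rough numbers on this principle, the sketch below applies the standard sensible-heat relation (heat removed equals air density times volumetric flow times specific heat times temperature rise) together with the fan affinity law, under which fan power scales roughly with the cube of flow. The air properties, the 15 K temperature rise across the rack, and the function names are illustrative assumptions, not measurements from any particular facility.

```python
# Illustrative assumptions: approximate sea-level air properties, not site data.
AIR_DENSITY = 1.2         # kg/m^3, roughly 20 C at sea level
AIR_SPECIFIC_HEAT = 1005  # J/(kg*K)
M3S_TO_CFM = 2118.88      # cubic feet per minute in one m^3/s


def required_airflow_m3s(heat_w: float, delta_t_k: float) -> float:
    """Volumetric airflow needed to carry heat_w away at a given air temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_w / (AIR_DENSITY * AIR_SPECIFIC_HEAT * delta_t_k)


def relative_fan_power(flow_ratio: float) -> float:
    """Fan affinity law: for a fixed fan and duct, power scales with flow cubed."""
    return flow_ratio ** 3


if __name__ == "__main__":
    for rack_kw in (20, 40, 60, 100):
        flow = required_airflow_m3s(rack_kw * 1000, delta_t_k=15)
        print(f"{rack_kw:>3} kW rack: {flow:5.2f} m^3/s ({flow * M3S_TO_CFM:,.0f} CFM)")
    print(f"5x the airflow through the same fans: ~{relative_fan_power(5):.0f}x fan power")
```

Under these assumptions a 100kW rack needs roughly 5.5 cubic meters of air per second, five times the 20kW figure, and forcing five times the flow through the same fans would cost on the order of 125 times the fan power. Real deployments add or enlarge fans rather than simply spinning them faster, so the cubic figure is an illustration of the trend, not a prediction.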

AI data center cooling at 100kW racks confronts these nonlinear effects. Hot spots form despite nominal airflow targets. Components age faster. Failure rates rise unpredictably. Noise, vibration, and maintenance burdens increase. Engineers face diminishing returns with every incremental adjustment.

The challenge does not stem from poor design. It arises because air lacks sufficient heat capacity at these densities. Physics, not engineering discipline, sets the boundary.
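The gap in heat capacity can be made concrete with a short comparison. The sketch below uses rounded room-temperature properties for air and water and an assumed 10 K coolant temperature rise; the property values and function names are illustrative assumptions, and real coolants such as glycol mixes or dielectric fluids will differ.

```python
# Approximate room-temperature properties (assumed, rounded).
AIR = {"density": 1.2, "specific_heat": 1005}      # kg/m^3, J/(kg*K)
WATER = {"density": 998.0, "specific_heat": 4186}  # kg/m^3, J/(kg*K)


def volumetric_heat_capacity(fluid: dict) -> float:
    """Heat absorbed per cubic meter per kelvin, in J/(m^3*K)."""
    return fluid["density"] * fluid["specific_heat"]


def flow_for_heat_lps(fluid: dict, heat_w: float, delta_t_k: float) -> float:
    """Liters per second of fluid needed to absorb heat_w at a given temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_w / (volumetric_heat_capacity(fluid) * delta_t_k) * 1000


if __name__ == "__main__":
    ratio = volumetric_heat_capacity(WATER) / volumetric_heat_capacity(AIR)
    print(f"Water holds ~{ratio:,.0f}x more heat per unit volume than air")
    for name, fluid in (("air", AIR), ("water", WATER)):
        lps = flow_for_heat_lps(fluid, heat_w=100_000, delta_t_k=10)
        print(f"100 kW at a 10 K rise needs {lps:,.1f} L/s of {name}")
```

With these values, water carries roughly 3,500 times more heat per unit volume than air. Removing 100kW at a 10 K rise takes about 2.4 liters of water per second, against more than 8,000 liters of air per second.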

Thermal Load Becomes a Systemic Constraint

Once racks exceed 80kW, cooling stops being a rack-level problem. It becomes a facility-wide constraint. Chilled water loops, heat exchangers, and cooling towers must scale in parallel. Electrical infrastructure must support both compute and cooling loads without destabilizing operations.

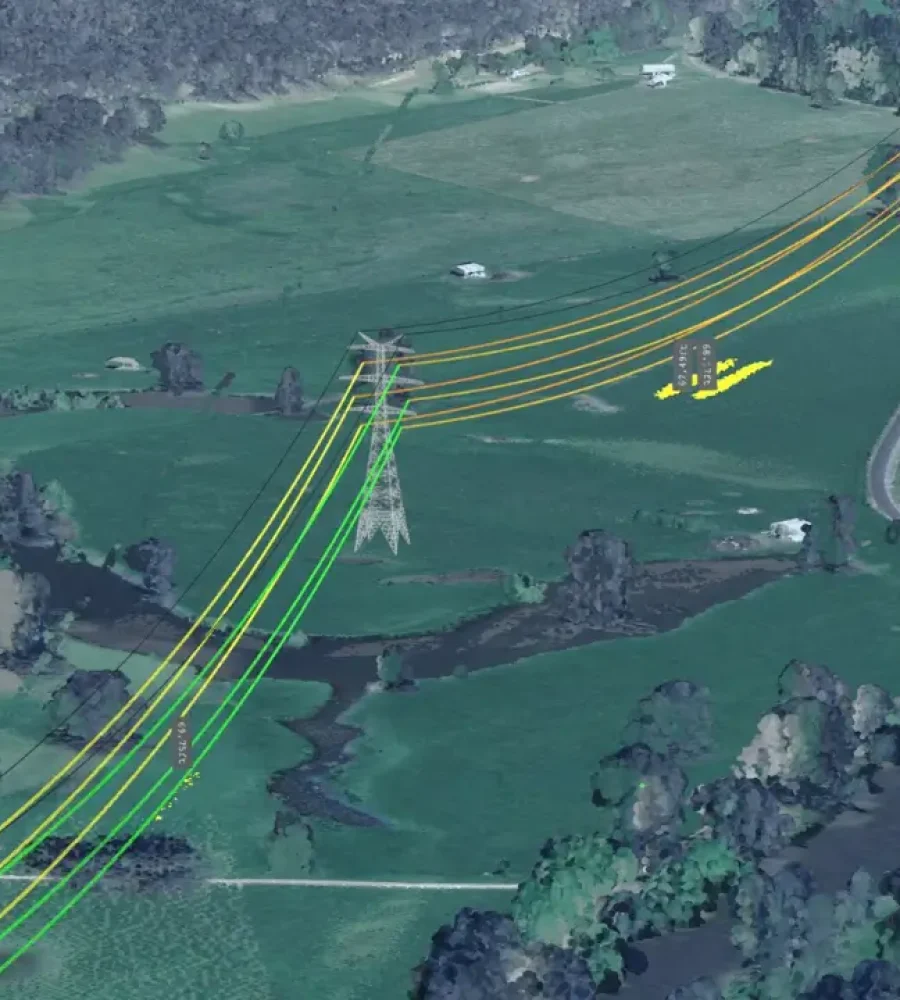

AI data center cooling reshapes site selection as well. Facilities gravitate toward regions with favorable ambient conditions, reliable water access, and robust power grids. Geography reasserts influence in an industry that once promised location independence.

These constraints ripple outward. Project timelines stretch. Capital requirements rise. Deployment schedules depend as much on cooling readiness as on silicon availability. Thermal engineering now dictates pace.

Liquid Cooling Moves From Option to Necessity

As air cooling strains under load, liquid-based solutions move into the mainstream. Direct-to-chip cold plates transfer heat more efficiently by circulating liquid across processors. Immersion cooling submerges hardware in dielectric fluids, eliminating air as an intermediary altogether.

AI data center cooling increasingly depends on these methods, not because they offer marginal gains, but because they unlock feasibility. Liquid removes heat at densities air cannot manage. It stabilizes temperature gradients and reduces mechanical stress on components.

However, liquid cooling introduces complexity. Leak prevention, materials compatibility, maintenance protocols, and operational expertise demand new skill sets. Facilities must redesign layouts, safety procedures, and monitoring systems. The transition carries operational risk even as it solves thermal limits.

Engineering Trade-Offs Inside Liquid Systems

Liquid cooling does not represent a single solution. Engineers choose between cold plate systems, single-phase immersion, and two-phase immersion based on workload profiles, cost tolerance, and operational maturity. Each approach carries trade-offs.

Cold plates integrate with existing rack designs but still rely on some airflow for secondary components. Immersion systems achieve superior thermal performance but complicate hardware servicing. Fluid selection influences heat transfer efficiency, environmental considerations, and long-term stability.

AI data center cooling strategies therefore vary widely across facilities. No universal blueprint exists. Engineers must align thermal design with business objectives, risk tolerance, and regional constraints.

Cooling Infrastructure Redefines Facility Architecture

As cooling systems grow more complex, they reshape physical layouts. Piping networks replace raised floors. Heat exchangers occupy prime real estate. Structural reinforcements support heavier racks filled with liquid-cooled hardware.

AI data center cooling now influences building height, floor loading, and mechanical zoning. Designers consider maintenance access, redundancy paths, and failure isolation at earlier stages. Cooling no longer adapts to architecture; architecture adapts to cooling.

This inversion marks a fundamental shift. Thermal engineering leads design conversations that once prioritized IT density or network topology.

Energy Efficiency Gains Meet Practical Limits

Liquid cooling improves energy efficiency by reducing fan power and enabling higher operating temperatures. Facilities reclaim waste heat for secondary uses in some regions. Power usage effectiveness improves on paper.

Yet efficiency gains encounter diminishing returns. Pumps consume energy. Heat rejection still depends on external systems that face environmental constraints. AI data center cooling reduces overhead but cannot eliminate thermodynamic costs.

The industry now recognizes that efficiency alone cannot offset scale. Absolute energy consumption rises with demand, regardless of per-unit improvements. Cooling mitigates impact but does not neutralize it.
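The distinction between ratio and absolute consumption is easy to see in the arithmetic. The sketch below computes power usage effectiveness as total facility power divided by IT power, then compares two hypothetical sites. Every load figure here is invented purely for illustration, and the function names are assumptions.

```python
def pue(it_kw: float, cooling_kw: float, other_overhead_kw: float = 0.0) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_kw <= 0:
        raise ValueError("it_kw must be positive")
    return (it_kw + cooling_kw + other_overhead_kw) / it_kw


def total_facility_kw(it_kw: float, pue_value: float) -> float:
    """Absolute facility draw implied by an IT load and a PUE."""
    return it_kw * pue_value


if __name__ == "__main__":
    # Hypothetical figures chosen only to illustrate the arithmetic.
    air_it, liquid_it = 10_000, 30_000
    air_pue = pue(air_it, cooling_kw=4_000, other_overhead_kw=1_000)
    liquid_pue = pue(liquid_it, cooling_kw=4_500, other_overhead_kw=1_500)
    print(f"Air-cooled site:    PUE {air_pue:.2f}, "
          f"{total_facility_kw(air_it, air_pue):,.0f} kW total")
    print(f"Liquid-cooled site: PUE {liquid_pue:.2f}, "
          f"{total_facility_kw(liquid_it, liquid_pue):,.0f} kW total")
```

In this made-up comparison the liquid-cooled site posts the better ratio (1.20 against 1.50) while drawing more than twice the absolute power, because its IT load tripled.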

Water Use and Environmental Pressure

Cooling at scale often requires water, either directly or indirectly. Evaporative cooling towers consume significant volumes, particularly in arid regions. Liquid cooling reduces airflow dependence but does not always reduce water use.

AI data center cooling therefore intersects with local environmental realities. Water availability, regulatory scrutiny, and community impact shape deployment decisions. Engineers must balance thermal performance against sustainability constraints without relying on abstract offsets.

These pressures intensify as facilities cluster in power-rich regions that may already face resource stress.

Reliability Risks Rise With Thermal Complexity

Every additional component introduces potential failure points. Pumps fail. Valves stick. Sensors drift. AI data center cooling systems demand rigorous monitoring and predictive maintenance to avoid cascading outages.

Thermal excursions at 100kW densities occur rapidly. Response windows shrink. Automated controls must act decisively without introducing instability. Human intervention arrives too late in many scenarios.
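A back-of-envelope model shows why the response window shrinks with density. The sketch below assumes a lumped, adiabatic system: heat rejection stops entirely, all rack heat flows into a fixed mass of water-based coolant, and the thermal mass of the hardware is ignored. The 50 kg coolant mass and 20 K of headroom are hypothetical values chosen for illustration.

```python
WATER_SPECIFIC_HEAT = 4186  # J/(kg*K), approximate


def seconds_to_limit(heat_w: float, coolant_mass_kg: float, headroom_k: float,
                     specific_heat: float = WATER_SPECIFIC_HEAT) -> float:
    """Time for a coolant mass to warm by headroom_k if heat rejection stops.

    Lumped, adiabatic model: all heat goes into the coolant, none escapes,
    and the thermal mass of the hardware itself is ignored.
    """
    if heat_w <= 0:
        raise ValueError("heat_w must be positive")
    return coolant_mass_kg * specific_heat * headroom_k / heat_w


if __name__ == "__main__":
    # Hypothetical loop: 50 kg of coolant and 20 K of temperature headroom.
    for rack_kw in (20, 40, 100):
        t = seconds_to_limit(rack_kw * 1000, coolant_mass_kg=50, headroom_k=20)
        print(f"{rack_kw:>3} kW rack: ~{t:.0f} s until the coolant reaches its limit")
```

Under these assumptions the same loop that buys about three and a half minutes at 20kW buys roughly 42 seconds at 100kW. Real systems have additional thermal mass and partial cooling during most faults, so actual windows vary, but the inverse relationship with heat load holds.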

Reliability engineering now integrates tightly with thermal design. Cooling failures rank among the highest-risk events in AI-native facilities.

Supply Chains Shape Cooling Outcomes

Cooling infrastructure depends on specialized components with long lead times. Heat exchangers, industrial pumps, and custom piping assemblies face manufacturing constraints. Global supply chains strain under concurrent expansion.

AI data center cooling timelines increasingly hinge on mechanical procurement rather than server delivery. Delays in cooling components idle expensive compute assets. Project managers recalibrate expectations accordingly.

This dependency underscores how physical infrastructure governs digital ambition.

Skills Gaps and Operational Learning Curves

Operating liquid-cooled facilities requires new expertise. Mechanical engineers collaborate more closely with IT teams. Training programs evolve. Incident response protocols adapt to unfamiliar failure modes.

AI data center cooling exposes workforce gaps that software-driven industries long overlooked. Knowledge transfer takes time. Early adopters absorb operational lessons that shape industry norms.

The learning curve remains steep, especially for regions scaling rapidly.

Cooling Economics and Capital Allocation

Cooling infrastructure commands a growing share of capital budgets. High-density designs concentrate cost into fewer square meters. Financial models adjust to reflect longer payback periods and higher upfront investment.

AI data center cooling decisions influence pricing structures and capacity commitments. Operators must ensure utilization aligns with capital intensity. Idle capacity carries heavier penalties when cooling systems scale beyond immediate demand.

These economic pressures reinforce cautious expansion strategies.

Global Variations in Cooling Strategy

Cooling approaches differ by region due to climate, regulation, and grid characteristics. Northern climates leverage free cooling. Tropical regions invest heavily in liquid systems. Water-scarce areas pursue closed-loop designs.

AI data center cooling therefore resists standardization. Multinational operators tailor designs to local conditions while maintaining operational consistency. This balancing act complicates scale but reflects reality.

The Feedback Loop Between Silicon and Cooling

Chip designers respond to thermal limits by optimizing efficiency, but performance gains often outpace cooling improvements. Each new generation promises better performance per watt, yet total wattage rises with deployment scale.

AI data center cooling remains locked in a feedback loop with silicon evolution. Advances on one side push constraints on the other. Neither discipline can decouple from physics.

This dynamic defines the next phase of infrastructure development.

Failure Scenarios and Thermal Risk Planning

Worst-case scenarios drive design decisions. Engineers model pump failures, power interruptions, and extreme weather events. Redundancy layers multiply. Safety margins shrink as densities rise.

AI data center cooling strategies incorporate probabilistic risk assessment rather than deterministic assumptions. Operators plan for unlikely but catastrophic thermal events. Insurance frameworks adapt to reflect these risks.
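One simple form of probabilistic assessment is a binomial availability estimate for redundant pumps. The sketch below assumes that each pump is independently available with the same probability, which is a strong simplification: common-cause events such as a power loss or contaminated fluid violate independence. The pump counts, the 99 percent availability figure, and the function name are hypothetical.

```python
from math import comb


def prob_at_least(k_needed: int, n_installed: int, unit_availability: float) -> float:
    """P(at least k_needed of n_installed units are working), assuming independence."""
    if not 0.0 <= unit_availability <= 1.0:
        raise ValueError("unit_availability must be between 0 and 1")
    if not 0 <= k_needed <= n_installed:
        raise ValueError("k_needed must be between 0 and n_installed")
    p, q = unit_availability, 1.0 - unit_availability
    return sum(
        comb(n_installed, i) * p**i * q**(n_installed - i)
        for i in range(k_needed, n_installed + 1)
    )


if __name__ == "__main__":
    # Hypothetical: the loop needs 4 pumps; each is available 99% of the time.
    for spares in (0, 1, 2):
        ok = prob_at_least(4, 4 + spares, 0.99)
        print(f"N+{spares}: P(enough pumps) = {ok:.6f}, shortfall = {1 - ok:.2e}")
```

In this toy case a single spare pump cuts the chance of a shortfall from about 4 percent to about 0.1 percent. The model says nothing about correlated failures, which is where much of the real design effort goes.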

Preparation replaces optimism.

Regulatory Oversight Enters the Thermal Domain

Authorities increasingly scrutinize data center cooling due to environmental and grid impacts. Permitting processes examine water use, heat rejection, and resilience planning. Compliance requirements shape design choices.

AI data center cooling thus operates within a tightening regulatory landscape. Transparency and documentation gain importance. Engineering decisions carry policy implications.

Innovation Without Illusion

Research continues into advanced fluids, heat reuse, and alternative architectures. Some concepts promise incremental gains. None repeal thermodynamics. Engineers pursue realism rather than miracle solutions.

AI data center cooling innovation focuses on manageability, reliability, and integration rather than radical breakthroughs. Progress arrives through disciplined iteration.

Cooling as the New Scaling Law

In earlier eras, Moore’s Law guided expectations. Today, cooling capacity defines the slope of expansion curves. Facilities scale until heat removal reaches feasible limits.

AI data center cooling now functions as an implicit scaling law. It governs how fast and how far infrastructure can grow. Strategic planning incorporates thermal constraints from the outset.

Human Factors in Thermal Engineering

Behind every system stand engineers making judgment calls under uncertainty. They interpret sensor data, respond to anomalies, and refine designs through experience. Cooling challenges test institutional knowledge.

AI data center cooling elevates the role of human expertise in an industry enamored with automation. Judgment matters when margins disappear.

Looking Ahead Without Speculation

Current trajectories suggest continued pressure on cooling systems as AI workloads expand. Densities may stabilize temporarily as infrastructure adapts, yet demand shows no sign of retreat.

AI data center cooling will remain the limiting factor not because engineers lack creativity, but because physics imposes boundaries. Understanding those boundaries defines responsible expansion.

Cooling Shapes the Tempo of Global AI Expansion

Across regions racing to deploy AI infrastructure, schedules increasingly bend around thermal readiness. Power contracts may be signed, silicon shipments may arrive on time, and network capacity may be provisioned, yet all of it can sit idle while cooling systems undergo commissioning. AI data center cooling dictates when compute becomes usable, not the other way around.

Developers now stage projects in phases that align with mechanical completion rather than IT installation. Commissioning teams test fluid flow rates, pressure tolerances, and failover responses long before workloads go live. These steps add months to deployment cycles but reduce catastrophic risk. Heat punishes haste.

This reality changes how expansion plans are communicated to investors and regulators. Timelines stretch, contingencies multiply, and buffers become standard practice rather than conservative exceptions.

Thermal Telemetry Becomes Mission Critical

Sensors once served as diagnostic tools. Today, they function as operational lifelines. AI data center cooling relies on dense telemetry networks that track temperature, pressure, flow, and vibration in real time. Data streams feed automated control systems that adjust cooling behavior within seconds.

Granularity matters. Averages hide danger at high densities. Engineers monitor micro-variations across racks, manifolds, and chips. Machine learning models increasingly assist by detecting early signs of imbalance before thresholds breach.
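A small example shows how an average can look healthy while individual sensors do not. The sketch below compares the fleet mean with per-sensor checks: one against an absolute limit and one against drift from the median. The sensor names, readings, 32 C limit, and 3 K spread are hypothetical values chosen for illustration, not operating guidance.

```python
from statistics import mean, median


def find_hot_spots(readings_c: dict[str, float], limit_c: float,
                   max_spread_k: float) -> dict:
    """Compare the fleet average with per-sensor checks.

    over_limit: sensors above the absolute limit.
    drifting:   sensors within the limit but more than max_spread_k above the median.
    """
    values = list(readings_c.values())
    mid = median(values)
    return {
        "mean_c": mean(values),
        "max_c": max(values),
        "over_limit": sorted(n for n, t in readings_c.items() if t > limit_c),
        "drifting": sorted(
            n for n, t in readings_c.items() if mid + max_spread_k < t <= limit_c
        ),
    }


if __name__ == "__main__":
    # Hypothetical inlet temperatures in degrees Celsius.
    inlet = {
        "rack-01": 24.1, "rack-02": 23.8, "rack-03": 24.5, "rack-04": 24.0,
        "rack-05": 23.9, "rack-06": 24.3, "rack-07": 38.6, "rack-08": 29.5,
    }
    report = find_hot_spots(inlet, limit_c=32.0, max_spread_k=3.0)
    print(f"mean {report['mean_c']:.1f} C, max {report['max_c']:.1f} C")
    print("over limit:", report["over_limit"])
    print("drifting:  ", report["drifting"])
```

Here the mean sits near 26.6 C, comfortably under the assumed limit, while one rack is well over it and another is drifting upward. A dashboard that showed only the average would miss both.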

Despite automation, human oversight remains essential. Engineers interpret anomalies, validate responses, and refine parameters. Cooling intelligence blends software insight with mechanical intuition.

The Cost of Overcooling

Cooling too aggressively introduces its own problems. Excessive flow rates erode components. Rapid temperature swings stress materials. Energy consumption spikes without proportional benefit. AI data center cooling requires precision rather than brute force.

Engineers aim for thermal equilibrium, not maximum cooling capacity. That balance grows harder as workloads fluctuate and models evolve. Adaptive cooling strategies adjust to workload profiles, reducing unnecessary strain while preserving headroom.
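One way to picture precision over brute force is a control step that nudges pump speed toward a setpoint while capping how fast it may change. The sketch below is a deliberately simple rate-limited incremental controller, not a description of any vendor's control system. The gain, step limit, speed bounds, and function name are hypothetical tuning values.

```python
def next_pump_speed(current_pct: float, temp_c: float, setpoint_c: float,
                    gain_pct_per_k: float = 5.0, max_step_pct: float = 2.0,
                    min_pct: float = 30.0, max_pct: float = 100.0) -> float:
    """One control step: adjust pump speed in proportion to the temperature error.

    The change per step is capped at max_step_pct to avoid abrupt flow swings,
    and the result is clamped to the allowed speed range.
    """
    error_k = temp_c - setpoint_c  # positive when the loop runs hot
    desired_change = gain_pct_per_k * error_k
    step = max(-max_step_pct, min(max_step_pct, desired_change))
    return max(min_pct, min(max_pct, current_pct + step))


if __name__ == "__main__":
    speed = 60.0
    # Hypothetical coolant temperatures over successive control steps.
    for temp in (48.0, 47.0, 46.0, 45.0, 43.0):
        speed = next_pump_speed(speed, temp, setpoint_c=45.0)
        print(f"temp {temp:.1f} C -> pump speed {speed:.1f}%")
```

The step cap is the design choice worth noting: even a large temperature error moves the pump by at most two percentage points per step, trading some response speed for gentler flow transitions. A production controller would add integral and derivative terms, sensor validation, and fail-safe behavior.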

This nuanced approach challenges legacy assumptions that colder always equals safer. Stability now outranks minimal temperature targets.

Maintenance in a Liquid-Cooled World

Maintenance practices evolve alongside cooling systems. Draining loops, inspecting seals, and managing fluids demand specialized procedures. Downtime planning becomes more complex when racks integrate tightly with cooling infrastructure.

AI data center cooling shifts maintenance from reactive replacement to predictive intervention. Operators analyze wear patterns and fluid chemistry to anticipate failures. Scheduled servicing replaces emergency response whenever possible.

These practices reduce risk but increase operational sophistication. Smaller operators face steeper learning curves than hyperscale peers with deep mechanical expertise.

Insurance and Risk Assessment Adapt

As cooling systems grow central, insurers reassess exposure. Policies scrutinize thermal redundancy, monitoring protocols, and incident history. Premiums reflect the severity of cooling-related failures at high densities.

AI data center cooling thus influences financial risk models. Facilities with robust thermal design and operational discipline secure more favorable terms. Poorly documented systems face higher costs or limited coverage.

Risk management now intersects directly with engineering quality.

Standardization Efforts and Their Limits

Industry groups pursue standards to guide liquid cooling adoption. Interface specifications, safety guidelines, and testing protocols aim to reduce fragmentation. These efforts improve interoperability and confidence.

Yet AI data center cooling resists full standardization. Site-specific variables dominate outcomes. Climate, water chemistry, regulatory requirements, and workload mix defy uniform solutions. Standards provide guardrails, not prescriptions.

Engineering judgment fills the gaps left by documentation.

Cooling and the Question of Scale Efficiency

High-density designs promise efficiency by concentrating compute. Cooling challenges complicate that promise. At extreme densities, marginal efficiency gains shrink while complexity rises.

AI data center cooling reveals that scale efficiency follows a curve, not a straight line. Beyond certain thresholds, operational risk and capital intensity offset density benefits. Some operators explore moderate densities to balance performance and manageability.

This recalibration reflects maturity rather than retreat.

Workforce Safety and Operational Culture

Liquid systems introduce safety considerations unfamiliar to traditional data centers. Handling fluids, managing pressure systems, and responding to leaks require rigorous training. Safety protocols expand accordingly.

AI data center cooling influences workplace culture by elevating mechanical safety alongside cybersecurity. Cross-disciplinary collaboration becomes routine. Mechanical engineers, electricians, and IT staff coordinate closely during operations.

This integration strengthens resilience but demands cultural adaptation.

Cooling as a Competitive Differentiator

Facilities that master thermal management gain strategic advantage. Faster deployment, higher reliability, and predictable performance attract demanding workloads. Cooling expertise becomes a selling point rather than an internal detail.

AI data center cooling capabilities differentiate operators in an increasingly crowded market. Customers evaluate not only compute availability but also thermal resilience. Transparency around cooling design builds trust.

Competition shifts toward infrastructure competence.

Heat Reuse and Its Practical Constraints

Some facilities capture waste heat for district heating or industrial use. These projects demonstrate ingenuity and local benefit. However, integration depends on proximity, demand consistency, and regulatory alignment.

AI data center cooling supports heat reuse where conditions allow, but such schemes remain supplemental rather than transformative. Heat quality, timing, and location limit scalability.

Reuse enhances efficiency without redefining fundamentals.

Climate Variability Adds Uncertainty

Rising ambient temperatures and extreme weather events strain cooling systems. Heat waves reduce cooling efficiency precisely when demand peaks. Flooding threatens mechanical infrastructure.

AI data center cooling strategies incorporate climate resilience through elevated equipment placement, redundant heat rejection paths, and conservative design margins. Long-term climate models inform site selection.

Adaptation becomes integral to thermal planning.

Lessons From Early Adopters

Facilities that pioneered 100kW racks provide valuable lessons. Success depends on integrated design, thorough testing, and operational discipline. Failures often trace back to underestimated complexity or rushed deployment.

AI data center cooling benefits from shared experience across the industry. Conferences, technical papers, and informal networks disseminate hard-earned knowledge. Collective learning accelerates maturity.

Progress emerges through transparency rather than secrecy.

Cooling and the Pace of Innovation

Thermal limits influence how quickly new hardware generations deploy. Operators delay adoption until cooling systems adapt. Chip designers consider thermal envelopes more carefully.

AI data center cooling thus moderates innovation pace without halting it. Constraints encourage efficiency improvements and architectural creativity. Boundaries shape progress.

Innovation continues within physical limits.

The Infrastructure Reality Check

The narrative of limitless digital growth meets its counterweight in thermal engineering. Servers cannot escape heat. Data centers cannot ignore the environment. AI data center cooling grounds ambition in material reality.

This grounding does not diminish technological achievement. It clarifies the conditions under which progress remains sustainable and reliable.

Engineering at the Edge of Feasibility

Cooling the impossible does not mean defeating physics. It means operating skillfully at its edge. Engineers balance performance, risk, and responsibility in environments where margins vanish.

AI data center cooling stands as the quiet determinant of AI scale. Not algorithms, not capital, but the ability to move heat safely defines what comes next. As racks push toward and beyond 100kW, cooling remains the real limiter, shaping the future of digital infrastructure with unwavering authority.