Artificial intelligence now supports much of our global economic infrastructure. Consequently, the electricity requirements of hyperscale data centers are growing steeply. Corporate sustainability mandates historically emphasized a transition to 100 percent renewable energy. However, the physical realities of AI workloads reveal a growing mismatch. Wind and solar output is stochastic. In contrast, next-generation compute clusters have deterministic, high-intensity demands. This discrepancy represents more than a policy hurdle. It is a systemic challenge rooted in the physics of power systems and power electronics. Furthermore, the architectural inertia of 20th-century electrical grids limits rapid adaptation.

The Baseload Problem: Physics vs. Policy

The fundamental disconnect between AI development and renewable energy lies in firmness: the ability to deliver power on demand, around the clock. AI data centers require 24/7 power to train multi-trillion-parameter models and to serve real-time inference for users worldwide. Traditional cloud workloads follow predictable diurnal cycles. In contrast, AI training runs are sustained, high-utilization events that can last for months without pause. They draw near-maximum power with little variation in average demand. This creates a load profile that requires a capacity factor near 99 percent. Solar power, by contrast, achieves a capacity factor of roughly 20 percent. Wind power does somewhat better at 30 to 45 percent.
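The capacity-factor gap can be turned into a rough overbuild figure. The Python sketch below assumes a 1-gigawatt campus running at the 99 percent load factor cited above and computes how much nameplate capacity a solar or wind fleet would need just to match the campus's average energy use. It ignores timing, storage, and transmission losses entirely, so the result is an optimistic floor rather than a design number; the function name is illustrative.

```python
# Nameplate capacity needed so that a variable source's *average* output
# matches a near-constant load. This ignores timing entirely: it says nothing
# about the hours when the sun is down or the wind is calm.

def required_nameplate_gw(load_gw: float, load_factor: float, source_cf: float) -> float:
    """Average energy demanded divided by the source's capacity factor."""
    if not 0 < source_cf <= 1:
        raise ValueError("capacity factor must be in (0, 1]")
    return load_gw * load_factor / source_cf

LOAD_GW = 1.0        # illustrative 1-gigawatt AI campus
LOAD_FACTOR = 0.99   # near-continuous training load, per the text

for name, cf in [("solar", 0.20), ("wind (low)", 0.30), ("wind (high)", 0.45)]:
    gw = required_nameplate_gw(LOAD_GW, LOAD_FACTOR, cf)
    print(f"{name:12s} CF={cf:.0%}: {gw:.2f} GW nameplate")
```

Even this floor implies roughly five gigawatts of solar per gigawatt of firm demand, before a single battery is installed.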

The reliance on intermittent resources creates a reliability gap that existing lithium-ion battery technology cannot bridge. Modern utility-scale battery systems are designed for short-duration frequency regulation and four-hour energy shifting. For a 1-gigawatt AI campus, backing up just 24 hours would require 24 gigawatt-hours of storage. By some estimates, a single 1-gigawatt-hour installation requires 700,000 tons of raw material and embodies manufacturing energy 450 times its rated capacity. Scaling this to cover multi-day periods of low wind and sun would be impractical, requiring capital and minerals beyond current global production.
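Storage sizing is simple multiplication: energy equals power times duration. The sketch below, assuming a constant 1-gigawatt load and ignoring round-trip losses, compares the four-hour systems described above with a one-day outage and a hypothetical three-day lull in wind and sun.

```python
# Energy a battery fleet must hold to carry a constant load through an outage.
# Energy (GWh) = power (GW) x duration (h); round-trip losses are ignored here.

def storage_gwh(load_gw: float, hours: float) -> float:
    return load_gw * hours

LOAD_GW = 1.0
TYPICAL_BATTERY_HOURS = 4  # the four-hour systems described in the text

for hours in (4, 24, 72):  # 72 h is a hypothetical multi-day lull
    need = storage_gwh(LOAD_GW, hours)
    multiple = need / storage_gwh(LOAD_GW, TYPICAL_BATTERY_HOURS)
    print(f"{hours:3d} h outage: {need:5.1f} GWh ({multiple:.0f}x a 4-hour, 1-GW system)")
```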

Technical Implications of High-Density Power Electronics

The quality of power demanded by AI clusters introduces significant risks. Modern AI servers interface with the grid through high-frequency power converters. Traditional electromechanical loads provide physical inertia to stabilize grid frequency. However, power electronics are inertia-less systems. They respond to grid conditions with extreme speed. Sometimes this response exacerbates local instabilities.

AI training workloads are also notoriously bursty at short timescales. Power fluctuations of hundreds of megawatts can occur within seconds, and these rapid transients can trigger frequency deviations. Furthermore, the concentration of power supplies creates severe harmonic distortion. Harmonics are current components at integer multiples of the fundamental grid frequency, produced when loads draw current in non-sinusoidal pulses. They do not contribute to useful work. Instead, they cause excessive heating in utility transformers and distribution lines. This added thermal and electrical stress degrades the physical backbone of the grid.
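Ramp rate, the change in power per unit time, is the quantity that captures these transients. The sketch below computes the largest ramp in a sampled facility power trace; the trace values, the 1-second sampling interval, and the scenario of a job pausing and resuming are all hypothetical.

```python
# Maximum ramp rate (MW per second) in a sampled facility power trace.
# The trace values below are hypothetical and only illustrate the calculation.

def max_ramp_mw_per_s(samples_mw: list[float], interval_s: float) -> float:
    """Largest absolute change between consecutive samples, per second."""
    if len(samples_mw) < 2:
        return 0.0
    return max(abs(b - a) for a, b in zip(samples_mw, samples_mw[1:])) / interval_s

# Hypothetical 1-second samples: a training job pauses, then resumes.
trace = [300.0, 298.0, 301.0, 120.0, 115.0, 118.0, 290.0, 302.0]
print(max_ramp_mw_per_s(trace, interval_s=1.0))  # 181.0
```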

The Harmonic Crisis and IEEE 519 Standards

Data centers function as concentrated nonlinear electrical loads. They draw current in sharp, non-sinusoidal pulses that grids were not designed to accommodate. This behavior raises total harmonic distortion (THD), a metric utilities monitor closely to prevent equipment damage. IEEE 519 limits voltage THD to 8 percent on buses at or below 1,000 V. However, AI clusters often push past these boundaries.
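THD is the root-sum-square of the harmonic components divided by the fundamental, $\mathrm{THD} = \sqrt{\sum_{h \ge 2} V_h^2} / V_1$. The sketch below applies that definition to a hypothetical per-unit voltage spectrum and checks it against the 8 percent low-voltage limit cited above. The spectrum values are invented for illustration, with low-order odd harmonics typical of rectifier-based loads; a real assessment would also check individual harmonic limits and current distortion at the point of common coupling.

```python
import math

IEEE519_VTHD_LIMIT_LV = 0.08  # 8% voltage THD limit for buses at or below 1 kV

def thd(harmonic_rms: dict[int, float]) -> float:
    """Total harmonic distortion: RMS of harmonics h >= 2 over the fundamental."""
    fundamental = harmonic_rms[1]
    distortion = math.sqrt(sum(v * v for h, v in harmonic_rms.items() if h >= 2))
    return distortion / fundamental

# Hypothetical per-unit voltage spectrum dominated by the 5th and 7th harmonics.
spectrum = {1: 1.0, 5: 0.06, 7: 0.05, 11: 0.03}
value = thd(spectrum)
print(f"THD = {value:.2%}, within limit: {value <= IEEE519_VTHD_LIMIT_LV}")
# THD = 8.37%, within limit: False
```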

Infrastructure Impacts of Harmonic Distortion:

- Utility Transformers: High-frequency currents drive up eddy current losses. This leads to accelerated insulation aging and premature failure.

- Capacitor Banks: Harmonics cause excessive heating and voltage stress. This results in dielectric breakdown or catastrophic failure.

- Protective Relays: Distortion can cause false circuit breaker tripping. This leads to unscheduled outages for the facility and its neighbors.

- End-User Motors: Harmonics cause pulsating and reduced torque. This leads to mechanical vibrations and shortened operating lives.
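The transformer heating listed above is why transformers serving nonlinear loads are often rated by K-factor, which weights each harmonic current's share of the total by the square of its order, $K = \sum_h (I_h / I_{\mathrm{rms}})^2 h^2$, because winding eddy-current losses grow roughly with $h^2$. The sketch below computes it for a purely sinusoidal load and for a hypothetical nonlinear current spectrum; the spectrum values are illustrative only.

```python
# Transformer K-factor: each harmonic current's share of total I^2, weighted
# by h^2, since winding eddy-current loss grows roughly with harmonic order squared.

def k_factor(harmonic_rms_a: dict[int, float]) -> float:
    total_sq = sum(i * i for i in harmonic_rms_a.values())
    return sum((i * i / total_sq) * h * h for h, i in harmonic_rms_a.items())

linear = {1: 100.0}                                # pure sinusoidal current
rectifier = {1: 100.0, 5: 20.0, 7: 14.0, 11: 9.0}  # hypothetical nonlinear load
print(k_factor(linear), round(k_factor(rectifier), 2))
```

A value of 1 means no extra harmonic heating; the hypothetical spectrum, whose harmonics are small relative to the fundamental, still yields a K-factor above 3.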

When transformers operate beyond their limits, they deliver distorted waveforms. A recent event in Northern Virginia illustrated the broader risk clearly. A voltage disturbance lasting only milliseconds caused dozens of data centers to switch to backup generation simultaneously. The resulting 1,500-megawatt drop in demand nearly caused a regional blackout, as the grid operator struggled to reduce generation as fast as the centers shed load.

The Storage and Duration Gap

The physics of battery storage reveals a fundamental inefficiency. Many manufacturers cite round-trip efficiencies of 85 percent. However, field data suggests that real-world efficiency is closer to 70 percent once inverter losses and thermal management are included. At 70 percent, every megawatt-hour discharged requires roughly 1.43 megawatt-hours of charging. If a 1-gigawatt data center drew all of its power through storage, operators would need roughly 430 megawatts of additional generation just to cover the losses. This creates a compounding requirement for land and materials that renewables alone struggle to satisfy.
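Because losses come out of the energy that goes in, the extra generation is the delivered power divided by the round-trip efficiency, minus the delivered power. The sketch below compares the 85 percent manufacturer figure with the 70 percent field figure for 1,000 megawatts delivered entirely through storage, an upper-bound assumption since real sites cycle only part of their load through batteries.

```python
def extra_generation_mw(delivered_mw: float, round_trip_eff: float) -> float:
    """Generation needed on top of the delivered power to cover storage losses."""
    if not 0 < round_trip_eff <= 1:
        raise ValueError("round-trip efficiency must be in (0, 1]")
    return delivered_mw / round_trip_eff - delivered_mw

for eff in (0.85, 0.70):
    print(f"RTE {eff:.0%}: {extra_generation_mw(1000.0, eff):.0f} MW extra")
```

At the field efficiency the penalty is about 429 MW, more than double the roughly 176 MW implied by the manufacturer figure.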

The Density Explosion: From 120kW to 600kW

The energy challenge of AI intensifies because of a radical shift in hardware. Traditional CPU-based server racks drew 5 to 12 kilowatts. NVIDIA’s Blackwell architecture has pushed per-rack requirements to roughly 120 kilowatts, and future clusters may target 600 kilowatts per rack. This density explosion creates a soda-straw effect: a single data center building can consume as much electricity as a small city, yet that demand is drawn through a tiny footprint.
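The soda-straw effect can be seen by counting racks. The sketch below assumes a hypothetical 100-megawatt data hall and computes how many racks that load occupies at a legacy density, a Blackwell-era density, and the projected 600-kilowatt density; it counts IT load only and ignores cooling and power distribution.

```python
import math

def racks_needed(it_load_mw: float, kw_per_rack: float) -> int:
    """Whole racks required to host a given IT load at a given rack density."""
    return math.ceil(it_load_mw * 1000 / kw_per_rack)

for density_kw in (10, 120, 600):
    print(f"{density_kw:4d} kW/rack -> {racks_needed(100, density_kw):6d} racks for 100 MW")
```

The same 100 MW shrinks from about 10,000 racks to under 200, which is what concentrates heat and current into so small an area.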

NVIDIA’s Blackwell Ultra GPU has a thermal design power of 1,400 W per chip. Aggregated into racks, these systems generate immense heat that operators must remove continuously; failure to do so quickly leads to thermal throttling or hardware damage. The energy required to move data adds to the thermal load, as high-speed interconnects such as fifth-generation NVLink contribute to the power envelope. This concentration of heat necessitates a transition to liquid cooling, including direct-to-chip and immersion technologies.
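Removing that heat with liquid follows from the energy balance $Q = \dot{m}\,c_p\,\Delta T$. The sketch below assumes a hypothetical rack of 72 GPUs at the 1,400 W TDP cited above (GPU heat only, excluding CPUs, memory, and networking), water as the coolant, and a 10 K temperature rise across the rack; all three are illustrative choices rather than vendor specifications.

```python
# Water flow needed to carry away a rack's heat: Q = m_dot * c_p * dT.
WATER_CP_J_PER_KG_K = 4186.0   # specific heat of water near room temperature
WATER_DENSITY_KG_PER_L = 1.0   # approximation

def water_flow_l_per_min(heat_w: float, delta_t_k: float) -> float:
    mass_flow_kg_s = heat_w / (WATER_CP_J_PER_KG_K * delta_t_k)
    return mass_flow_kg_s / WATER_DENSITY_KG_PER_L * 60.0

gpu_heat_w = 72 * 1400   # hypothetical rack of 72 GPUs at 1,400 W each
print(f"{gpu_heat_w / 1000:.1f} kW -> {water_flow_l_per_min(gpu_heat_w, 10.0):.0f} L/min at 10 K rise")
```

Halving the allowed temperature rise doubles the required flow, which is one reason coolant distribution is sized carefully.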

Thermodynamics and the Cooling Overhead

Liquid cooling is more efficient than air cooling at the chip level. However, the system-level energy overhead remains substantial. Liquid cooling can reduce node-level consumption by roughly 16 percent, keeping GPUs at 46 to 54 degrees Celsius compared with 55 to 71 degrees Celsius under air cooling. Nevertheless, the absolute power draw of AI hardware is rising, so total facility load continues to climb regardless of cooling efficiency. A single node draws 4.8 kilowatts for the GPUs alone, and total system power reaches 7 kilowatts once auxiliary components are included.

The parasitic load of a data center is measured by Power Usage Effectiveness (PUE), the ratio of total facility energy to IT equipment energy. Leading hyperscalers achieve a PUE as low as 1.15. Even so, maintaining thermal stability in a campus with 1 gigawatt of IT load means carrying at least 150 megawatts of non-compute power, and less efficient sites exceed that. This creates a scaling problem: as compute density increases, the supporting electrical infrastructure must grow with it, and transformers and switchgear carry lead times of 18 months.
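Since PUE is total facility power divided by IT power, the non-compute overhead is the IT load times (PUE − 1). The sketch below applies this to 1 gigawatt of IT load at the immersion-cooling figure of 1.02, the hyperscaler figure of 1.15, and a hypothetical less efficient site at 1.5.

```python
def overhead_mw(it_load_mw: float, pue: float) -> float:
    """Non-IT facility power implied by a PUE (total facility / IT power)."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_mw * (pue - 1.0)

for pue in (1.02, 1.15, 1.5):
    print(f"PUE {pue:.2f}: {overhead_mw(1000.0, pue):.0f} MW overhead on 1 GW of IT")
```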

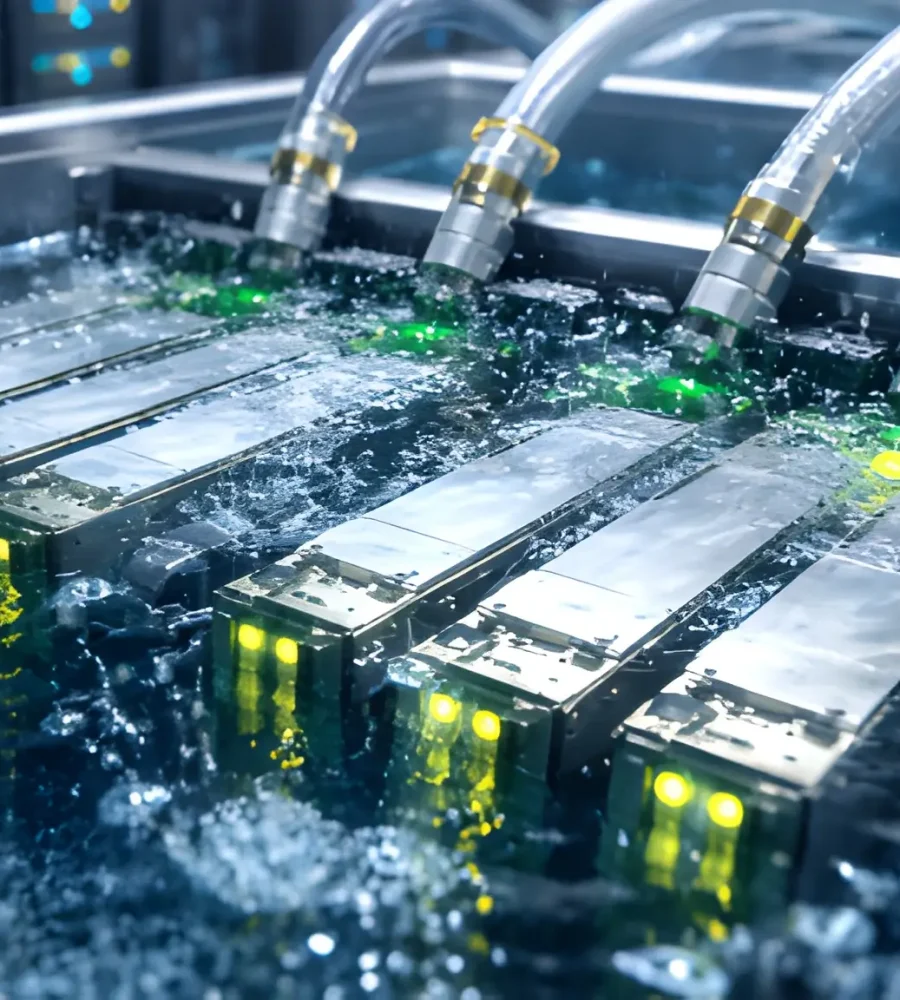

The Physics of Liquid Immersion Cooling:

- Heat Capacity: The volumetric heat capacity of water is roughly 3,500 times that of air. This enables cooling for 100-kilowatt racks that fans cannot handle.

- Dielectric Fluids: Immersion cooling submerges servers in non-conductive fluids. These liquids dissipate heat via convection or phase change.

- Efficiency Limits: Immersion cooling can achieve a PUE as low as 1.02. However, it requires massive capital expenditure for specialized tanks.

- Space Savings: Immersion-cooled systems take up one-third the space of air-cooled equivalents. Yet, the weight of the fluid requires reinforced flooring.
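The 3,500x figure matches the ratio of volumetric heat capacities, meaning how much heat a given volume of fluid absorbs per degree of temperature rise. The sketch below reproduces it from textbook property values for water and air near room temperature; dielectric immersion fluids generally store less heat per unit volume than water, so the ratio for them is lower.

```python
# Volumetric heat capacity = density x specific heat (J per cubic metre per kelvin).
WATER = {"density_kg_m3": 1000.0, "cp_j_kg_k": 4186.0}
AIR = {"density_kg_m3": 1.2, "cp_j_kg_k": 1005.0}   # near sea level, about 20 °C

def volumetric_heat_capacity(fluid: dict[str, float]) -> float:
    return fluid["density_kg_m3"] * fluid["cp_j_kg_k"]

ratio = volumetric_heat_capacity(WATER) / volumetric_heat_capacity(AIR)
print(f"water stores ~{ratio:,.0f}x more heat per unit volume than air")
```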

The Evolution of Computational Efficiency

Companies are turning to architectural optimizations to counter rising costs. Google’s TPU v6e and AWS Trainium3 claim significant efficiency improvements. However, the Jevons Paradox suggests a different outcome: as compute becomes more efficient and therefore cheaper, total demand for it rises, which can lead to higher overall energy consumption.
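The Jevons effect can be illustrated with a toy constant-elasticity demand model. The sketch below assumes, purely for illustration, that an efficiency gain of k cuts the energy cost per unit of compute to 1/k and that demand for compute scales as k raised to a price elasticity e. Total energy then scales as k^(e − 1): it falls when demand is inelastic (e < 1) and rises when demand is elastic (e > 1). The elasticity values are hypothetical, not measured.

```python
# Toy constant-elasticity model: if efficiency improves by factor k, the energy
# cost per unit of compute falls to 1/k, and demand scales as k ** elasticity.
# Total energy then scales as k ** (elasticity - 1).

def energy_multiplier(efficiency_gain: float, elasticity: float) -> float:
    return efficiency_gain ** (elasticity - 1.0)

for e in (0.5, 1.0, 1.5):
    print(f"elasticity {e}: energy x{energy_multiplier(4.0, e):.2f} after a 4x efficiency gain")
```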

AI Accelerator Specifications and Efficiency:

- NVIDIA H100: This model uses 700W and 80 gigabytes of memory. It serves as the current baseline for high-performance training.

- NVIDIA Blackwell Ultra: This chip reaches 1,400W and 288 gigabytes of memory. It offers up to 25 times greater inference efficiency.

- Google TPU v5p: This custom ASIC uses more than 600W. It is optimized specifically for Transformer architectures.

- AWS Trainium3: This chip uses an estimated 1,400W. It delivers a 40 percent efficiency gain over its predecessor.

The trend toward larger models requires massive amounts of high-bandwidth memory, and every additional gigabyte contributes to idle power draw. For instance, an 8x B200 system draws 140 watts per GPU when idle. This represents a significant vampire load that operators must power 24/7 regardless of activity.
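Annualizing the idle figure shows why it matters. The sketch below multiplies the 140-watt-per-GPU idle draw by the hours in a year, first for one 8-GPU system and then for a hypothetical fleet of 100,000 GPUs; it counts GPU idle power only, not hosts, networking, or cooling.

```python
HOURS_PER_YEAR = 8760

def idle_energy_mwh_per_year(gpus: int, idle_w_per_gpu: float) -> float:
    """Energy consumed per year by GPUs sitting idle around the clock."""
    return gpus * idle_w_per_gpu * HOURS_PER_YEAR / 1e6

print(f"{idle_energy_mwh_per_year(8, 140):.1f} MWh/year for one 8-GPU system")
print(f"{idle_energy_mwh_per_year(100_000, 140):,.0f} MWh/year for 100,000 GPUs")
```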

The Failure of Renewables to Scale

The central ambition of the tech sector, powering AI solely with wind and solar, is hitting a wall. Tech firms are colliding with the reality of grid interconnection. Nearly 2,300 gigawatts of capacity are waiting in U.S. interconnection queues, nearly double the total installed capacity of the entire U.S. grid. A staggering 95 percent of this backlog consists of renewable energy and storage projects.

The interconnection queue crisis creates a major delay. A hyperscaler might sign a power agreement today for a new solar farm. Yet, it may take seven years for that power to reach the grid. In contrast, a data center is built in as little as 16 months. This timeline disparity creates a structural shortage. Operators must rely on existing fossil-fuel power to meet their immediate needs.

Land Use and the Transmission Bottleneck

Renewable energy projects require vast amounts of land. Solar requires roughly 5 to 10 acres per megawatt. These farms are often located far from urban centers. Connecting a remote wind farm to an AI hub requires high-voltage lines. These projects face intense NIMBY (“Not In My Back Yard”) opposition.
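Combining the land figure with solar's roughly 20 percent capacity factor gives a sense of scale. The sketch below assumes 1 gigawatt of average demand met entirely by solar on an annual-energy basis, with no storage losses; it is a rough lower bound, not a siting estimate.

```python
def solar_land_acres(avg_load_mw: float, capacity_factor: float,
                     acres_per_mw: float) -> float:
    """Land for enough solar nameplate to match an average load over a year."""
    nameplate_mw = avg_load_mw / capacity_factor
    return nameplate_mw * acres_per_mw

low = solar_land_acres(1000, 0.20, 5)
high = solar_land_acres(1000, 0.20, 10)
print(f"{low:,.0f} to {high:,.0f} acres")  # 25,000 to 50,000 acres
```

For reference, 25,000 to 50,000 acres is roughly 39 to 78 square miles.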

In Virginia, residents have organized against the construction of 500-kilovolt lines with towers up to 185 feet tall. Such projects often involve multi-state permitting processes, and legal challenges can delay them for a decade. Public skepticism remains high: a recent poll found that only 44 percent of Americans would welcome a data center nearby, making data centers less popular than gas plants or wind farms.

Comparison of Transmission Infrastructure Challenges:

- Standard Regional Lines: These systems operate at 138 to 230 kilovolts. They have moderate visual impact but limited capacity for 1-gigawatt campuses.

- High-Voltage Lines: These 500-kilovolt systems use towers up to 185 feet tall. They face massive opposition due to community disruption.

- Super-High-Voltage Lines: These 765-kilovolt lines were historically built to move bulk power from coal plants. Now AI hubs need them for bulk power delivery.

- Underground HVDC: These lines have low visual impact. However, they cost up to 50 times more than overhead lines.

The carbon-negative commitment of tech firms is becoming a mirage. Microsoft contracted for 19 gigawatts of new renewable energy in 2024, yet its carbon emissions are rising. Microsoft reported a 29 percent increase in total emissions from its 2020 baseline, driven primarily by Scope 3 emissions from construction and hardware. Google reported a 48 percent increase in emissions over five years, citing data center energy consumption as the main cause.

The Institutional Inertia of Grid Modernization

The mismatch between digital growth and infrastructure is compounded by regulatory inertia. A data center can move from site selection to operation in 24 months, while the average timeline for a new transmission line is 15 years. This gap poses a systemic risk to AI leadership. Some jurisdictions are considering assigning data centers lower queue positions if they do not meet onsite generation requirements.

The Return to Firm Power: Nuclear and Gas

The AI industry is orchestrating a revival of traditional firm power. Faced with the limits of renewables, tech companies have made nuclear energy a centerpiece of their strategy. Once considered a legacy technology, nuclear is now a vital part of tech energy planning. Microsoft signed a 20-year agreement with Constellation Energy to restart Unit 1 of the Three Mile Island nuclear station, now renamed the Crane Clean Energy Center. The facility is expected to provide 835 megawatts of carbon-free power starting in 2027.

Amazon Web Services also moved to secure nuclear power, contracting for 1.92 gigawatts from the Susquehanna plant through Talen Energy. These deals represent a fundamental shift in procurement: hyperscalers are moving away from virtual offsets toward the direct offtake of physical, reliable electrons.

The Bridge of Natural Gas

Nuclear is the long-term preference for carbon goals. However, because AI expansion is outpacing nuclear restarts, natural gas has emerged as the unavoidable bridge fuel. In regions like Northern Virginia, utilities are adding gas-fired capacity. Dominion Energy is proposing a four-unit gas complex in Chesterfield County to maintain grid reliability despite state mandates to decarbonize.

In Ireland, a moratorium on new data center connections was recently lifted, but any new facility must now meet strict conditions. It must install on-site generation or battery systems capable of meeting its full demand, and it must feed power back into the grid during peak times. The result is a proliferation of on-site gas turbines: data centers are effectively turning into distributed power plants.

Small Modular Reactors and the 2030 Timeline

The tech industry is also investing in Small Modular Reactors (SMRs) as a future source of localized power. AWS is collaborating with utilities in Washington state on deployments totaling 320 megawatts. Google has partnered with Kairos Power on its own development program. However, these technologies are not expected to reach commercial scale until the early 2030s.

Strategic Firm Power and SMR Projects:

- Crane Clean Energy: This project provides 835 megawatts of capacity. It targets a 2027 start date with Microsoft.

- Susquehanna PPA: This agreement with an existing nuclear plant provides 1,920 megawatts. It targets a full ramp-up by 2032 with AWS.

- Chesterfield Plant: This natural gas project has multiple units. It targets 2028 to bridge data center demand.

- Kairos Power: This SMR project offers up to 500 megawatts. It targets a 2030 start date with Google.

- Oklo Aurora: This aggressive SMR timeline targets 2027. It is intended to serve early data center operations.

Propane and On-Site Resiliency

Some operators are exploring propane as a lower-emission alternative to traditional diesel backup generators. Propane can be stored in portable tanks and has an indefinite shelf life, which makes it suitable for bridging needs when grid connections are delayed. This fuel offers a pathway to resilient data centers that adapt to site-specific energy constraints.

Geopolitics and the AI Arms Race

The race to dominate AI has transformed energy policy from a domestic concern into a national security issue. Countries with cheap and reliable power gain a competitive advantage in attracting AI infrastructure, and AI energy diplomacy is creating new geopolitical alignments. The United States leads the world in capacity today. However, the domestic energy crunch threatens this leadership. If U.S. companies cannot find power at home, they may build hyperscale facilities abroad, which could compromise U.S. digital sovereignty.

China’s Dual-Track Strategy

China presents a unique model for fueling AI growth. It achieved its 2030 renewable targets six years ahead of schedule. At the same time, it continues to build coal-fired power plants, approving them at a rate of 66.7 gigawatts per year. China’s strategy is one of calculated redundancy: it uses renewables to meet growth but maintains a massive coal fleet to ensure energy security during periods of intermittency.

This hybrid approach allows Chinese AI developers to scale infrastructure without the interconnection delays seen in Western markets. China accounted for 93 percent of global coal power construction starts in 2024. Many projects are justified as providing a regulating function for the grid, supporting high-tech industrial parks and AI clusters. State planning and domestic manufacturing capacity shorten decision-making, allowing sustained growth without fragmentation.

Digital Sovereignty and the Energy Trilemma

Policymakers must balance the Energy Trilemma of energy security, equity, and sustainability. In Virginia, demand for data center power is projected to double within ten years. This could increase residential electricity bills by $37 per month by 2040 as utilities pass grid upgrade expenses on to consumers.

Data centers have become a policy powerhouse, driving decisions on tax incentives and pipeline projects. Some regions prioritize data center connections based on efficiency, while others require onsite generation for new connections.

Regional AI Energy Strategies:

- United States: This region combines deep capital markets with nuclear restarts. It also uses emergency orders to expedite transmission permits.

- European Union: This region is moving toward the EuroStack vision. It aims to triple capacity while facing fragmented networks.

- United Kingdom: Officials are establishing AI Growth Zones. These zones expedite infrastructure development on public land.

- Gulf States: These nations leverage abundant gas and nuclear power. They target 10 percent of global AI-optimized compute.

- Ireland: This nation serves as a systemic warning signal. Data center consumption exceeds 20 percent of national electricity.

Proposed Solutions and Future Outlook

Solving the AI energy paradox requires a multi-faceted approach that integrates technological innovation with policy reform. Relying on a single energy source is no longer feasible. The future of AI infrastructure will be defined by energy campuses: self-contained ecosystems that co-locate compute with generation.

Engineered Carbon Removals

Hyperscalers are investing billions in engineered carbon removal to maintain net-zero pledges while using firm power. Microsoft is the largest corporate buyer of carbon removal credits, having contracted for over 34 million tonnes. A key focus is Enhanced Rock Weathering, which involves spreading crushed silicate rock over farmland. The rock reacts with atmospheric CO2 dissolved in rainwater to form stable bicarbonate ions, locking carbon away for thousands of years.
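The chemistry can be illustrated with the idealized weathering of forsterite, the magnesium end-member of olivine; the basalt and other silicate rocks used in practice react more slowly and less completely. In this idealized case, each mole of forsterite converts up to four moles of CO2 into dissolved bicarbonate:

```latex
\mathrm{Mg_2SiO_4} + 4\,\mathrm{CO_2} + 4\,\mathrm{H_2O} \;\longrightarrow\; 2\,\mathrm{Mg^{2+}} + 4\,\mathrm{HCO_3^-} + \mathrm{H_4SiO_4}
```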

Strategies for Sustainable AI Scaling:

- Liquid Immersion Cooling: This technology has a two-year adoption timeline. It provides a 20 percent gain in thermal efficiency.

- SMR Deployment: This provides reliable and carbon-free baseload power. It is constrained by regulatory scale and licensing pathways.

- Enhanced Rock Weathering: This provides large-scale carbon removal. Credits range from $230 to $400 per tonne.

- Efficiency ASICs: These chips deliver 4 times more performance per watt. They are constrained by semiconductor supply chains.

- Direct Air Capture: This technology targets costs of $600 per tonne by 2030. It requires gigawatts of power to be effective.

Efficiency Gains and Demand Flexibility

The industry is also pursuing demand-side load flexibility. Companies are exploring continuous batching, which keeps inference hardware fully utilized, and temporal shifting, which moves non-urgent AI training tasks to times when renewables are abundant. Furthermore, Small Language Models offer a promising alternative. These models use far fewer parameters and can run locally on edge devices, consuming up to 75 percent less energy than Large Language Models for certain tasks.
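Temporal shifting can be sketched as a sliding-window search over a renewable-share forecast. The sketch below assumes a hypothetical hourly forecast and a deferrable job of fixed length, and picks the contiguous window with the highest average renewable share; the function name and data are illustrative, and a production scheduler would also weigh deadlines, prices, and hardware availability.

```python
# Pick the contiguous window with the highest average renewable share for a
# deferrable job. The hourly forecast below is hypothetical.

def best_window(renewable_share: list[float], job_hours: int) -> int:
    """Return the start hour of the window with the highest mean renewable share."""
    if not 0 < job_hours <= len(renewable_share):
        raise ValueError("job must fit inside the forecast horizon")
    best_start, best_sum = 0, float("-inf")
    for start in range(len(renewable_share) - job_hours + 1):
        window_sum = sum(renewable_share[start:start + job_hours])
        if window_sum > best_sum:
            best_start, best_sum = start, window_sum
    return best_start

forecast = [0.20, 0.18, 0.22, 0.35, 0.55, 0.70, 0.72, 0.60, 0.40, 0.25]
print(best_window(forecast, job_hours=3))  # 5
```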

Strategic Assessment: The 2030 Landscape

The AI-Energy Nexus will fundamentally reshape the global grid by 2030. Renewables will remain a critical part of the mix, but their role will evolve: they will become a fuel saver that supplements firm, reliable generation. The most successful AI hubs will combine nuclear restarts, gas-to-grid partnerships, and storage technologies.

The reliance on renewables alone has proven insufficient. This is not due to a lack of environmental will. Instead, the physics of 100-kilowatt racks demands firmness, which current intermittent technology cannot provide at scale. The transition to AI-driven economies necessitates a pragmatic energy strategy that prioritizes grid stability and speed-to-power. Carbon reduction remains important but cannot be the only goal. Organizations that view energy as a strategic asset, managing and optimizing it at the rack level, will secure the lead and become the winners in the next era of human intelligence.

Institutional Challenges in Grid Modernization:

- Site Selection: This takes 2 to 6 months for data centers. It takes up to 5 years for grid infrastructure.

- Permitting: Data centers complete permitting in 9 months. Grid infrastructure requires up to 7 years.

- Construction: Hyperscale centers finish in 20 months. Grid projects require 10 years.

- Interconnection: This adds 2 years for centers but 7 years for the grid.

Ultimately, the sector is experiencing an investment supercycle that requires up to $3 trillion by 2030. Roughly 100 gigawatts of new capacity will come online globally, effectively doubling the size of the sector. Speed to power has replaced latency as the primary criterion for site selection. Developers are looking toward green energy parks in regional locations and seeking behind-the-meter generation partners. The organizations that survive this shift will be those that innovate at the intersection of energy and compute.