The industrialization of artificial intelligence marks a major turning point in global computing history. This transition moves the industry from a software-driven digital paradigm into a hardware-constrained physical reality. As 2030 approaches, the engineering of high-density physical infrastructure will dictate the ability of firms to train advanced models. This report provides a comprehensive strategic roadmap for investors and policymakers. It outlines the systemic shifts necessary to support next-generation AI workloads through the end of the decade.

The Physical Engineering Challenge

Building sustainable infrastructure for AI has become a complex multi-domain engineering challenge that transcends the boundaries of a traditional IT rollout. The core thesis of this analysis is that physical constraints, namely power availability, thermal management, and supply chain resilience, currently limit the scaling of AI. The industry will encounter several critical bottlenecks between 2026 and 2030, even as global data center power demand is likely to surge by up to 175 percent compared to 2023 levels.

Infrastructure and Transmission Bottlenecks

This expansion requires the global electric grid to support an additional 100 gigawatts of capacity. However, utility planning timelines for high-voltage transmission lines often span five to ten years. This creates a structural mismatch between the pace of digital innovation and the pace of physical construction. At the same time, the strategic imperatives for the next five years center on unprecedented rack density, with rack-level power consumption climbing toward a future standard of 300 to 600 kilowatts.

Transitioning to New Standards

Managing these massive loads requires a total overhaul of facility design. Operators are phasing out air cooling in favor of direct-to-chip and immersion systems. Additionally, high-voltage direct current architectures are replacing traditional alternating current distribution. This shift minimizes conversion losses and material costs. For investors, the financial landscape is shifting toward capital-intensive models. These models must reflect the rapid depreciation cycles of modern GPU hardware.

Investment and Sustainability Imperatives

Total annual spending on data center infrastructure will likely surpass 1 trillion dollars by 2030. Furthermore, the industry requires a cumulative 3 trillion dollars in financing by 2029. Policymakers must simultaneously navigate economic competitiveness and environmental sustainability. Emerging regulations regarding water usage and carbon emissions will dictate the geographic distribution of AI megafacilities. Success requires a holistic understanding of how physical infrastructure determines the competitive upper bound of artificial intelligence.

Timeline of Primary Infrastructure Bottlenecks

- 2025 to 2026: Advanced Packaging (CoWoS) serves as the primary constraint. Accordingly, the strategic imperative involves diversifying assembly partners to mitigate limits on accelerator availability.

- 2026 to 2027: High-Bandwidth Memory (HBM) supply acts as the main bottleneck. Therefore, operators should lock in multi-year contracts to manage rising costs.

- 2027 to 2029: Grid Interconnection becomes the central constraint. The strategic imperative involves deploying on-site generation and microgrids to avoid campus build delays.

- 2029 to 2030: Environmental regulations act as the primary constraint. Operators must adopt sustainable cooling fluids to ensure global site selection viability.

Defining High-Density AI Infrastructure

High-density AI infrastructure involves the extreme concentration of compute power and thermal flux. This evolution represents a qualitative shift in how mechanical and electrical systems support computational work. In practical terms, high-density refers to server racks that exceed the thermal limits of air-based cooling. This threshold typically begins at 20 kilowatts per rack and extends into the 300 to 600 kilowatt range.

Redefining Computational Concentration

The physical architecture of modern accelerators drives this shift toward higher densities. Large-scale AI training requires operators to place massive clusters of GPUs in close proximity to maintain low-latency communication over high-speed interconnects like InfiniBand. However, this clustering creates a massive heat density that standard air-based methods could only handle with hurricane-force airflow. High-density infrastructure therefore focuses on bringing cooling and power delivery directly to the silicon.

Mechanical and Networking Specifications

Mechanical specifications are also changing rapidly. Traditional data centers once supported distributed weight loads of 150 pounds per square foot. In contrast, a fully loaded AI rack can weigh several thousand pounds. This requires reinforced floor slabs and specialized material handling systems. Floor loading requirements now reach 400 to 600 pounds per square foot. Furthermore, high-density clusters demand a higher ratio of networking switches to compute nodes. Operators often utilize 800G optical interconnects to prevent data bottlenecks between racks.
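A rough sketch in Python shows why slab ratings are climbing: it spreads a rack's weight evenly over the rack's own footprint. The 3,000-pound AI rack, the 1,000-pound legacy rack, and the 600 mm × 1,200 mm footprint are hypothetical inputs, and the calculation ignores aisle area, so it approximates the load directly under the rack rather than a structural design rating.

```python
SQFT_PER_SQM = 10.7639  # square feet in one square metre

def footprint_load_psf(rack_weight_lb: float, width_m: float, depth_m: float) -> float:
    """Pounds per square foot if the rack's weight is spread over its own footprint only."""
    area_sqft = width_m * depth_m * SQFT_PER_SQM
    return rack_weight_lb / area_sqft

# Hypothetical racks on the same 600 mm x 1,200 mm footprint
legacy_psf = footprint_load_psf(1_000, 0.6, 1.2)
ai_rack_psf = footprint_load_psf(3_000, 0.6, 1.2)
print(f"legacy rack: {legacy_psf:.0f} lb/ft^2, AI rack: {ai_rack_psf:.0f} lb/ft^2")
```

Under these assumptions the AI rack lands near 390 lb/ft², well above the legacy 150 lb/ft² rating and at the lower edge of the 400 to 600 lb/ft² range.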

Footprints and Site Selection

The transition to high-density environments has major implications for site selection. Compared with legacy 15 kilowatt racks, physical footprints can shrink by a factor of twenty when rack densities climb to 300 kilowatts. However, the electrical requirements of the hall remain the same, so power per square foot rises in direct proportion to rack density. Power density can now exceed 2,000 watts per square foot facility-wide. Therefore, proximity to high-capacity electrical substations becomes far more important than land cost.
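The footprint argument can be checked with a short Python sketch that holds IT load fixed and varies rack density. The 100 MW load and the 30 ft² allowance per rack position (the rack plus its share of aisle) are hypothetical; the resulting watts per square foot cover white space only, so facility-wide figures that include grey space come out lower.

```python
import math

def hall_metrics(it_load_kw: float, rack_kw: float, sqft_per_rack: float = 30.0):
    """Return (rack count, white-space ft^2, W/ft^2) for a fixed IT load.

    sqft_per_rack is a hypothetical allowance for one rack plus its share of aisles.
    """
    racks = math.ceil(it_load_kw / rack_kw)          # whole racks needed
    area_sqft = racks * sqft_per_rack                 # white space only
    watts_per_sqft = it_load_kw * 1_000 / area_sqft   # IT watts per square foot
    return racks, area_sqft, watts_per_sqft

legacy = hall_metrics(100_000, 15)   # hypothetical 100 MW in 15 kW racks
dense = hall_metrics(100_000, 300)   # the same load in 300 kW racks
print(legacy, dense, round(legacy[1] / dense[1], 1))
```

Moving from 15 kW to 300 kW racks cuts the rack count from 6,667 to 334 and the white-space area by a factor of about twenty, while power per square foot rises by the same factor.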

The Rise of Integrated Rack-Scale Systems

The fundamental building block of this next generation is the rack-scale system. Rather than individual servers, operators engineer the entire rack as a single integrated unit. Google’s Project Deschutes and Nvidia’s Kyber architecture represent the early stages of this trend. These designs consolidate power conversion, battery backup, and cooling manifolds at the rack level. This modularity allows for the rapid deployment of complete “AI Factories” where the infrastructure arrives pre-validated.

Evolution of Data Center Generations and Physical Constraints

- Cloud 1.0: Focused on enterprise apps and storage. Racks used 5 to 15 kW with forced air cooling. The primary physical constraint was the real estate footprint.

- Cloud 2.0: Focused on big data and early ML. Densities reached 15 to 50 kW using hybrid air cooling. Power availability served as the main constraint.

- AI Factory: Current facilities focus on large model training. Racks use 100 to 300 kW with liquid cooling. Thermal management is the primary constraint.

- Ultra-Density: Future sites will focus on AGI-scale training. Densities will reach 600 kW to 1 MW. The material supply chain will act as the key constraint.

The Energy Imperative: Power and Grid Limits

Energy has emerged as the definitive constraint on the trajectory of artificial intelligence. Current projections show data center power demand increasing by as much as 175 percent by 2030. Global data center capacity stood at approximately 59 gigawatts in 2024, and experts expect it to grow to 122 gigawatts or more by the end of the decade. This surge stems from the intensification of power use per unit of floor space, which analysts forecast will rise from 162 kilowatts to 176 kilowatts per square foot by 2027.
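The capacity figures above imply a specific annual growth rate. This sketch treats "the end of the decade" as 2030 (an assumption) and computes the compound annual growth rate between the 2024 and 2030 values.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1

capacity_growth = 122 / 59 - 1                 # total increase, 2024 -> 2030
annual_rate = cagr(59, 122, 2030 - 2024)        # assumed six-year horizon
print(f"{capacity_growth:.0%} total, {annual_rate:.1%} per year")
```

The result is roughly a 107 percent total increase, or about 12.9 percent per year. This capacity figure is a different metric, with a different baseline year, from the 175 percent demand projection, so the two numbers are not expected to match.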

The Gigawatt-Scale Planning Challenge

The grid infrastructure required to support this growth currently faces an unprecedented planning challenge. Unlike incremental load growth, today’s AI loads arrive in gigawatt-scale steps. In many regions, the current grid cannot handle this level of concentrated demand. In the United States, data center energy consumption could double by 2030. Eventually, this sector could account for 12 percent of total electricity demand.

Regional Grid Stress Episodes

Regional grid stress is most visible in hubs like Northern Virginia and the Permian Basin. In Virginia, data centers already account for 25 percent of the state’s total electricity consumption. This concentration leads to transmission congestion and lengthy interconnection queues. Therefore, utilities must prioritize network upgrades over new connections. In Texas, the local grid operator predicts that data centers and crypto mining will drive 58 percent of new demand in the Permian Basin.

Managing Interconnection Shortfalls

The MISO interconnection queue provides a stark example of these pressures. Heading into 2025, the queue exceeded 300 gigawatts before several developers dropped out. As of late 2025, the queue still totals 174 gigawatts across 944 projects. MISO expects to sign only 25 gigawatts of agreements annually. This creates a backlog that delays project timelines by several years. Consequently, developers are seeking alternative regions with “power-ready” land and spare substation capacity.
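A simplified backlog model puts the MISO figures in perspective. It assumes a constant signing rate and ignores withdrawals; the 15 GW per year of new entries in the second call is a hypothetical value, not a MISO statistic.

```python
import math

def years_to_clear(queue_gw: float, signed_per_year_gw: float,
                   new_entries_per_year_gw: float = 0.0) -> float:
    """Years to work through a queue at a fixed signing rate.

    Simplified: ignores withdrawals; new_entries_per_year_gw is a hypothetical inflow.
    """
    net = signed_per_year_gw - new_entries_per_year_gw
    if net <= 0:
        return math.inf  # inflow matches or exceeds signings, so the queue never shrinks
    return queue_gw / net

print(years_to_clear(174, 25))       # no new entries
print(years_to_clear(174, 25, 15))   # hypothetical 15 GW/yr of new requests
```

With no new requests the backlog takes about seven years to clear; any steady inflow stretches that further, and if inflow matches or exceeds the signing rate the queue never shrinks.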

Regional Grid Status and Strategy through 2030

- Northern Virginia: Currently saturated with high congestion. Planners expect transmission limits by 2030. The primary response strategy involves advanced nuclear and small modular reactors (SMRs).

- Texas (ERCOT): Currently reliable but storage limited. Faces regional reliability events by 2030. The primary response strategy includes geothermal power and long-duration storage.

- Nordics: Currently possesses a surplus of renewable energy. Analysts expect secondary market growth by 2030. The primary response strategy focuses on waste heat recovery and district heat integration.

- Southeast Asia: Currently land and energy constrained. Expects high but selective growth by 2030. The primary strategy involves tropical cooling standards and green power procurement.

Stranded Potential and On-Site Generation

The timeline for utility expansion imposes a hard limit on deployment. Constructing high-voltage transmission lines typically takes between five and ten years. In contrast, builders can finish an AI data center in two years or less. This mismatch creates “stranded potential” where land remains unpowered for years. To mitigate this, developers are turning to behind-the-meter solutions. These include natural gas turbines, large-scale battery storage, and even dedicated small modular reactors.

The Role of Fuel Cells and Microgrids

Modular fuel cell systems are emerging as a critical bridge. These systems can be deployed in less than a year, compared to five years for gas turbines. They are 10 to 30 percent more efficient and operate with much lower noise levels. Companies like Bloom Energy are already supplying over 400 megawatts of generation to data centers worldwide. These microgrids allow data centers to operate completely off-grid in load-following mode while waiting for utility connections.
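The "stranded potential" described above is the gap between construction time and power lead time. This sketch uses the lead times given in the text, rounding the fuel cell figure of "less than a year" up to one year; the function name and option labels are illustrative only.

```python
def idle_years(build_years: float, power_lead_years: float) -> float:
    """Years a finished facility waits for power (zero if power arrives first)."""
    return max(0.0, power_lead_years - build_years)

BUILD_YEARS = 2.0  # AI data center construction time, per the text
# Lead times from the text; "less than a year" for fuel cells is rounded up to 1.0.
options = {
    "grid transmission (best case)": 5.0,
    "grid transmission (worst case)": 10.0,
    "on-site gas turbines": 5.0,
    "modular fuel cells": 1.0,
}
for name, lead in options.items():
    print(f"{name}: {idle_years(BUILD_YEARS, lead):.0f} stranded years")
```

Under these inputs a two-year build sits idle for three to eight years waiting on transmission, or three years waiting on turbines, while fuel cells arrive before the building is finished.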

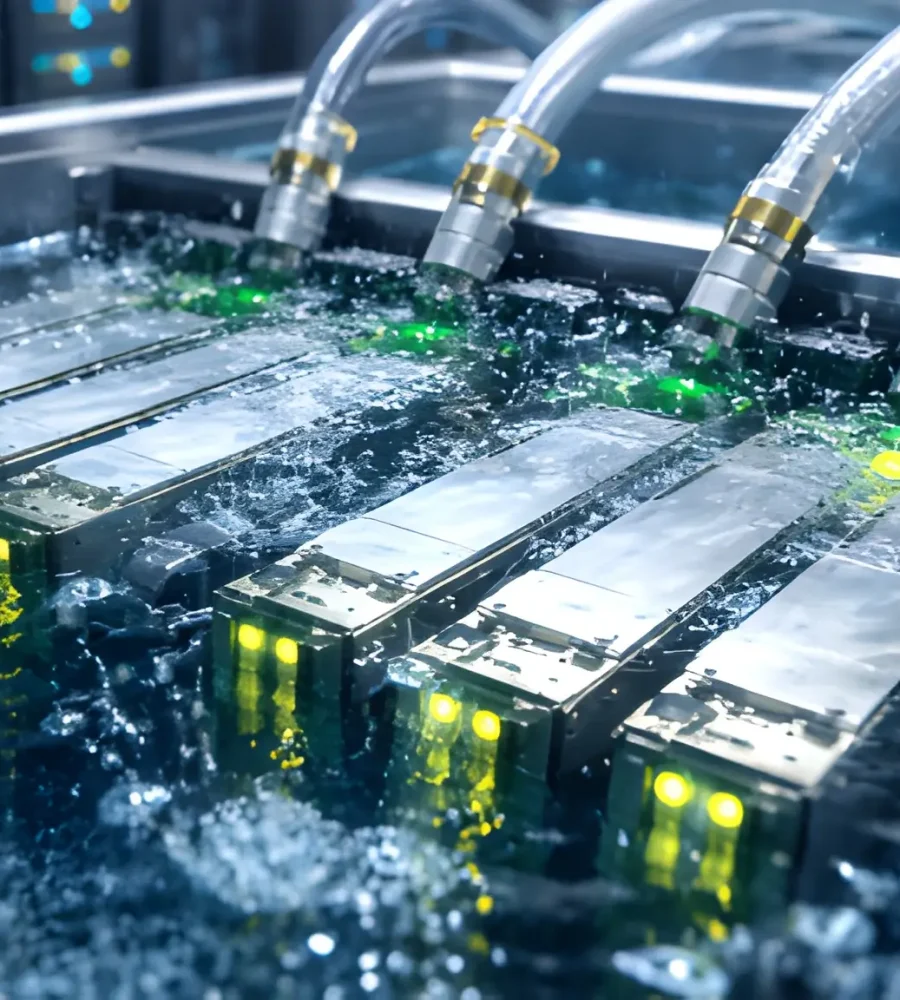

Cooling Logistics: Beyond Airflow

Thermal management has transitioned from a utility to a strategic differentiator. Chip power envelopes now exceed 1,000 watts per processor, and air cooling has reached its practical physical limit. Moving heat from a 40 kilowatt rack with air requires about 4,000 cubic feet per minute of airflow. This generates 95 decibels of noise and consumes 25 kilowatts just for fan power. The underlying problem is that, per unit volume, air carries roughly 3,500 times less heat than water.

Transitioning to Liquid Efficiency

Liquid cooling is the only viable path forward for 2030 densities. Because water's volumetric heat capacity is roughly 3,500 times that of air, a modest flow rate of 10 gallons per minute can remove 100 kilowatts of heat load. Reflecting this shift, the market for data center cooling is projected to reach 45 billion dollars by 2030, with liquid cooling accounting for nearly half of that total.
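The airflow and flow-rate figures in this section follow from the sensible-heat balance Q = ρ · c_p · V̇ · ΔT. The sketch below assumes typical room-temperature properties for air and water and an 18 K air temperature rise; those property values and temperature rises are assumptions, not figures from the text.

```python
# Sensible-heat balance: Q = rho * cp * volumetric_flow * delta_T
AIR_RHO, AIR_CP = 1.2, 1005.0        # kg/m^3, J/(kg*K); assumed room-temperature air
WATER_RHO, WATER_CP = 997.0, 4186.0  # kg/m^3, J/(kg*K); assumed water near 25 C

M3S_PER_CFM = 0.000471947   # one cubic foot per minute in m^3/s
M3S_PER_GPM = 0.0000630902  # one US gallon per minute in m^3/s

def volumetric_flow(heat_w: float, delta_t_k: float, rho: float, cp: float) -> float:
    """Flow (m^3/s) needed to carry heat_w watts with a delta_t_k temperature rise."""
    return heat_w / (rho * cp * delta_t_k)

def temperature_rise(heat_w: float, flow_m3s: float, rho: float, cp: float) -> float:
    """Temperature rise (K) when heat_w watts is carried by flow_m3s of fluid."""
    return heat_w / (rho * cp * flow_m3s)

air_cfm = volumetric_flow(40_000, 18.0, AIR_RHO, AIR_CP) / M3S_PER_CFM
water_dt = temperature_rise(100_000, 10 * M3S_PER_GPM, WATER_RHO, WATER_CP)
water_gpm_10k = volumetric_flow(100_000, 10.0, WATER_RHO, WATER_CP) / M3S_PER_GPM
ratio = (WATER_RHO * WATER_CP) / (AIR_RHO * AIR_CP)  # volumetric heat capacity ratio

print(f"40 kW by air at 18 K rise: {air_cfm:,.0f} CFM")
print(f"100 kW at 10 gpm water: {water_dt:.0f} K rise")
print(f"100 kW at 10 K water rise: {water_gpm_10k:.0f} gpm")
print(f"water/air heat capacity per volume: {ratio:,.0f}x")
```

With these inputs, 40 kW of air cooling needs roughly 3,900 CFM, and the volumetric heat capacity ratio comes out near 3,460. Removing 100 kW with 10 gallons per minute implies a coolant temperature rise of about 38 K; at a more conservative 10 K rise, the same load needs roughly 38 gallons per minute.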

Cold-Plate and Immersion Systems

Direct-to-chip systems currently hold nearly 47 percent of the market share. These systems circulate coolant through a plate mounted directly on the GPU or CPU. This captures 80 percent of the server heat load while air cooling handles the rest. In contrast, immersion cooling submerges entire servers in dielectric fluid. This method eliminates the need for fans and enables densities of 400 kilowatts to 1 megawatt per rack.
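Direct-to-chip racks still need some air. This sketch applies the 80 percent capture figure to a hypothetical 120 kW rack and sizes the airflow for the remaining heat, using assumed room-temperature air properties and an assumed 18 K air temperature rise.

```python
AIR_RHO = 1.2              # kg/m^3, assumed room-temperature air
AIR_CP = 1005.0            # J/(kg*K)
M3S_PER_CFM = 0.000471947  # one cubic foot per minute in m^3/s

def residual_air_cfm(rack_kw: float, liquid_capture: float, delta_t_k: float) -> float:
    """Airflow (CFM) still needed for the heat a cold-plate loop does not capture."""
    air_heat_w = rack_kw * 1_000 * (1 - liquid_capture)
    return air_heat_w / (AIR_RHO * AIR_CP * delta_t_k) / M3S_PER_CFM

# Hypothetical 120 kW rack, 80% of heat captured by cold plates, 18 K air rise
print(f"{residual_air_cfm(120, 0.80, 18):,.0f} CFM")
```

The remaining 24 kW still calls for roughly 2,300 CFM, which is why facilities keep some air handling even after adopting cold plates.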

Comparative Efficiency and Volume Benefits

- Direct-to-Chip: Achieves efficiency (PUE/pPUE) of 1.15 to 1.20. It enables a 2x increase in chip density. Notably, operators can retrofit this technology to existing sites.

- Single-Phase Immersion: Achieves efficiency (PUE/pPUE) of 1.05 to 1.10. It enables a 10x increase in chip density. The system eliminates fans and protects hardware from dust and humidity.

- Two-Phase Liquid: Achieves efficiency (PUE/pPUE) below 1.05. It provides maximum thermal flux. The technology uses phase-change physics and eliminates the need for pumping.
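PUE is total facility power divided by IT power, so each tenth of a point translates directly into overhead. This sketch uses the midpoints of the ranges above, treats pPUE as if it were facility PUE for simplicity, and adds a hypothetical 1.5 air-cooled baseline for comparison; the 100 MW IT load is also hypothetical.

```python
def facility_power_mw(it_mw: float, pue: float) -> float:
    """Total facility power implied by a PUE (total facility power / IT power)."""
    return it_mw * pue

IT_LOAD_MW = 100.0  # hypothetical IT load
# Midpoints of the ranges above; 1.5 is a hypothetical air-cooled baseline, and
# pPUE values are treated as facility PUE purely for illustration.
scenarios = {
    "air baseline": 1.5,
    "direct-to-chip": 1.175,
    "single-phase immersion": 1.075,
    "two-phase": 1.04,
}
for name, pue in scenarios.items():
    overhead = facility_power_mw(IT_LOAD_MW, pue) - IT_LOAD_MW
    print(f"{name}: {overhead:.1f} MW of non-IT overhead")
```

For 100 MW of IT load, moving from 1.5 to about 1.18 frees roughly 32 MW of overhead. The same function shows that a 1.2 threshold, such as the one in Germany's EnEfG, caps overhead at 20 percent of IT load.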

Sustainability and Heat Reuse

Water usage remains a significant sustainability challenge. The absolute scale of the industry could drive global consumption to 1,200 billion liters annually by 2030. To address this, operators are shifting toward closed-loop systems. These systems reject heat through air-cooled heat exchangers on the secondary facility loop, bringing water usage effectiveness (WUE) close to zero. Innovation is also focusing on heat reuse. High-density systems produce waste heat at temperatures high enough for district heating. Stockholm’s system recovers heat to warm 30,000 apartments, providing a model for circular infrastructure.
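Water usage effectiveness (WUE), a metric defined by The Green Grid, divides annual site water use by IT energy. The sketch computes it for a hypothetical 50 MW facility running at full load all year with 500,000 m³ of annual water use; all inputs are illustrative. It also shows that a benchmark expressed in m³/MWh, such as Singapore's 2.2 m³/MWh, is numerically the same in L/kWh.

```python
HOURS_PER_YEAR = 8_760

def wue_l_per_kwh(site_water_litres: float, it_energy_kwh: float) -> float:
    """Water usage effectiveness: annual site water (L) per kWh of IT energy."""
    return site_water_litres / it_energy_kwh

# Hypothetical 50 MW IT load at full utilization, 500,000 m^3 of water per year
it_kwh = 50_000 * HOURS_PER_YEAR   # kW * hours = kWh
water_l = 500_000 * 1_000          # m^3 -> litres
wue = wue_l_per_kwh(water_l, it_kwh)
# 1 m^3/MWh = 1,000 L / 1,000 kWh = 1 L/kWh, so 2.2 m^3/MWh reads as 2.2 L/kWh
print(f"WUE = {wue:.2f} L/kWh; within a 2.2 benchmark: {wue <= 2.2}")
```

These inputs give a WUE of about 1.14 L/kWh. A closed loop that rejects heat through dry coolers drives the numerator, and therefore WUE, toward zero.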

Power Distribution & Electrical Architecture

The electrical architecture of the data center is undergoing a major transformation. Legacy systems based on 415-volt or 480-volt alternating current are no longer efficient for megawatt-scale deployments. As the space devoted to power and cooling equipment (“grey space”) grows larger than the space devoted to IT equipment (“white space”), reversing the traditional ratio, operators have been forced toward compact power models. Traditional 54V in-rack distribution would require 64U of power shelves for megawatt-class racks, leaving no room for compute.

Adopting 800V HVDC Backbones

Next-generation AI factories are adopting 800-volt high-voltage direct current (HVDC) distribution. By converting grid power directly to 800-volt DC at the facility perimeter, operators eliminate multiple conversion stages, improving end-to-end efficiency by up to 5 percent. The shift to 800-volt DC also addresses material constraints: for a given load, current falls in proportion as voltage rises, so conductors can be thinner. This allows up to a 45 percent reduction in copper wire thickness.
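The copper argument rests on I = P / V. This sketch compares busbar current for a hypothetical 1 MW rack at 54 V and at 800 V DC. It is a simplified comparison between two DC voltages; the 45 percent and 157 percent figures in this section compare against 415 V AC and rest on assumptions not reproduced here.

```python
def current_amps(power_w: float, volts: float) -> float:
    """DC current for a given power and voltage (I = P / V)."""
    return power_w / volts

RACK_W = 1_000_000  # hypothetical megawatt-class rack
i_54 = current_amps(RACK_W, 54)
i_800 = current_amps(RACK_W, 800)
print(f"54 V: {i_54:,.0f} A, 800 V: {i_800:,.0f} A, ratio {i_54 / i_800:.1f}x")
# For a conductor of fixed resistance R, I^2 * R loss scales with the square of the ratio.
print(f"relative resistive loss at 54 V: {(i_54 / i_800) ** 2:.0f}x")
```

At 800 V the rack draws about 1,250 A instead of roughly 18,500 A. For a conductor of fixed resistance, resistive loss would fall by a factor of about 219, which is why thinner conductors become practical.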

Semiconductor and Material Innovation

Innovations in wide-bandgap semiconductors are enabling these high-voltage systems. Materials like Gallium Nitride (GaN) and Silicon Carbide (SiC) allow power components to switch at higher frequencies with lower losses, which shrinks magnetic components and improves overall power density. Additionally, microgrid integration is becoming essential for managing workload volatility. Integrated energy storage, treated as an active component of the 800-volt backbone, allows for load smoothing and provides a buffer against utility price spikes.

Comparison of Traditional AC vs. 800V HVDC Architectures

- Conversion Stages: Traditional 415V AC requires multiple AC/DC and DC/DC stages. 800V HVDC utilizes a streamlined single-step process, leading to lower energy loss.

- Conductor Size: Traditional AC uses heavy gauge copper. 800V HVDC allows for up to a 45 percent reduction in conductor size, lowering cost and weight.

- Power per Conductor: 800V HVDC architecture delivers 157 percent more power per conductor than 415V AC. This allows for more power within the same conduit.

- Maintenance: Traditional systems have high maintenance needs due to multiple power supply units. 800V HVDC offers up to a 70 percent reduction in maintenance, improving uptime.
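Because conversion stages sit in series, their efficiencies multiply, so removing a stage compounds. The stage lists and efficiency values below are placeholders chosen for illustration, not vendor data or measurements.

```python
from math import prod

def chain_efficiency(stages: dict) -> float:
    """End-to-end efficiency of series conversion stages (efficiencies multiply)."""
    return prod(stages.values())

# Placeholder stage efficiencies for illustration only, not vendor or measured data.
legacy_ac = {
    "MV/LV transformer": 0.985,
    "double-conversion UPS": 0.96,
    "PDU": 0.99,
    "server PSU (AC to 54 V DC)": 0.96,
}
hvdc_800 = {
    "solid-state transformer (MV AC to 800 V DC)": 0.975,
    "rack power shelf (800 V to 54 V DC)": 0.975,
}
legacy, hvdc = chain_efficiency(legacy_ac), chain_efficiency(hvdc_800)
print(f"legacy {legacy:.1%}, 800 V DC {hvdc:.1%}, gain {(hvdc - legacy) * 100:.1f} points")
```

With these placeholder values the 800 V chain comes out about five points ahead; real gains depend on the actual equipment in each stage.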

In-Rack Distribution Shifts

The distribution of power within the rack is also changing. Racks are moving away from individual power supply units in every server toward centralized rack-level power shelves. These shelves accept 800-volt DC and convert it to 48-volt or 54-volt DC for the busbar. This method ensures that the conversion from data center power is only done once per rack. The final step-down to processor voltage is handled by high-ratio 64:1 converters placed immediately adjacent to the GPU.

Supply Chain and Critical Inputs

The global supply chain for AI infrastructure faces structural limits. These bottlenecks shape pricing and availability through 2027. Specifically, these constraints are concentrated in advanced packaging, high-bandwidth memory, and electrical components.

Structural Limits in Manufacturing

TSMC provides the majority of the advanced packaging capacity for the industry. However, the company reports that its Chip-on-Wafer-on-Substrate (CoWoS) lines are sold out into 2026. Substrate tooling lead times currently run between 12 and 18 months. Similarly, high-bandwidth memory (HBM3E) is fully booked through 2026. Major cloud providers are locking in multi-year allocations, which leaves smaller buyers with limited options.

Critical Materials and Steel Squeeze

Data center infrastructure depends on critical minerals and materials. Copper is a primary constraint because it is essential for transmission lines and distribution. Microsoft’s 80 MW Chicago site used about 2,100 tonnes of copper, or 26 tonnes per megawatt. High-density facilities also require specialized components like high-capacity transformers. These components rely on grain-oriented electrical steel (GOES). Limited output of this steel has created a global bottleneck in transformer production.
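The Chicago figures imply a copper intensity that can be scaled to larger campuses. This sketch assumes copper use scales linearly with capacity, which ignores differences in design, voltage, and layout; the 1 GW campus is hypothetical.

```python
def copper_tonnes(capacity_mw: float, tonnes_per_mw: float) -> float:
    """Copper requirement under a simple linear scaling assumption."""
    return capacity_mw * tonnes_per_mw

intensity = 2_100 / 80                     # tonnes per MW at the 80 MW Chicago site
campus_1gw = copper_tonnes(1_000, intensity)  # hypothetical 1 GW campus
print(f"{intensity:.2f} t/MW -> {campus_1gw:,.0f} t for a 1 GW campus")
```

At about 26.25 tonnes per megawatt, a 1 GW campus would need on the order of 26,000 tonnes of copper.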

Critical Supply Chain Inputs and Strategic Outlook for 2030

- Semiconductor Advanced Packaging: The primary risk is CoWoS capacity acting as a fabrication ceiling. The outlook involves diversifying partnerships with OSAT partners.

- Memory (HBM3E / HBM4): The primary risk is the scalability of bit demand. High contract pricing will likely persist through at least 2026.

- Electrical (GOES Steel / Copper): The primary risk involves transformer production lead times. Global demand is projected to increase by 5 to 10 percent.

- Cooling Fluids: The primary risk involves regulatory bans on PFAS. The industry outlook focuses on the transition to synthetic dielectric oils.

- Magnets (Rare Earths): The primary risk is the geopolitical concentration of elements like Neodymium. The 2030 outlook focuses on recycling and source diversification.

Rare Earth Elements and Magnets

Rare earth elements play a foundational role in digital infrastructure. Neodymium and dysprosium are essential for high-density permanent magnets in hard drives and cooling fans. A mid-scale facility requires less than 100 tonnes of rare earth oxides annually. However, China controls 85 to 90 percent of the processing capacity. This concentration creates a single-point-of-failure risk for the global supply chain. Operators are now exploring recycling programs for magnets to improve resilience.

Geographic Strategy: AI Megafacilities

Resource availability now dictates the geography of high-density AI infrastructure. Power and water access take precedence over proximity to end-users. As traditional hubs reach saturation, operators are seeking “emerging” and “secondary” markets.

Shifting from Tier 1 Hubs

Northern Virginia remains the global anchor for capacity. However, transmission congestion is restricting its growth, which has pushed major tech companies to invest in regions like Texas and the Pacific Northwest. Texas in particular leverages its independent ERCOT grid to attract gigawatt-scale campuses. The Permian Basin serves as a notable example of this shift: infrastructure originally built for oil extraction now supports data centers.

The Nordic Model and Sovereign AI

In Europe, the Nordic countries are emerging as a primary destination. Sweden and Norway offer large areas of land and cheap renewable electricity from hydro and wind. Crucially, the integration of data centers into district heating systems provides a model for high-efficiency infrastructure. Simultaneously, governments are treating AI infrastructure as a national priority. This leads to the development of indigenous capacity in regions like the Asia-Pacific.

Global Geographic Tiers and Strategic Drivers

- Mature Tier 1 (e.g., Northern Virginia, Amsterdam): Driven by existing fiber and ecosystem density. Primary risks include power congestion and high operational costs.

- Energy Hubs (e.g., Texas, Permian Basin): Driven by abundant land and deregulated grid access. Primary risks include reliability events and water scarcity.

- Sustainability Leaders (e.g., Sweden, Norway, Finland): Driven by zero-carbon grids and waste heat reuse. Primary risks involve latency and regulatory complexity.

- Strategic Hubs (e.g., Singapore, Jurong Island): Driven by regional sovereignty and connectivity. Primary risks involve extreme land and power constraints.

Expansion into Emerging Markets

Geopolitical concerns over compute sovereignty are also driving infrastructure into Latin America. Chile and Brazil are becoming important expansion destinations due to their renewable resources. Chile positions itself as a “green digital leader,” aiming for eight times today’s level of demand by 2032. However, these regions face challenges such as community backlash over water usage. Google’s proposal in Chile drew backlash over its planned use of 7.6 million liters of water per day in water-scarce areas.

Business Models & Investment Pathways

Successful commercial strategies for 2030 must navigate massive capital requirements. Total investment in data centers will likely reach 1 trillion dollars annually by the end of the decade. Alphabet, Microsoft, Amazon, and Meta invested nearly 200 billion dollars in CapEx in 2024 alone.

The Rise of Specialized Neoclouds

A new category of cloud provider has emerged to address specific AI needs. These neoclouds specialize in GPU-as-a-Service. They provide access to high-performance accelerators at competitive prices. Pricing can be as low as one-third of the rates charged by hyperscalers. Neocloud revenue will likely reach 180 billion dollars by 2030, growing at 69 percent per year. Firms like CoreWeave and Nebius often complement hyperscalers by providing ready-to-go capacity during demand surges.

Nuanced Financing Models

Financing these projects requires a departure from traditional rulebooks. Unlike static infrastructure, AI hardware must be replaced every three to five years. This reality has led to a split in financing. Project finance funds long-term grid infrastructure and sustainable water systems. In contrast, asset-based lending and private equity fund high-velocity GPU replacement cycles.
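The split in financing follows from the mismatch in asset lives. This straight-line depreciation sketch uses hypothetical amounts: 1 billion dollars of GPUs on a four-year cycle (within the three-to-five-year range above) and a 500 million dollar facility shell over an assumed 20 years.

```python
def straight_line(cost: float, life_years: int) -> float:
    """Annual straight-line depreciation with zero salvage value."""
    return cost / life_years

# Hypothetical figures: $1.0B of GPUs over 4 years, $0.5B facility shell over 20 years
gpu_annual = straight_line(1.0e9, 4)
shell_annual = straight_line(0.5e9, 20)
print(f"GPUs: ${gpu_annual / 1e6:,.0f}M/yr, facility: ${shell_annual / 1e6:,.0f}M/yr")
```

The GPUs lose 250 million dollars of book value per year against 25 million for the shell, a tenfold difference even though the GPU outlay is only twice as large.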

AI Infrastructure Business Models and Investment Strategies

- Hyperscaler (Self-Build): Targets internal SaaS and broad cloud services. Utilizes balance sheet funding to maintain control over the full stack and scale.

- Neocloud (GPUaaS): Targets AI startups and specialized enterprises. Uses asset-backed lending and venture capital to provide low-cost, specialized infrastructure.

- Sovereign Cloud: Targets government and local industry. Utilizes public-private partnerships to ensure data sovereignty and national security.

- Colocation Provider: Targets a diverse range of enterprise and AI clients. Uses project finance and REIT structures to provide flexibility and power access.

Public-Private Partnerships (PPPs)

PPPs are becoming a crucial model for grid and cooling infrastructure. The scale of investment often exceeds the capability of the private sector alone. Governments provide policy formulation and institutional support while the private sector focuses on implementation. This blended-finance approach is particularly prevalent in regions where private capital cannot meet the necessary investment scale.

Policy, Regulation, & Sustainability

The regulatory environment for AI infrastructure is evolving toward specific mandates. Policymakers are using data center permits as a lever to achieve national goals.

Codifying Environmental Performance

Carbon targets and renewable portfolio standards are the primary drivers of reform. To balance these needs, states are reevaluating grid planning. They are now incentivizing the development of advanced nuclear and geothermal energy. These sources provide the consistent “clean firm” energy required by AI without the intermittency of solar or wind.

Water Stewardship and Disclosure

Water usage has become a flashpoint for regulatory tension. In Arizona, conditional permits for new data centers now hinge on third-party hydrology studies. Microsoft and Amazon have made water-positive pledges to return more water to basins than they consume. Similarly, Singapore has set a median water usage efficiency benchmark of 2.2 cubic meters per megawatt-hour. This forces operators to adopt more efficient cooling technologies.

Key Regulatory Standards and Impacts on AI Infrastructure

- Energy Efficiency (e.g., German EnEfG Act): Requires a PUE of 1.2 or lower for new sites. This results in the mandatory implementation of waste heat reuse systems.

- Chemical Safety (e.g., EU PFAS Ban): Targets the phase-out of harmful cooling fluids. This necessitates a redesign of two-phase cooling architectures.

- Water Stewardship (e.g., Singapore SS 715:2025): Mandates tropical temperature operation up to 35°C to reduce overall cooling energy demand.

- Hardware Standardization (e.g., OCP Open Rack V3): Requires modular and interchangeable parts. This improves vendor interoperability and reduces lock-in.

- Resource Management (e.g., EU Critical Raw Materials Act): Sets a 25 percent recycling target for minerals. This focus helps diversify the material supply chain.

Hardware Standardization and Interoperability

Standardization is playing a critical role in the regulatory landscape. The Open Compute Project (OCP) creates efficient, open-source designs for high-density racks. The OCP Open Rack V3 specification defines the interfaces needed for direct liquid cooling and 48-volt DC power shelves. This standardization reduces costs for operators and prevents vendor lock-in with proprietary cooling manifolds.

The Strategic Roadmap to 2030

The transition to high-density AI infrastructure involves a fundamental reimagining of the data center. Success through 2030 will depend on integrating advanced cooling with high-voltage power distribution. The winners of the next decade will be those that align their digital ambitions with physical realities.

Key Inflection Points

The strategic timeline begins in 2025 with the peaking of packaging bottlenecks. Widespread adoption of 800-volt architectures will likely follow in 2027 to coincide with Nvidia Kyber systems. By 2028, the industry will face a grid interconnection cliff in Tier 1 markets. Finally, by 2030, environmental regulations regarding PFAS and water will become the primary filters for facility siting.

Roadmap to 2030: Summary

- Technical: Transition to liquid cooling (direct-to-chip/immersion) and 800V HVDC power delivery as the global standard.

- Economic: Adopt hybrid financing models that account for short hardware lifecycles and long facility lifecycles.

- Geopolitical: Diversify site selection to regions with renewable surplus and secure mineral supply chains.

- Sustainability: Achieve water-positive and carbon-neutral targets through closed-loop systems and heat reuse.

High-density AI infrastructure is now the physical foundation of the global digital economy. Aligning the incentives of investors, planners, and policymakers is the only way to ensure that the infrastructure of the future can meet the demands of the intelligence age.