The honeymoon phase of generative AI is ending. For the past two years, the world has been captivated by large language models that can write poetry or summarize emails. Now, as we move deeper into 2026, the industry is shifting toward something far more consequential: Agentic AI.

Instead of chatbots that wait for prompts, we are building agents that act autonomously. These systems plan workflows, negotiate with software, and execute real-world tasks. However, a physical constraint stands in the way: a cloud-first architecture cannot support true agency. To move from AI that talks to AI that acts, compute must shift from distant centralized data centers to the localized edge.

The End of the Chatbot Era

Until now, we have treated AI like a digital oracle living far away. You send a question to a massive data center in Northern Virginia or Dublin. After a short delay, an answer arrives. This hub-and-spoke model worked well for chat-based systems because humans tolerate conversational lag.

Agentic AI operates under different rules. An agent does not just speak; it acts. Consider an autonomous supply-chain agent rerouting delivery drones after a sudden change in the weather, or a retail agent managing inventory and security in real time. If these agents must check in with a centralized cloud for every micro-decision, latency does more than slow them down. It destroys their ability to act independently.

The core thesis is simple. For AI to evolve from assistant to executor, compute must function like a local utility, not a distant service.

Latency as the Enemy of Agency

In autonomous systems, the perceive-reason-act loop defines performance. An agent must ingest data, decide what to do, and then execute, again and again.

When this loop runs through a centralized cloud, physics becomes the bottleneck. Light in optical fiber travels at only about two-thirds of its speed in a vacuum, and every hop along the route adds further delay. A 200-millisecond round trip feels trivial to a human reader. For an agent performing multi-step reasoning, those delays quickly accumulate.
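To put a number on that physical limit, here is a minimal sketch that computes the smallest round-trip time that propagation alone allows over a fiber path. It assumes a refractive index of about 1.468, a typical value for single-mode fiber, which gives a signal speed of roughly 204,000 km/s. The two path lengths are hypothetical and chosen only for illustration. Real round trips add routing, queuing, and model inference on top of this floor.

```python
# Speed of light in a vacuum, in kilometres per second.
SPEED_OF_LIGHT_KM_S = 299_792.458
# Assumed refractive index of standard single-mode fiber (a typical value, not from the article).
FIBER_REFRACTIVE_INDEX = 1.468


def min_fiber_rtt_ms(path_km: float) -> float:
    """Lower bound on round-trip time, in milliseconds, over a fiber path of the given length.

    Only propagation delay is counted. Routing, queuing, serialization,
    and model inference all add to this in practice.
    """
    speed_km_s = SPEED_OF_LIGHT_KM_S / FIBER_REFRACTIVE_INDEX
    # Out and back, converted from seconds to milliseconds.
    return 2 * path_km / speed_km_s * 1000


if __name__ == "__main__":
    # Hypothetical path lengths, for illustration only.
    for label, km in [("regional edge site", 50), ("distant cloud region", 6_000)]:
        print(f"{label:>22}: >= {min_fiber_rtt_ms(km):.2f} ms")
```

Because the floor grows linearly with distance, shortening the path is the only way to lower it. No faster hardware at the far end can recover that time.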

Now consider an agent that requires ten sequential steps to solve a problem. If each step triggers a 200-millisecond cloud round trip, the network alone adds two full seconds before any model computation is counted. In 2026, responsiveness defines user experience, and latency undermines agency.

Moving inference closer to the user can bring round-trip times below 10 milliseconds, which cuts the network overhead of that same ten-step task to under 100 milliseconds. The difference is profound: systems stop feeling clunky and start feeling intuitive.
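The arithmetic above can be sketched as a back-of-the-envelope model of a sequential perceive-reason-act loop. The 200 ms and 10 ms round trips come from the text. The `Deployment` type and the 50 ms per-step inference time are hypothetical assumptions, held equal in both cases so that only the network term differs.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    """Hypothetical latency profile for where an agent's inference runs."""
    name: str
    network_rtt_ms: float  # network round trip paid on every step
    inference_ms: float    # model compute time per step (assumed, equal across deployments)


def task_latency_ms(deployment: Deployment, steps: int) -> float:
    """Total wall-clock time for a task made of strictly sequential steps.

    Each step waits for the previous one to finish, so per-step costs
    add up linearly instead of overlapping.
    """
    if steps < 0:
        raise ValueError("steps must be non-negative")
    per_step = deployment.network_rtt_ms + deployment.inference_ms
    return steps * per_step


if __name__ == "__main__":
    steps = 10
    # Round-trip figures from the article; the 50 ms inference time is an assumption.
    cloud = Deployment("centralized cloud", network_rtt_ms=200, inference_ms=50)
    edge = Deployment("inference zone", network_rtt_ms=10, inference_ms=50)
    for d in (cloud, edge):
        print(f"{d.name:>17}: {task_latency_ms(d, steps):,.0f} ms for {steps} steps")
```

Under these assumptions the cloud path takes 2,500 ms and the edge path takes 600 ms. The gap widens linearly as the number of sequential steps grows.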

The Rise of the Inference Zone

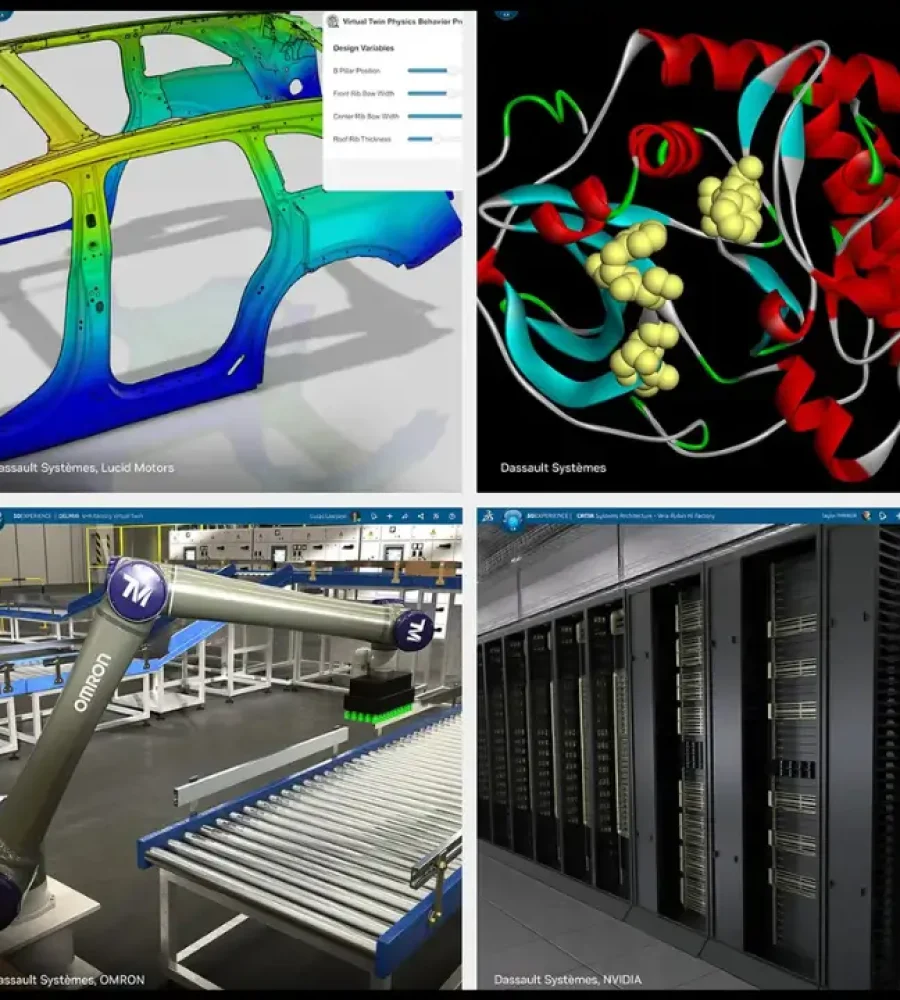

To address this challenge, the industry is adopting Inference Zones: facilities built to run trained models near their users rather than to train them. Training still demands massive centralized campuses. Running agents requires something closer to a nervous system.

As a result, investment is shifting toward data centers in Tier-2 and Tier-3 markets. These facilities are smaller and denser, and they sit in regional hubs. They act as regional brains that process data close to where it is generated.

Speed is not the only benefit. Resource efficiency matters just as much. Streaming terabytes of raw sensor data or high-resolution video to a central cloud strains bandwidth and raises costs. Processing that data inside a localized Inference Zone and sending only the results upstream makes large-scale agent networks viable.
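A rough sketch shows why filtering locally changes the economics. Every input here is a hypothetical assumption: a fleet of 100 cameras streaming at 8 Mbit/s each, and local inference that emits 10,000 detection events per camera per day at about 1 KB per event. The point is the order of magnitude, not the exact figures.

```python
SECONDS_PER_DAY = 86_400


def bytes_per_day(bitrate_mbps: float) -> float:
    """Bytes produced per day by a continuous stream at the given megabits per second."""
    return bitrate_mbps * 1_000_000 / 8 * SECONDS_PER_DAY


def reduction_factor(raw_mbps: float, events_per_day: int, bytes_per_event: int) -> float:
    """How many times less data goes upstream when only inference results are sent."""
    return bytes_per_day(raw_mbps) / (events_per_day * bytes_per_event)


if __name__ == "__main__":
    # Hypothetical fleet and workload, for illustration only.
    cameras, bitrate_mbps = 100, 8
    events_per_day, bytes_per_event = 10_000, 1_000

    raw = cameras * bytes_per_day(bitrate_mbps)
    results = cameras * events_per_day * bytes_per_event
    print(f"raw video upstream: {raw / 1e12:.2f} TB/day")
    print(f"results upstream:   {results / 1e9:.2f} GB/day")
    print(f"reduction factor:   {reduction_factor(bitrate_mbps, events_per_day, bytes_per_event):,.0f}x")
```

With these assumptions, raw streaming would push about 8.64 TB per day upstream, while the results amount to about 1 GB. That is a reduction of several thousand times.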

Europe’s Unexpected Advantage

Ironically, the region once seen as lagging may emerge as the leader. For years, critics pointed to Europe’s fragmented data center landscape when comparing it to the massive hubs of the United States.

In the age of Agentic AI, that fragmentation becomes an advantage. Europe already operates a dense network of regional facilities, from Frankfurt and Warsaw to Marseille and Milan. This structure forms the physical mesh required for localized compute.

Regulation reinforces this advantage. Data protection and AI rules such as the GDPR and the EU AI Act have pushed companies to build sovereign clouds. When an AI agent processes sensitive medical or financial data in Munich, that data can stay local. Europe is already decentralized by design, which makes it an ideal testing ground for a distributed AI backbone.

AI as a Utility Business

Economic data points the same way. Synergy Research Group reports that cloud spending reached $419 billion in 2025, but the headline figure matters less than where the money is going.

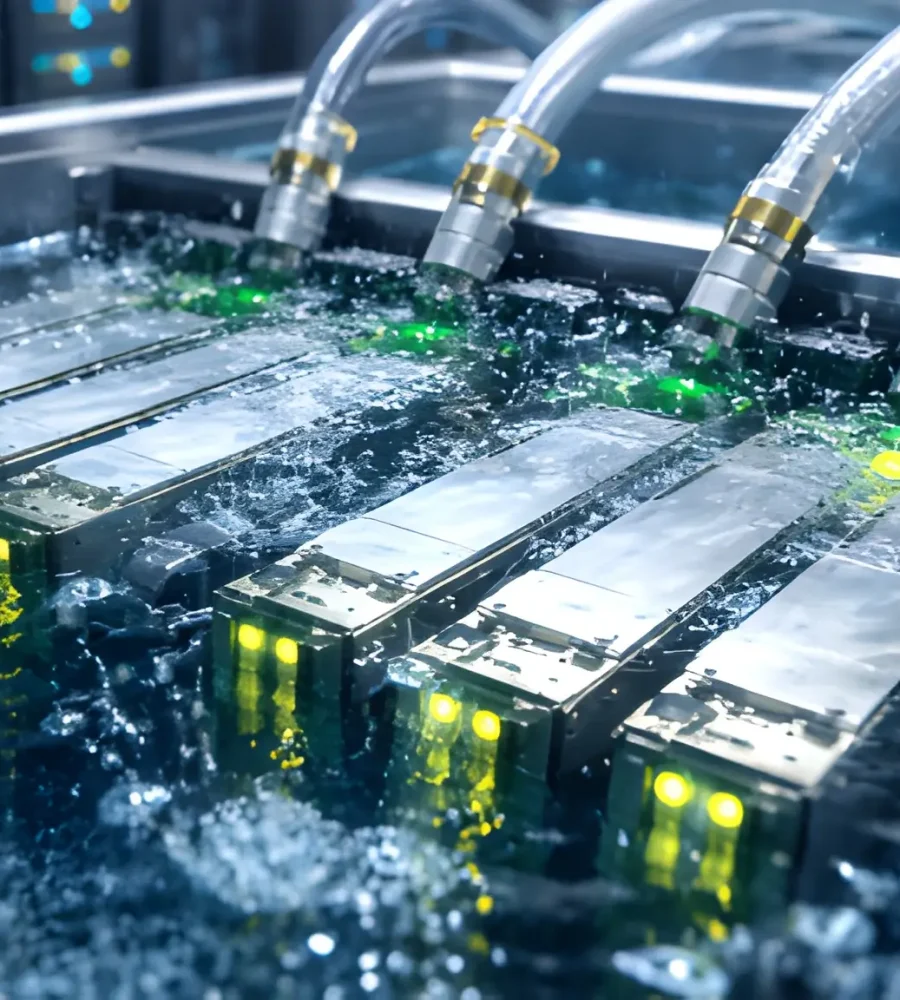

Hyperscalers like Amazon, Google, and Microsoft are no longer focused only on scale. They are becoming utility providers. Investment now flows into micro-centers and localized energy solutions. In the United States, some data centers are even being paired with nearby nuclear power plants.

The projected $600 billion in capital expenditure for 2026 reflects this reality. The money is not just for chips. It funds land, power, and infrastructure that place compute as close to users as possible.

Building a Live Web

As 2026 unfolds, the map of the internet is changing. We are leaving behind a world where we visit the web for one where autonomous agents live alongside us.

Localized compute represents more than a technical upgrade. It reshapes how intelligence spreads across the planet. If compute is the oxygen of AI, localized inference provides the lungs. It enables systems to breathe, respond, and act in the physical world.

The cloud is not disappearing. It is descending. It is becoming a fog: distributed, localized, and highly responsive. This fog will power the everyday actions of Agentic AI and finally allow it to move from theory into lived reality.