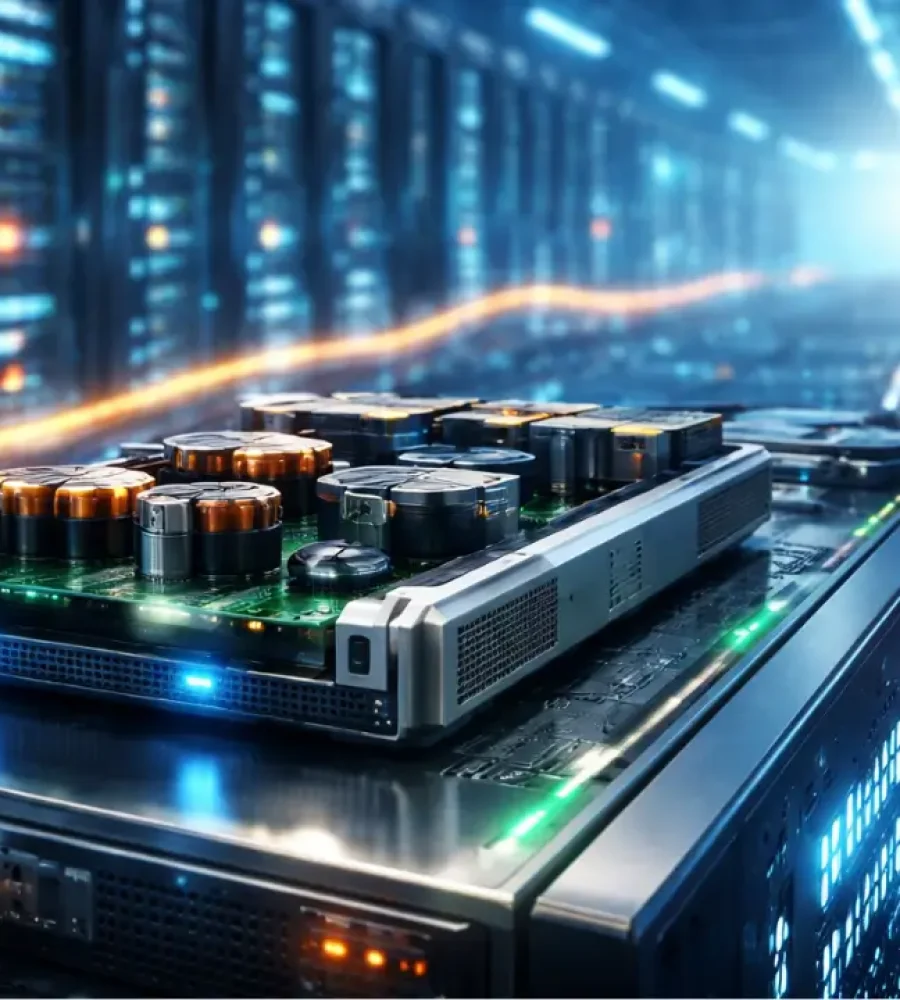

The era when central processing units stood at the unquestioned center of data center growth has passed. Today, infrastructure expansion is fueled by the rapid adoption of artificial intelligence, and accelerators sit at the heart of that demand. Graphics processing units, custom AI ASICs, and other specialized silicon have evolved from performance enhancers into foundational elements of modern compute infrastructure. According to a Dell’Oro Group forecast, the global data center accelerator market is projected to reach approximately $446 billion by 2029, with accelerators accounting for about one-third of total data center capital expenditures.

This structural shift has reshaped where organizations invest, how data centers are designed, and which capabilities define competitive advantage. As AI workloads expand across industries, data centers are being rearchitected to maximize throughput and efficiency from accelerator-driven compute. Understanding this transformation is critical for infrastructure leaders, architects, and investors preparing for the next phase of growth.

How Accelerators Changed the Game

Accelerators have gained prominence in AI workloads because of clear technical advantages. Unlike general-purpose CPUs, GPUs and AI ASICs are designed for massive parallelism, which is essential for training and running large language models. They execute many operations simultaneously and deliver performance per watt that general-purpose processors cannot achieve at scale. Market data reflects this advantage. Dell’Oro reported a 40 percent year-over-year increase in server and storage component revenue in the third quarter of 2025, with growth driven largely by AI accelerator demand and related technologies such as high-bandwidth memory.

Hyperscale cloud providers now deploy accelerators at extraordinary scale, sometimes installing tens of thousands of GPUs or custom ASICs to support foundation models and enterprise AI applications. Their investment in proprietary silicon signals a lasting change. Accelerators are no longer optional enhancements but core infrastructure components. This shift reflects a broader recognition that CPU-centric architectures cannot scale AI workloads economically or technically.

Beyond GPUs: A Broadening Accelerator Landscape

Although GPUs remain dominant, the accelerator ecosystem now includes custom silicon designs, neural processing units, and other domain-specific processors. Companies outside the traditional GPU market are entering the space. For example, Qualcomm has introduced rack-scale inference accelerators aimed at data center workloads, expanding the competitive landscape.

This growing diversity reflects a key trend: organizations are choosing accelerators based on workload requirements rather than brand. Training large generative AI models demands different capabilities than high-volume, low-latency inference. As a result, custom ASICs and NPUs optimized for specific tasks are gaining traction. A wider range of hardware options promotes innovation in performance, efficiency, and cost.

At the same time, rapid expansion presents risks. Some analysts caution that certain regions could face underutilized capacity if infrastructure deployment outpaces actual demand. This concern underscores the need for careful planning and accurate workload forecasting when investing in accelerator-heavy environments.

System Integration Matters as Much as Chip Design

Accelerators deliver value only when integrated effectively with memory, networking, and software systems. Technologies such as high-bandwidth memory are expanding alongside accelerators because they supply data at the speed required to keep compute units fully utilized.
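The link between memory bandwidth and utilization can be made concrete with a simple roofline-style bound, in which attainable throughput is the smaller of peak compute and memory bandwidth multiplied by the workload's arithmetic intensity. The sketch below is illustrative only: the function names and every number in it are hypothetical assumptions and do not describe any real device.

```python
def attainable_tflops(peak_tflops: float, mem_bw_tb_per_s: float,
                      flops_per_byte: float) -> float:
    """Roofline-style bound: min(compute peak, bandwidth * arithmetic intensity).

    TB/s multiplied by FLOP/byte gives TFLOP/s, so the units line up.
    """
    return min(peak_tflops, mem_bw_tb_per_s * flops_per_byte)


def utilization(peak_tflops: float, mem_bw_tb_per_s: float,
                flops_per_byte: float) -> float:
    """Fraction of peak compute the memory system can keep busy."""
    return attainable_tflops(peak_tflops, mem_bw_tb_per_s, flops_per_byte) / peak_tflops


# Hypothetical accelerator: 1000 TFLOP/s peak, workload at 100 FLOP per byte.
PEAK = 1000.0
INTENSITY = 100.0

for bandwidth in (2.0, 4.0):  # hypothetical memory bandwidths in TB/s
    share = utilization(PEAK, bandwidth, INTENSITY)
    print(f"{bandwidth} TB/s -> {share:.0%} of peak compute usable")
```

Under these assumed figures, doubling memory bandwidth doubles the share of peak compute the workload can use, which is the sense in which faster memory keeps compute units busy.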

Interconnect fabrics and high-speed networking are equally critical. As accelerator clusters scale, the ability to move data efficiently between nodes becomes a primary determinant of performance. Revenue growth in Ethernet back-end networks and switching markets reflects how AI clusters are pushing networking infrastructure to new limits.

Together, these developments highlight a shift toward holistic compute architectures. Performance is no longer defined by individual chips but by how well system components operate as an integrated whole.

Accelerator Economics: Capital Expenditures, Efficiency, and Cost Pressures

The move toward accelerator-driven infrastructure has significant financial implications. Capital spending on AI infrastructure has surged as organizations invest in dense compute clusters and advanced memory systems. Rising prices for components such as DRAM and high-bandwidth memory have increased overall spending even when unit growth has been moderate, complicating capital expenditure forecasts for major cloud providers. Analysts note that a substantial portion of recent increases in large technology company infrastructure spending stems from memory price inflation rather than pure hardware expansion.
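The way price and volume combine can be shown with a short calculation. Because spending is price multiplied by units, the growth rates compound rather than add. The figures below are hypothetical and chosen only to illustrate the arithmetic; they are not taken from any analyst report.

```python
def spend_growth(price_growth: float, unit_growth: float) -> float:
    """Spending = price * units, so growth factors multiply."""
    return (1.0 + price_growth) * (1.0 + unit_growth) - 1.0


# Hypothetical year: component prices up 30 percent, units shipped up 5 percent.
total = spend_growth(price_growth=0.30, unit_growth=0.05)
volume_only = spend_growth(price_growth=0.0, unit_growth=0.05)

print(f"Total spending growth: {total:.1%}")
print(f"Growth if prices had stayed flat: {volume_only:.1%}")
```

In this assumed scenario most of the increase in spending comes from pricing rather than from additional hardware, which is the pattern that complicates capital expenditure forecasts.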

This economic environment reinforces the importance of operational efficiency. Generating strong returns on AI infrastructure requires improvements not only in compute performance but also in energy efficiency, cooling, and software optimization. In response, hyperscalers are investing in custom silicon, liquid cooling technologies, and data center designs that reduce total cost of ownership over time.

Software Abstraction and Orchestration: The Next Frontier

Greater hardware diversity introduces operational complexity. As clusters combine GPUs, custom ASICs, and NPUs from multiple vendors, software layers must manage this heterogeneity effectively. Emerging orchestration platforms are becoming essential for allocating workloads based on performance requirements, cost considerations, and energy consumption.

Without intelligent orchestration, heterogeneous environments risk inefficiency and underutilization. In response, the industry is developing platforms that dynamically distribute workloads across different accelerator types, enabling interoperability and portability across environments. Over time, the strength of these software ecosystems may play a decisive role in determining which hardware platforms succeed.
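A minimal sketch of the placement logic such a platform might apply is shown below. It is an illustration under stated assumptions rather than a description of any real orchestrator: the `Pool` and `Workload` types, the scoring weights, and all figures are hypothetical. Each workload is assigned to the pool that meets its performance floor and has the lowest combined cost and energy per unit of work.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Pool:
    """A group of identical accelerators (all fields are hypothetical)."""
    name: str
    throughput: float     # work units per device-hour
    cost_per_hour: float  # currency units per device-hour
    watts: float          # average power draw per device
    free_devices: int


@dataclass
class Workload:
    name: str
    min_throughput: float  # performance floor per device
    devices: int           # number of devices requested


def score(pool: Pool, cost_weight: float = 1.0, energy_weight: float = 0.01) -> float:
    """Lower is better: weighted cost and energy per unit of work."""
    cost_per_work = pool.cost_per_hour / pool.throughput
    energy_per_work = pool.watts / pool.throughput
    return cost_weight * cost_per_work + energy_weight * energy_per_work


def place(workload: Workload, pools: List[Pool]) -> Optional[str]:
    """Greedy placement: pick the cheapest eligible pool, or None if none fits."""
    eligible = [
        p for p in pools
        if p.throughput >= workload.min_throughput
        and p.free_devices >= workload.devices
    ]
    if not eligible:
        return None
    best = min(eligible, key=score)
    best.free_devices -= workload.devices  # reserve the capacity
    return best.name


def example_pools() -> List[Pool]:
    """Hypothetical mixed cluster used for the demonstration below."""
    return [
        Pool("gpu", throughput=100.0, cost_per_hour=4.0, watts=700.0, free_devices=8),
        Pool("asic", throughput=80.0, cost_per_hour=2.0, watts=400.0, free_devices=4),
        Pool("npu", throughput=20.0, cost_per_hour=0.3, watts=60.0, free_devices=16),
    ]


pools = example_pools()
for job in (
    Workload("training", min_throughput=90.0, devices=8),
    Workload("inference", min_throughput=10.0, devices=4),
    Workload("batch", min_throughput=50.0, devices=4),
):
    print(job.name, "->", place(job, pools))
```

A production scheduler would also account for interconnect topology, preemption, and data locality. The greedy rule here is intended only to show how performance, cost, and energy can be folded into a single placement decision.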

What Comes After the Accelerator Boom

As investment in AI accelerators stabilizes in the coming years, the industry is likely to shift from an intense hardware expansion phase to one focused on balanced architecture. Organizations will design clusters that combine multiple accelerator types, each optimized for distinct workload categories, while relying on software orchestration to maximize utilization.

This approach will encourage the creation of specialized compute zones within data centers for large-scale model training, real-time inference, and high-performance computing. Orchestration layers will assign workloads to the most appropriate resource pools in real time, allowing infrastructure to respond dynamically to changing demand.

This evolution promises improved efficiency and reduced idle capacity. It also signals a transition from prioritizing hardware volume to engineering systems that optimize AI performance, cost management, and sustainability.

Rethinking Infrastructure Strategy

Accelerators now stand at the center of data center growth, reshaping investment strategies, architectural decisions, and operational models. They influence compute capacity planning, cost structures, and system design. As the accelerator-driven expansion matures, attention will shift toward integrated architectures that balance performance, flexibility, and efficiency.

For infrastructure leaders and investors, the implication is straightforward. Long-term success depends less on acquiring additional hardware than on building adaptable systems in which compute, memory, networking, and software orchestration operate in concert. The data center of the future must be designed for complexity as well as scale to support the next wave of AI innovation.