Artificial intelligence does not sleep, and neither does the electricity that feeds it. AI clusters train models through the night, respond to inference calls within milliseconds, and sustain computation cycles that rival the energy demand of industrial facilities. As racks hum under relentless load, energy sourcing transforms from a procurement function into a structural design challenge. Many facilities still claim sustainability through annual renewable matching, yet their servers often run on fossil-heavy grids during critical hours. This mismatch exposes a credibility gap that grows as scrutiny over climate disclosures intensifies. Closing that gap means aligning energy supply with compute demand hour by hour rather than relying on abstract offsets, which is why a 24/7 carbon-free power strategy is becoming essential for the next generation of AI infrastructure.

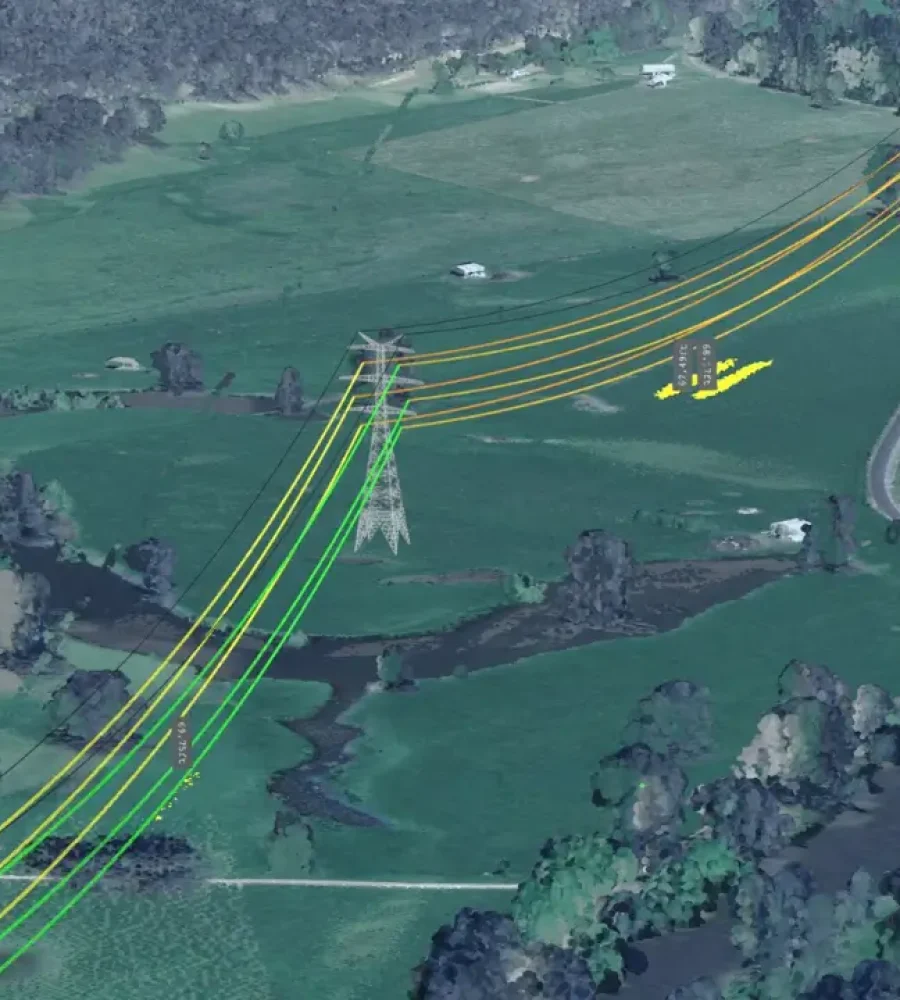

Electricity consumption from data centers continues to rise as model sizes expand and inference scales globally across industries. Grid operators now observe that hyperscale facilities can influence regional load curves during peak demand windows. Meanwhile, decarbonization pathways require deeper integration of renewables without destabilizing system frequency or reliability. These parallel pressures create a technical paradox: AI needs constant power, yet renewable generation fluctuates with weather and daylight. Solving that paradox requires architectural thinking across procurement contracts, grid interconnection, storage design, and workload orchestration. Achieving continuous clean power therefore demands coordination between energy markets and computational systems rather than symbolic accounting measures.

Beyond Renewable Credits: Redefining What “Carbon-Free” Really Means

Annual renewable energy certificates once served as a convenient mechanism to signal environmental responsibility. Companies could match total yearly consumption with an equivalent volume of renewable generation purchased elsewhere on the grid. However, this accounting approach overlooks the hourly mismatch between consumption peaks and renewable availability. When AI clusters draw maximum load during evening hours, solar-heavy portfolios cannot supply sufficient clean electricity without storage or complementary generation. As a result, fossil-fueled plants often fill the gap while sustainability reports still show fully matched, “100% renewable” consumption on paper. This divergence has prompted a shift toward hourly carbon matching as a more rigorous benchmark of operational integrity.

Hourly alignment reframes the discussion from offsets to synchronization. Facilities that track carbon intensity signals across each hour can identify when grid power relies heavily on coal or gas generation. Such transparency encourages procurement strategies that prioritize clean energy delivery during high-emission intervals rather than averaging results across twelve months. Moreover, time-stamped energy attribute certificates now enable granular tracking of renewable output in relation to actual load. This evolution strengthens credibility because it reflects the real environmental footprint of computational activity. In turn, stakeholders gain clearer visibility into whether clean energy truly powers AI workloads at every moment of operation.

Moving beyond credits also demands new measurement frameworks that integrate grid emissions data, renewable production curves, and facility consumption patterns. Digital dashboards can visualize hourly supply-demand overlaps, revealing structural deficits that annual metrics conceal. These insights often show that carbon neutrality on paper masks operational exposure to carbon-intensive generation at night or during wind lulls. Therefore, energy strategy must address physical delivery constraints rather than abstract certificate balances. Advanced modeling tools can simulate how renewable additions shift hourly emissions intensity over time. Through this lens, carbon-free claims transform into engineering objectives that demand continuous optimization.
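
To make the gap between annual and hourly accounting concrete, the following minimal sketch compares the two metrics on a single day. The 24-hour load and solar profiles are hypothetical illustration values, and the function names are assumptions rather than part of any standard tool.

```python
def annual_style_match(load, clean):
    """Volume matching: total clean generation over total load, capped at 100%."""
    return min(sum(clean) / sum(load), 1.0)


def hourly_cfe_score(load, clean):
    """Hourly matching: clean energy only counts up to the load in the same hour."""
    matched = sum(min(l, c) for l, c in zip(load, clean))
    return matched / sum(load)


# Hypothetical day in MWh per hour: flat 10 MWh load, solar-only supply.
load = [10.0] * 24
clean = [0.0] * 6 + [20.0] * 12 + [0.0] * 6

print(f"annual-style match: {annual_style_match(load, clean):.0%}")  # 100%
print(f"hourly CFE score:   {hourly_cfe_score(load, clean):.0%}")    # 50%
```

The same portfolio that looks fully matched by volume covers only half of the load once each hour is scored on its own, which is the structural deficit that annual metrics conceal.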

Hybrid Power Purchase Agreements as a Reliability Architecture

Hybrid power purchase agreements introduce structural diversity into clean energy procurement portfolios. Instead of relying solely on solar or wind, facilities can contract with multiple renewable sources that exhibit complementary generation profiles. Solar arrays often peak during midday hours, while wind farms can generate stronger output overnight in certain regions. By blending these resources within structured agreements, facilities reduce exposure to single-source intermittency risks. This portfolio approach smooths aggregate production curves and narrows the mismatch between renewable supply and compute demand. Consequently, procurement evolves into a reliability architecture rather than a simple cost negotiation exercise.
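
The smoothing effect of blending can be illustrated with a small sketch. It assumes three hypothetical portfolios that each deliver the same daily volume (solar only, wind only, and an even mix) and measures how much load is left uncovered hour by hour. The profiles are invented for illustration and do not describe any real project.

```python
def hourly_shortfall(load, supply):
    """Total energy (MWh) the load still needs in hours when supply falls short."""
    return sum(max(l - s, 0.0) for l, s in zip(load, supply))


load = [10.0] * 24
# Hypothetical profiles, each totalling 240 MWh over the day.
solar = [0.0] * 6 + [20.0] * 12 + [0.0] * 6    # daytime only
wind = [16.0] * 6 + [4.0] * 12 + [16.0] * 6    # stronger overnight
blend = [0.5 * s + 0.5 * w for s, w in zip(solar, wind)]

for name, profile in [("solar", solar), ("wind", wind), ("blend", blend)]:
    print(f"{name:>5}: shortfall {hourly_shortfall(load, profile):.0f} MWh")
```

With these numbers the shortfall falls from 120 MWh (solar) and 72 MWh (wind) to 24 MWh for the blend, even though the contracted volume is unchanged. Real complementarity depends on local resource data and is rarely this clean.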

Geographic diversification further enhances resilience within hybrid agreements. Wind generation patterns in coastal areas may differ significantly from inland solar resources, creating temporal diversity across regions. Contracting across multiple grid zones can reduce correlated weather risks that disrupt single-location projects. Transmission constraints remain a factor, yet strategic siting near load centers improves deliverability. Structured PPAs can also integrate hydroelectric supply, which offers seasonal balancing capabilities that complement variable renewables. This multi-source blending constructs a carbon-free foundation capable of supporting sustained AI workloads without frequent recourse to fossil backup.

Financial structuring within hybrid PPAs plays a critical role in managing price volatility. Long-term fixed-price contracts can hedge against fuel price fluctuations that influence wholesale electricity markets. Portfolio layering allows different contract tenors to mature at staggered intervals, reducing concentration risk. Moreover, virtual PPAs can provide flexibility when physical delivery constraints limit direct power transfer. Risk modeling tools assess production variance and revenue certainty across blended renewable assets. Through disciplined structuring, hybrid agreements translate clean energy ambitions into stable operational cost frameworks for energy-intensive AI facilities.
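
The hedging effect of a virtual PPA can be sketched as a contract for differences. The example below is a simplified illustration: the strike price, market prices, and volumes are hypothetical, and it assumes generation matches load in every interval, which ignores shape mismatch and locational price differences that real contracts must handle.

```python
def vppa_settlement(strike, market_prices, generation):
    """Buyer's receipt per interval: (market price - strike) * generated MWh.

    Positive when the market clears above the strike, negative below it.
    """
    return [(p - strike) * g for p, g in zip(market_prices, generation)]


strike = 40.0                      # $/MWh, hypothetical
market = [30.0, 55.0, 80.0]        # $/MWh in three intervals, hypothetical
generation = [10.0, 10.0, 10.0]    # MWh produced by the contracted asset
load = [10.0, 10.0, 10.0]          # MWh bought from the grid at market price

settlements = vppa_settlement(strike, market, generation)
grid_cost = sum(p * l for p, l in zip(market, load))
net_cost = grid_cost - sum(settlements)

print(f"grid cost ${grid_cost:.0f}, settlements ${sum(settlements):.0f}, net ${net_cost:.0f}")
```

Under these assumptions the net cost works out to the strike price times the volume (1,200 dollars for 30 MWh), regardless of how the market price moves.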

Dispatchable Clean Energy: The Missing Link in AI Infrastructure

Variable renewables alone cannot guarantee uninterrupted power during prolonged weather anomalies. Dispatchable zero-carbon resources therefore emerge as stability anchors within renewable-heavy grids. Geothermal plants provide consistent baseload output because subterranean heat remains largely independent of atmospheric conditions. Advanced nuclear technologies also promise continuous generation with minimal carbon emissions. Flexible hydropower can ramp output quickly in response to sudden load fluctuations or renewable dips. Integrating these sources into clean energy portfolios strengthens reliability without reverting to fossil-based peaking plants.

Geothermal capacity, in particular, aligns well with round-the-clock compute demands. Its high capacity factor ensures steady electricity flow, reducing reliance on short-term market purchases during peak hours. However, resource availability depends on geological conditions, which limits universal deployment. Where feasible, geothermal integration can offset solar deficits during evening training cycles of large AI models. Meanwhile, next-generation nuclear designs aim to enhance safety and reduce construction timelines. These technologies offer the potential to supply firm carbon-free electricity that stabilizes renewable-dominated grids.

Hydropower contributes flexible ramping capabilities that support both frequency regulation and load balancing. Reservoir-based systems can store potential energy and release it when renewable output declines unexpectedly. Such dispatchability provides grid operators with tools to maintain stability during sudden demand surges from AI clusters. Environmental considerations require careful watershed management, yet modern facilities incorporate sustainability safeguards. By combining dispatchable resources with variable renewables, data centers can approach true temporal alignment between clean generation and computational demand. This blended strategy strengthens the structural backbone of low-carbon digital infrastructure.

Storage as a Strategic Bridge Between Generation and Demand

Energy storage systems act as temporal connectors within clean power architectures. Lithium-ion batteries can capture surplus solar generation during midday peaks and discharge it during evening compute spikes. This capability narrows the gap between intermittent supply and constant AI demand. Short-duration batteries excel at frequency regulation and peak shaving, enhancing grid stability. However, extended lulls in renewable production require long-duration storage solutions that exceed four-hour discharge windows. Strategic deployment of both short and long-duration systems strengthens resilience against variability.
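
A simple way to see how storage shifts midday surplus into the evening is a greedy hourly dispatch loop. The sketch below is an illustration under stated assumptions, not a production dispatch model: the battery size, power rating, and 90% charging efficiency are hypothetical, the battery starts empty, and degradation and prices are ignored.

```python
def dispatch_battery(load, clean, capacity_mwh, power_mw, efficiency=0.9):
    """Greedy hourly dispatch: charge from clean surplus, discharge into deficits.

    Returns the clean energy (MWh) delivered to the load in each hour.
    With hourly steps, a power limit in MW equals an energy limit in MWh.
    """
    soc = 0.0  # state of charge in MWh
    delivered = []
    for demand, supply in zip(load, clean):
        direct = min(demand, supply)
        surplus = supply - direct
        deficit = demand - direct
        # Charging losses are applied on the way in.
        headroom = (capacity_mwh - soc) / efficiency
        charge = max(0.0, min(surplus, power_mw, headroom))
        soc += charge * efficiency
        discharge = min(deficit, power_mw, soc)
        soc -= discharge
        delivered.append(direct + discharge)
    return delivered


load = [10.0] * 24
clean = [0.0] * 6 + [20.0] * 12 + [0.0] * 6
delivered = dispatch_battery(load, clean, capacity_mwh=40.0, power_mw=10.0)
score = sum(delivered) / sum(load)
print(f"hourly CFE score with storage: {score:.0%}")
```

In this example a four-hour battery (40 MWh at 10 MW) lifts the hourly score from 50% to roughly 67%, yet the last two evening hours and the early morning remain uncovered. That residual gap is the kind of deficit longer-duration storage or firm generation would need to fill.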

Long-duration technologies include flow batteries, compressed air systems, and emerging thermal storage designs. These systems can sustain output for ten hours or more, which covers overnight gaps, and some designs aim at the multi-day discharge needed to bridge extended renewable deficits. Thermal storage can also integrate with cooling systems, which account for a significant share of data center energy consumption. By shifting cooling loads to periods of abundant renewable supply, facilities reduce reliance on carbon-intensive electricity during peak intervals. Storage therefore evolves from a backup mechanism into an active enabler of clean energy continuity. Such integration reshapes the operational profile of AI campuses.

Battery deployment strategies must consider degradation rates, charging cycles, and economic trade-offs. Intelligent energy management software can optimize charging schedules based on carbon intensity forecasts and price signals. Coordinated dispatch reduces strain on grid infrastructure while maximizing clean energy utilization. Additionally, co-locating storage with renewable assets minimizes transmission losses and enhances efficiency. These design choices reinforce the strategic role of storage as an infrastructural bridge rather than an auxiliary add-on. Over time, declining storage costs may further accelerate adoption across high-load AI facilities.

Carbon-Aware Workload Scheduling: When Compute Becomes Grid-Responsive

Software orchestration now enters the energy conversation as a decisive factor in sustainability outcomes. AI workloads vary in latency sensitivity, training intensity, and regional deployment requirements. Non-urgent training tasks can shift to hours when renewable output peaks or grid carbon intensity declines. Real-time carbon data feeds enable schedulers to allocate compute tasks dynamically across geographically distributed clusters. This flexibility transforms compute infrastructure into a grid-responsive asset rather than a passive load. Consequently, digital systems begin to mirror demand response strategies long used in industrial energy management.
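
The time-shifting idea can be sketched as a search over a carbon intensity forecast. The function below is a minimal illustration, assuming a deferrable job of fixed length that must finish by a deadline; the forecast values and the name `best_start_hour` are hypothetical and not taken from any particular scheduler.

```python
def best_start_hour(forecast, duration, deadline):
    """Return the start hour whose `duration`-hour window has the lowest
    total forecast carbon intensity and finishes no later than `deadline`."""
    if duration <= 0 or duration > deadline or deadline > len(forecast):
        raise ValueError("job does not fit in the forecast horizon")
    candidates = range(0, deadline - duration + 1)
    return min(candidates, key=lambda start: sum(forecast[start:start + duration]))


# Hypothetical hourly forecast in gCO2/kWh for the next 12 hours.
forecast = [520, 480, 450, 300, 180, 150, 170, 260, 400, 510, 530, 540]

start = best_start_hour(forecast, duration=3, deadline=10)
print(f"start the 3-hour job at hour {start}")  # hour 4
```

Latency-sensitive inference would bypass this path entirely; only jobs explicitly marked as deferrable should be candidates for shifting.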

Geographic load balancing amplifies the impact of carbon-aware scheduling. Cloud regions in areas with abundant wind at night can absorb training cycles during those periods. Meanwhile, inference tasks that require low latency remain anchored near user populations but still benefit from local renewable integration. Data-driven orchestration platforms can weigh energy cost, carbon intensity, and performance constraints simultaneously. Such multidimensional optimization enhances both sustainability and operational efficiency. Over time, algorithmic scheduling may reduce peak strain on fossil-intensive grids without compromising service quality.
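
One way to weigh cost, carbon, and performance together is a normalised weighted score per region. The sketch below is only one possible formulation: the region names, metric values, and weights are hypothetical, and a real platform would likely add capacity limits and data residency rules.

```python
from dataclasses import dataclass


@dataclass
class Region:
    name: str
    carbon: float       # grid carbon intensity, gCO2/kWh
    price: float        # energy price, $/MWh
    latency_ms: float   # network latency to the job's data or users


def normalise(values):
    """Scale values to [0, 1]; all-equal inputs map to 0."""
    lo, hi = min(values), max(values)
    return [0.0 if hi == lo else (v - lo) / (hi - lo) for v in values]


def rank_regions(regions, w_carbon=0.5, w_price=0.3, w_latency=0.2):
    """Return (score, name) pairs sorted best first; lower scores are better."""
    carbon = normalise([r.carbon for r in regions])
    price = normalise([r.price for r in regions])
    latency = normalise([r.latency_ms for r in regions])
    scored = [
        (w_carbon * c + w_price * p + w_latency * l, r.name)
        for r, c, p, l in zip(regions, carbon, price, latency)
    ]
    return sorted(scored)


regions = [
    Region("region-a", carbon=450, price=40, latency_ms=20),
    Region("region-b", carbon=120, price=55, latency_ms=80),
    Region("region-c", carbon=300, price=35, latency_ms=50),
]

for score, name in rank_regions(regions):
    print(f"{name}: {score:.3f}")
```

With these weights the middle option, region-c, ranks first because it avoids the worst value on every axis. Changing the weights changes the ranking, so the choice of weights is itself a policy decision.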

Machine learning models themselves can assist in forecasting renewable output and grid emissions trends. Predictive analytics can anticipate solar irradiance patterns or wind speeds hours in advance. Integrating these forecasts with workload management systems enables proactive scheduling adjustments. This approach elevates sustainability from a reporting metric to a runtime variable embedded within computational logic. By embedding energy awareness into the orchestration layer, AI infrastructure aligns its own intelligence with decarbonization objectives. The convergence of digital optimization and clean energy management signals a structural evolution in data center design.

Predictive carbon optimization requires granular telemetry across servers, cooling systems, and grid interfaces to function with precision. High-resolution metering can map the exact relationship between compute intensity and marginal emissions factors in each operating region. Integrating this telemetry into workload orchestration engines allows dynamic shifting that responds to hourly carbon forecasts rather than historical averages. Such synchronization reduces indirect emissions without interrupting service reliability or compromising latency-sensitive processes. Furthermore, automated policy engines can prioritize clean energy utilization during periods of constrained renewable supply. This convergence of data analytics and energy intelligence establishes a responsive infrastructure model capable of evolving with grid decarbonization trajectories.

Carbon-Aware Orchestration and Predictive Grid Intelligence

Advanced orchestration frameworks can incorporate carbon pricing signals alongside performance metrics to guide compute allocation decisions. Where carbon costs are priced into wholesale markets, so that emissions-intensive hours also carry higher marginal prices, scheduling systems can defer non-critical processing tasks during those hours. This economic alignment strengthens the financial rationale for sustainability while maintaining operational resilience. Carbon-aware compute strategies also complement storage dispatch, enabling coordinated charging and discharging cycles that reflect workload intensity. Over time, this synergy between software and hardware layers reduces dependency on fossil peaking resources during sudden load surges. Such integration positions AI campuses as adaptive nodes within increasingly renewable-dominated power systems.

The architecture of carbon-responsive computing extends beyond training schedules to encompass inference routing and redundancy planning. Distributed AI services can route queries to regions experiencing lower carbon intensity while maintaining acceptable response times. This routing logic requires sophisticated latency modeling to preserve user experience across geographies. Meanwhile, redundancy frameworks must account for renewable variability without triggering unnecessary fossil-backed failovers. Energy-aware replication policies can balance resilience with decarbonization objectives. Through coordinated orchestration, compute infrastructure begins to operate as an intelligent participant in grid balancing rather than an inflexible demand block.
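
For inference, latency acts as a hard constraint rather than one factor among several. The following sketch shows one possible routing rule under that assumption: choose the lowest-carbon region within a latency budget, and fall back to the fastest region when none qualifies. Region names and figures are hypothetical.

```python
def route_query(regions, latency_budget_ms):
    """Pick a region for one query.

    `regions` maps a name to (carbon_intensity_gco2_kwh, latency_ms).
    Lowest carbon wins among regions within the latency budget; if none
    qualify, the lowest-latency region is used so the query is still served.
    """
    eligible = {
        name: metrics
        for name, metrics in regions.items()
        if metrics[1] <= latency_budget_ms
    }
    if not eligible:
        return min(regions, key=lambda name: regions[name][1])
    return min(eligible, key=lambda name: eligible[name][0])


regions = {
    "near": (400, 15),
    "mid": (200, 60),
    "far": (90, 140),
}

print(route_query(regions, latency_budget_ms=100))  # mid
print(route_query(regions, latency_budget_ms=200))  # far
print(route_query(regions, latency_budget_ms=10))   # near (fallback)
```

Treating latency as a filter keeps user experience predictable, while the fallback branch ensures that carbon preferences never cause a request to be dropped.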

Integration with grid operators further enhances the effectiveness of responsive AI campuses. Real-time data exchange protocols can communicate anticipated load shifts to system operators, improving overall network stability. Participation in ancillary service markets allows facilities to provide frequency response or demand reduction during grid stress events. These mechanisms convert flexible compute capacity into a reliability resource that supports renewable integration. Transparent reporting frameworks can document hourly emissions performance, reinforcing accountability across stakeholders. Such collaborative engagement aligns digital expansion with broader decarbonization pathways shaping global electricity systems.

Conclusion: From Carbon Neutral Claims to Carbon-Aligned Operations

True carbon alignment requires a coordinated architecture that integrates procurement strategy, dispatchable generation, storage capacity, and intelligent workload orchestration. Annual credit matching cannot capture the temporal realities of electricity consumption that continuous AI operations impose. Hybrid procurement portfolios introduce diversity, yet they require firm clean anchors to stabilize supply across unpredictable weather cycles. Storage systems bridge temporal mismatches, while predictive scheduling tools refine real-time alignment between compute and clean generation. Each layer reinforces the others, forming an interconnected framework rather than isolated sustainability initiatives. Through such integration, energy strategy becomes inseparable from infrastructure design.

Carbon-aligned operations also demand transparent metrics that reflect hourly performance rather than annual aggregates. High-resolution monitoring enables facilities to quantify gaps and refine strategies continuously. Dispatchable clean energy resources provide structural assurance during prolonged renewable deficits, reducing systemic reliance on fossil backup. Storage deployments absorb variability and extend renewable utilization across critical demand windows. Meanwhile, carbon-aware orchestration software ensures that computational flexibility translates into measurable emissions reductions. Together, these elements convert sustainability commitments into operational realities grounded in engineering discipline.

The path toward continuous clean power for AI infrastructure therefore hinges on systemic integration rather than symbolic offsetting. Every layer of the stack, from power contracts to scheduling algorithms, must align around temporal precision and resilience. As grids decarbonize further, facilities that embed energy intelligence into their core architecture will adapt more efficiently to evolving market dynamics. Infrastructure decisions made today will influence emissions trajectories for decades, given the lifespan of large-scale data center assets. Strategic foresight requires balancing technological feasibility with reliability imperatives that sustain uninterrupted computation. Ultimately, sustained alignment between clean energy supply and digital demand defines the credibility of future-ready AI ecosystems.