AI Is Stress-Testing the Rack

Cloud computing has always promised simplicity: hide complexity, let users focus on applications, and treat infrastructure as a flexible pool of resources. For traditional workloads, that promise worked well. Servers were modest, racks were passive containers, and data centers scaled by adding standardized modules.

Yet AI workloads are exposing the limits of this model. They are not only larger; they reshape the very structure of computing clusters. A single AI training cluster can demand many times the power and throughput of a conventional web server farm. Racks that once handled 5–10 kilowatts are now expected to sustain 80–100 kilowatts or more. As a result, the physical limits of power delivery, thermal management, and operational predictability are being pushed to extremes.

This tension between abstraction and physical reality sets the stage for a new challenge: cloud architects can no longer focus solely on software and networking. They must now account for the material constraints of racks, power, and heat.

Cloud Architects: A Role Redefined by Physical Limits

Traditionally, cloud architects concentrated on software and network design while facilities engineers managed the physical infrastructure: racks, power feeds, and cooling. Architects worked with APIs, clusters, virtual machines, and service placement, assuming the underlying hardware could always keep up.

With AI, that assumption no longer holds. Workloads consume extraordinary power and generate intense heat, forcing architects to consider electricity, space, and rack density as core design factors. They must integrate software scheduling, rack-level constraints, and data center power realities into cohesive solutions.

In practical terms, this expanded role includes:

- Workload placement guided by physical rack limits

- Rack density and power envelopes as essential design constraints

- Operational reliability in environments where traditional static planning fails

In effect, the rack itself becomes a boundary that architects must design around. Understanding this shift is key to grasping why AI workloads are breaking conventional cloud design models.

What “Breaking the Rack” Really Means

The phrase “breaking the rack” captures three interrelated pressures that AI workloads impose. Understanding these pressures is essential for seeing how architects are adapting.

Power

AI racks draw far more power than traditional ones. Where legacy racks drew 5–10 kW each, AI racks often exceed 80 kW and can reach 100 kW or more. Electrical infrastructure must now support higher-capacity busways, power distribution units (PDUs), and substations.

This also reveals limits in utility capacity and grid access. Deployments must now work within what is available rather than what is ideal.

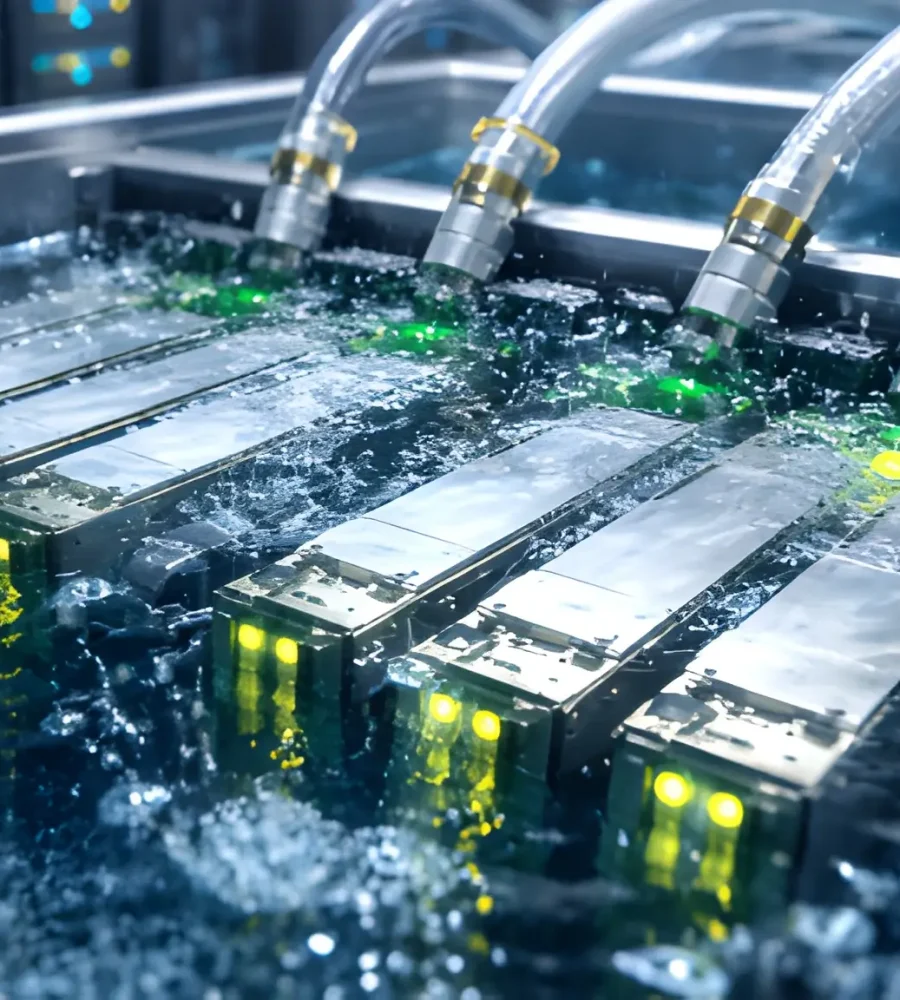

Thermal Envelope

High-density racks generate concentrated heat. Traditional designs relied on air cooling and predictable thermal distribution. At 80–100 kW per rack, those assumptions break down. Rack layout and enclosure design become critical constraints on workload placement.

Thermal management has therefore moved from a back-end concern to a factor that directly influences scheduling decisions. Architects must now ask not only how workloads run, but where they can run safely.

Variability

AI workloads behave differently from traditional web or batch systems. Instead of following predictable peaks and troughs, AI training jobs can hold a rack near full power for long stretches, and their load can also swing sharply with little warning. Static capacity models fail when a rack can move from half load to full load in minutes.
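To make this failure mode concrete, here is a minimal Python sketch using a made-up, per-minute load trace for a single hypothetical 100 kW rack. It compares a static plan sized from average draw plus a margin against what the rack actually does. The trace, the 20% margin, and the `static_plan_kw` helper are illustrative assumptions, not measurements from any real deployment.

```python
from statistics import mean

# Hypothetical per-minute power readings (kW) for one AI rack: roughly half
# load for five minutes, then a jump to near full load within a few minutes.
load_trace_kw = [50, 52, 51, 49, 50, 78, 97, 99, 100, 98, 99, 100]

RACK_ENVELOPE_KW = 100  # assumed rated power envelope for this illustrative rack


def static_plan_kw(trace, margin=0.2):
    """Static model: size capacity from average load plus a fixed safety margin."""
    return mean(trace) * (1 + margin)


def minutes_over_plan(trace, plan_kw):
    """Count the readings where actual draw exceeds the planned capacity."""
    return sum(1 for kw in trace if kw > plan_kw)


plan = static_plan_kw(load_trace_kw)
peak = max(load_trace_kw)
over = minutes_over_plan(load_trace_kw, plan)

print(f"static plan: {plan:.1f} kW, peak: {peak} kW, minutes over plan: {over}")
print(f"peak within rack envelope: {peak <= RACK_ENVELOPE_KW}")
```

With these made-up numbers, the average-based plan comes out at about 92 kW and is exceeded in 6 of the 12 minutes, even though the rack never leaves its 100 kW envelope. For loads like this, planning to the envelope rather than to the average is the safer assumption.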

Taken together, power, thermal density, and workload variability transform the rack into an architectural boundary, an active element shaping every deployment decision.

Reframing the Problem: From Abstraction to Bottlenecks

Historically, cloud architecture pushed physical constraints into facilities engineering. Compute, storage, and network were abstracted, hiding limits beneath layers of software.

AI workloads challenge that model. Abstraction now meets a hard boundary at the rack. Architects must identify bottlenecks, determine where abstraction cannot extend, and embed constraints into system-level policies.

This marks a shift in perspective. Solutions are no longer just about resource pools or application logic; they must account for racks, power, and thermal realities as integral parts of the design.

What Cloud Architects Are Doing About It

Faced with these challenges, architects are taking decisive steps to align infrastructure with the realities of AI workloads.

1. Designing AI-Specific Racks

Not all racks are created equal. Architects now design AI-specific racks with distinct engineering specifications, power feeds, and operational policies. This involves:

- Defining rack classes with clear constraints

- Allocating space and power for AI workloads separately from general compute

- Avoiding retrofitting AI workloads into traditional racks

By treating these racks as separate infrastructure domains, architects gain more control over performance, reliability, and operational risk.
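One way to express rack classes as policy is a small admission check. The Python sketch below is hypothetical: the class names, the 10 kW and 100 kW envelopes, and the workload labels are assumptions chosen to mirror the figures above, not a real scheduler API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RackClass:
    """Hypothetical rack class: a named envelope plus the workload kinds it accepts."""
    name: str
    max_power_kw: float
    allowed_workloads: frozenset


@dataclass(frozen=True)
class Workload:
    name: str
    kind: str        # illustrative labels such as "ai-training" or "general"
    power_kw: float  # expected sustained draw on a single rack


GENERAL = RackClass("general", 10, frozenset({"general"}))
AI_DENSE = RackClass("ai-dense", 100, frozenset({"ai-training", "ai-inference"}))


def fits(workload: Workload, rack: RackClass) -> bool:
    """Admit a workload only if its kind is allowed AND its draw fits the envelope.

    The kind check encodes the "no retrofitting" policy: AI jobs never land on
    general racks, and general compute stays out of the AI power domain.
    """
    return workload.kind in rack.allowed_workloads and workload.power_kw <= rack.max_power_kw


training_job = Workload("train-shard-0", "ai-training", 85)
web_tier = Workload("web-frontend", "general", 6)

print(fits(training_job, GENERAL))   # False: wrong class and over the 10 kW envelope
print(fits(training_job, AI_DENSE))  # True
print(fits(web_tier, AI_DENSE))      # False: general compute kept separate
print(fits(web_tier, GENERAL))       # True
```

Keeping the class check separate from the power check makes the separation of domains explicit: a general rack is rejected for AI work even in a case where it might nominally have spare power.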

2. Letting Hardware Constraints Guide Architecture

Uniform abstraction has given way to hardware-aware design. Architects now emphasize:

- Bare metal provisioning for high-density workloads

- Topology-aware service placement based on physical adjacency and power availability

- Reduced reliance on virtualization layers that obscure critical limits

This approach improves efficiency while respecting the physical realities of racks and power distribution.
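Topology-aware placement can be sketched as a filter followed by a grouping step. In the Python example below, a hypothetical `pod` field stands in for physical adjacency, and `free_power_kw` is each rack's envelope minus its current draw. The job is kept within one pod or not placed at all, and among eligible pods the one with the most headroom wins. These rules are illustrative assumptions, not a description of any provider's scheduler.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rack:
    rack_id: str
    pod: str              # hypothetical grouping of physically adjacent racks
    free_power_kw: float  # envelope minus current draw


def place_job(racks, racks_needed, kw_per_rack) -> Optional[list]:
    """Place a job on racks that share a pod and each have enough free power.

    Staying inside one pod is a stand-in for physical adjacency; the function
    returns None rather than scattering the job across distant racks.
    """
    by_pod = defaultdict(list)
    for rack in racks:
        if rack.free_power_kw >= kw_per_rack:
            by_pod[rack.pod].append(rack)

    candidates = []
    for pod, eligible in by_pod.items():
        if len(eligible) >= racks_needed:
            # Within a pod, prefer the racks with the most power headroom.
            eligible.sort(key=lambda r: r.free_power_kw, reverse=True)
            total_free = sum(r.free_power_kw for r in eligible)
            candidates.append((total_free, pod, eligible[:racks_needed]))

    if not candidates:
        return None
    # Choose the pod with the most eligible headroom (pod name breaks ties).
    _, _, chosen = max(candidates, key=lambda c: (c[0], c[1]))
    return [r.rack_id for r in chosen]


racks = [
    Rack("a1", "pod-a", 90), Rack("a2", "pod-a", 40), Rack("a3", "pod-a", 85),
    Rack("b1", "pod-b", 95), Rack("b2", "pod-b", 92), Rack("b3", "pod-b", 88),
]
print(place_job(racks, racks_needed=3, kw_per_rack=80))  # ['b1', 'b2', 'b3']
print(place_job(racks, racks_needed=4, kw_per_rack=80))  # None
```

Returning `None` instead of splitting the job is a deliberate choice in this sketch: it surfaces a capacity shortfall to the operator rather than hiding it behind a poorly connected placement.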

3. Treating Thermal Limits as a Scheduling Factor

Thermal limits now determine both when and where workloads can run. Architects integrate rack thermal profiles into scheduling decisions, balancing workloads across available capacity while avoiding hotspots.

Predictive models, real-time telemetry, and system-level coordination allow workloads to run at full power without exceeding thermal thresholds.
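A thermal-aware scheduling decision can be reduced to a headroom calculation. The sketch below assumes each rack reports its current heat load from telemetry and has a known cooling capacity, and it uses the job's power draw as its predicted heat, since nearly all electrical power consumed by IT equipment ends up as heat. The 90% safety fraction and the `pick_rack` helper are hypothetical; a real system would substitute its own predictive model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RackThermal:
    rack_id: str
    heat_load_kw: float         # current heat load, assumed to come from telemetry
    cooling_capacity_kw: float  # heat the rack's cooling can remove


SAFETY_FRACTION = 0.9  # hypothetical: never plan above 90% of cooling capacity


def pick_rack(racks, job_kw) -> Optional[str]:
    """Return the rack with the most thermal headroom after adding the job.

    The job's power draw is used as its predicted heat contribution. Racks that
    would cross the safety threshold are skipped, and choosing the largest
    remaining headroom spreads load instead of concentrating it into hotspots.
    """
    best_id, best_headroom = None, float("-inf")
    for rack in racks:
        limit = rack.cooling_capacity_kw * SAFETY_FRACTION
        headroom = limit - (rack.heat_load_kw + job_kw)
        if headroom >= 0 and headroom > best_headroom:
            best_id, best_headroom = rack.rack_id, headroom
    return best_id


racks = [
    RackThermal("r1", heat_load_kw=70, cooling_capacity_kw=100),
    RackThermal("r2", heat_load_kw=40, cooling_capacity_kw=100),
    RackThermal("r3", heat_load_kw=20, cooling_capacity_kw=60),
]
print(pick_rack(racks, job_kw=25))  # r2
print(pick_rack(racks, job_kw=60))  # None: no rack can absorb the extra heat
```

When no rack qualifies, the job waits rather than running hot, which is the "when" half of the scheduling decision described above.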

4. Aligning Architecture with Power Availability

Power access now drives deployment decisions. Architects incorporate:

- Pre-deployment checks for available power

- Capacity planning based on utility and regional constraints

- Staged rollouts that match achievable power delivery

This ensures that system designs are grounded in reality rather than idealized planning assumptions.
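The pre-deployment check and staged rollout described above can be sketched in a few lines of Python. The power schedule, rack count, and per-rack draw below are hypothetical, and the schedule is assumed to list the cumulative power the site can deliver at each phase.

```python
def pre_deployment_check(racks, kw_per_rack, available_kw):
    """Hypothetical gate: reject a deployment whose total draw exceeds deliverable power."""
    return racks * kw_per_rack <= available_kw


def staged_rollout(target_racks, kw_per_rack, cumulative_kw_by_phase):
    """Split a deployment into phases that each fit within deliverable power.

    cumulative_kw_by_phase is an assumed, non-decreasing schedule of the total
    power (kW) the site can deliver at each phase. Returns racks added per phase.
    """
    live = 0
    added = []
    for available_kw in cumulative_kw_by_phase:
        # Racks the site can power at this phase, capped at the deployment target.
        supportable = min(target_racks, int(available_kw // kw_per_rack))
        step = max(0, supportable - live)
        added.append(step)
        live += step
    return added


TARGET_RACKS = 100
KW_PER_RACK = 100
PHASES_KW = [2_500, 6_000, 10_000]  # hypothetical utility delivery schedule

print(pre_deployment_check(TARGET_RACKS, KW_PER_RACK, PHASES_KW[0]))  # False
print(staged_rollout(TARGET_RACKS, KW_PER_RACK, PHASES_KW))           # [25, 35, 40]
```

Here the full 10 MW deployment fails the initial check, so it is split into three stages that track the assumed delivery schedule instead of waiting for the final phase.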

Signals from Real-World Infrastructure

The architectural shifts described above are visible in major cloud and AI infrastructure deployments around the world. A number of hyperscale providers and AI‑focused data center operators are already designing and building facilities where high‑density racks and workload‑specific considerations drive decisions.

For example, Microsoft has deployed a supercomputer‑scale AI cluster on its Azure platform that places thousands of next‑generation GPUs into highly dense rack configurations. These racks deliver extraordinary performance and memory bandwidth for AI training and inference, and they represent clear departures from traditional server layouts and power envelopes.

In Taiwan, GMI Cloud announced a new AI data center project that will house around 7,000 GPUs distributed across nearly 100 high‑density racks and will draw roughly 16 megawatts of power. This project highlights how providers are designing facilities around high rack densities and significant power demand to support AI workloads.
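A quick back-of-the-envelope calculation shows what these reported figures imply per rack. The sketch treats "nearly 100" racks as exactly 100 and assumes the 16 MW figure is total facility power. Because part of that power goes to cooling and other overhead, it also applies a hypothetical power usage effectiveness (PUE) of 1.3; the project's actual PUE is not given in the source.

```python
# Approximate figures as reported for the GMI Cloud project in Taiwan.
GPUS = 7_000
RACKS = 100           # "nearly 100" racks, rounded for the estimate
FACILITY_KW = 16_000  # "roughly 16 megawatts", assumed to be total facility power

gpus_per_rack = GPUS / RACKS             # about 70 GPUs per rack
kw_per_rack_upper = FACILITY_KW / RACKS  # upper bound if all power reached the racks

# Hypothetical PUE (facility power / IT power); not stated in the source.
ASSUMED_PUE = 1.3
kw_per_rack_it = FACILITY_KW / ASSUMED_PUE / RACKS  # estimated IT power per rack

print(f"{gpus_per_rack:.0f} GPUs/rack, <= {kw_per_rack_upper:.0f} kW/rack, "
      f"~{kw_per_rack_it:.0f} kW/rack IT at PUE {ASSUMED_PUE}")
```

Under these assumptions, each rack carries about 70 GPUs and roughly 120 kW or more of IT load, which is consistent with the 80–100 kW-plus densities described earlier.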

Industry events and open standards activity also reflect the importance of rack‑level design. AMD recently introduced a rack‑scale AI infrastructure platform based on open rack specifications that is intended to support the scale and performance requirements of advanced AI workloads. Such platforms are not merely collections of servers; they are engineered around dense interconnects, power delivery, and structural integration at the rack scale.

Across the broader infrastructure landscape, data center operators are consistently planning for significantly higher rack densities than in the past. Reports indicate that the average rack in many facilities now handles more than 10 kilowatts of load, with high‑performance AI racks exceeding that by an order of magnitude, reshaping power, cooling, and layout planning across facilities.

Looking Ahead: Beyond the Rack

The next frontier will push constraints even closer to the chip. For now, the key lesson is coordination. Software, hardware, and facilities must work together to achieve reliable, high-performance systems. Architects must balance abstraction with physical limits, ensuring that AI workloads can operate efficiently without exceeding the bounds of power, space, and thermal capacity.

AI workloads have closed the gap between cloud software and physical infrastructure. Racks are no longer neutral containers; they act as architectural boundaries, dictating where workloads can run, how power is delivered, and how heat is managed.

Cloud architects are redesigning systems to operate within these boundaries. They identify bottlenecks, make trade-offs between abstraction and physical realities, and create solutions capable of handling AI workloads at scale. The future of AI infrastructure is being determined at the rack level, not only in the software layer.