The economics of cloud computing are being rewritten by custom silicon. Hyperscalers such as Amazon Web Services, Google Cloud, Microsoft Azure, and Meta are investing billions in chips designed specifically for AI workloads. This shift marks a decisive move away from relying solely on off-the-shelf GPUs and CPUs from vendors like Nvidia and AMD. In its place is a new infrastructure model in which in-house silicon becomes both a competitive lever and an economic advantage, reshaping how these companies manage costs, performance, and long-term growth.

At the center of this transition is a straightforward insight. Traditional hardware components offer flexibility and broad compatibility, but they come with high price tags and are not perfectly aligned with the demands of large-scale AI workloads. By designing their own silicon, hyperscalers can optimize performance, reduce long-term costs, and gain greater control over critical supply chains. These chips are purpose-built, engineered around the operational realities of hyperscale data centers. Recent deployments across the industry show that these investments are already changing the competitive landscape.

Reducing Total Cost of Ownership

The most immediate driver behind custom chips is economic. Hyperscalers have historically paid significant premiums for high-end GPUs from external vendors, a dynamic often described as the "Nvidia tax." These premiums can erode margins and raise the cost of delivering AI services in the cloud.

Custom chips such as AWS Trainium and Inferentia or Google’s Tensor Processing Units are engineered to lower total cost of ownership across massive AI clusters. Because hyperscalers operate at extraordinary scale, even modest efficiency gains produce meaningful financial impact. A small percentage improvement in performance per dollar or performance per watt, when multiplied across thousands of servers and dozens of data centers, translates into substantial reductions in operating expenses.
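To make the scale argument concrete, the short Python sketch below works through the arithmetic for a hypothetical fleet. The fleet size, the annual cost per server, and the function name `annual_savings` are illustrative assumptions, not figures reported by any provider. The only modeling assumption is that if performance per dollar improves by a fraction g, the same amount of work costs 1 / (1 + g) of what it did before.

```python
# Illustrative figures only: the fleet size and per-server cost are hypothetical.
SERVERS = 100_000
ANNUAL_COST_PER_SERVER_USD = 50_000.0


def annual_savings(fleet_cost_usd: float, perf_per_dollar_gain: float) -> float:
    """Return the yearly saving from running the same workload on hardware
    whose performance per dollar is higher by `perf_per_dollar_gain`
    (0.05 means 5 percent better).

    Assumes the same amount of work then costs fleet_cost / (1 + gain).
    """
    if perf_per_dollar_gain <= -1:
        raise ValueError("gain must be greater than -1 (that is, -100%)")
    return fleet_cost_usd - fleet_cost_usd / (1 + perf_per_dollar_gain)


fleet_cost = SERVERS * ANNUAL_COST_PER_SERVER_USD  # $5 billion per year
for gain in (0.02, 0.05, 0.10):
    saved = annual_savings(fleet_cost, gain)
    print(f"{gain:.0%} better performance per dollar -> ${saved:,.0f} saved per year")
```

Under these assumed numbers, a 5 percent gain in performance per dollar works out to roughly $238 million per year. The saving is slightly smaller than the headline percentage because it equals 1 - 1/(1 + g) of fleet cost, but it still grows linearly with fleet size, which is why modest gains matter at hyperscale.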

Cloud providers are increasingly deploying their own chips in flagship AI services, signaling confidence in both cost efficiency and performance. As custom silicon becomes embedded in core offerings, it directly influences pricing models and competitive positioning.

Performance and Efficiency Tailored to AI

Cost savings are possible because these chips are built specifically for AI. Training and inference workloads rely heavily on matrix multiplication and other large-scale tensor and vector operations. General-purpose GPUs are powerful, but they are designed to handle a wide range of computing tasks. As a result, a portion of their silicon is dedicated to capabilities that AI workloads may not fully use.

Hyperscaler-designed ASICs focus their transistor budgets on the operations that matter most for deep learning. By eliminating unnecessary logic and optimizing data pathways, they can deliver higher throughput and better energy efficiency for targeted workloads.

Google’s TPUs illustrate this approach. They are deeply integrated with Google’s software stack and tuned for large-scale model training and inference. Meta has taken a similar path with its MTIA chips, targeting recommendation models and other AI-driven systems that dominate its infrastructure. In both cases, purpose-built silicon delivers measurable gains in performance and cost efficiency for specific workload classes.

Supply Chain Control and Resilience

Beyond cost and performance, custom silicon strengthens supply chain control. Recent GPU shortages exposed how dependent cloud providers were on external vendors. Limited availability slowed expansion plans and forced operators to absorb higher prices.

By investing in their own chips, hyperscalers gain more influence over manufacturing timelines, capacity planning, and procurement strategies. While fabrication still depends on external foundries, control over chip design and volume commitments creates greater alignment between infrastructure demand and silicon supply.

At hyperscale, even minor supply disruptions can translate into hundreds of millions of dollars in lost opportunity or additional expense. Custom silicon reduces that vulnerability and enables more predictable long-term planning.

Competitive Differentiation

Custom hardware also functions as a strategic differentiator. When a cloud provider can offer AI infrastructure that is both lower cost and more efficient for certain workloads, it strengthens customer loyalty and platform stickiness.

Large enterprises deploying advanced models are highly sensitive to performance, latency, and cost. A provider that delivers optimized hardware for those needs gains a meaningful advantage. Over time, proprietary silicon can become part of a broader ecosystem strategy, attracting partners and customers whose systems are tuned to that infrastructure.

There is a risk of ecosystem fragmentation if workloads become tightly coupled to one provider’s chips. However, advances in open-source frameworks and cross-platform compilers are helping developers maintain portability across architectures. As tooling matures, the benefits of custom silicon are increasingly accessible without locking customers into rigid technical constraints.

Power Efficiency and Sustainability

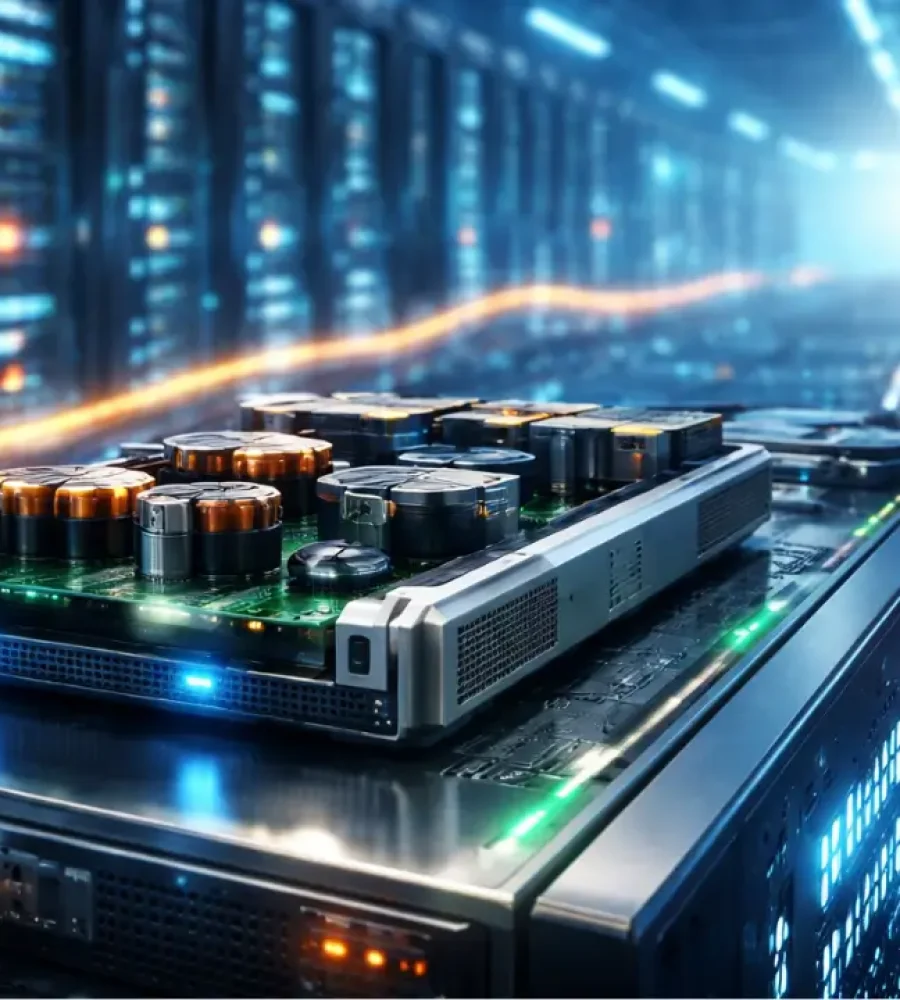

AI workloads are energy-intensive, and as data centers expand, electricity consumption becomes a central operational concern. Energy efficiency affects both cost structure and sustainability commitments.

Custom chips enable hyperscalers to design for performance per watt rather than raw compute alone. By eliminating unused logic and optimizing execution paths, these ASICs reduce power draw and cooling requirements. The result is lower operating expense and a smaller environmental footprint.
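The link between performance per watt and operating expense can be sketched with a few lines of Python. Every number here (a 100 MW IT load, a PUE of 1.2, electricity at $0.08 per kWh, a 20 percent efficiency gain) is a hypothetical assumption, as are the helper names. PUE (power usage effectiveness) is the ratio of total facility power to IT power, so it stands in for cooling and other overhead; treating it as fixed is a simplifying assumption.

```python
# Illustrative figures only: fleet power, PUE, and electricity price are hypothetical.
HOURS_PER_YEAR = 8760


def annual_energy_cost_usd(it_power_mw: float, pue: float, usd_per_kwh: float) -> float:
    """Yearly electricity bill for a facility whose IT load is `it_power_mw`.

    PUE = total facility power / IT power, so the facility draws
    it_power * pue once cooling and other overhead are included.
    """
    facility_kw = it_power_mw * 1_000 * pue
    return facility_kw * HOURS_PER_YEAR * usd_per_kwh


def power_for_same_work(it_power_mw: float, perf_per_watt_gain: float) -> float:
    """IT power needed for the same throughput after a perf-per-watt improvement."""
    return it_power_mw / (1 + perf_per_watt_gain)


BASELINE_IT_MW = 100.0
PUE = 1.2
USD_PER_KWH = 0.08

baseline = annual_energy_cost_usd(BASELINE_IT_MW, PUE, USD_PER_KWH)
improved = annual_energy_cost_usd(
    power_for_same_work(BASELINE_IT_MW, 0.20), PUE, USD_PER_KWH
)
print(
    f"baseline ${baseline:,.0f}/yr, improved ${improved:,.0f}/yr, "
    f"saved ${baseline - improved:,.0f}/yr"
)
```

With these assumptions, a 20 percent improvement in performance per watt lets the same work run on about 83.3 MW instead of 100 MW, cutting the annual electricity bill by roughly $14 million. Because cooling is folded into a constant PUE, the sketch scales cooling savings in proportion to IT power; real facilities may behave differently.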

For companies facing regulatory pressure and corporate sustainability goals, improvements in energy efficiency are not just financial advantages. They are strategic necessities.

A Long Term Bet on the AI Economy

Ultimately, custom silicon represents a long-term strategic investment rather than a short-term cost-reduction tactic. Cloud providers that control their compute stack from hardware through software can innovate more rapidly and optimize more deeply. Tight integration between silicon and software unlocks performance gains that ripple across storage, networking, and higher-level AI services.

The industry is entering an era in which control over compute architecture matters as much as control over software platforms. By investing heavily in custom chips, hyperscalers are positioning themselves to shape the economics of AI infrastructure rather than simply respond to vendor pricing and supply dynamics.

Hyperscalers are investing in custom chips because doing so reshapes cloud economics in their favor.

Custom silicon reduces costs at scale, delivers performance tailored to AI workloads, strengthens supply chain resilience, differentiates cloud platforms, and improves energy efficiency. As these investments mature, hardware design will become as central to competitive strategy as software innovation, defining the next phase of the cloud era.