Carbon Has a Clock: Why Timing Matters in AI Compute

Right at the threshold of tomorrow’s compute ecosystem lies a truth few engineers articulate loudly enough: carbon intensity changes with the clock, and the hour at which an AI workload runs can materially affect its emissions. Electricity grids operate on dynamic physics, shaped by generation mixes that can shift from fossil-heavy baselines at dawn to renewable-rich, low-carbon valleys at midday and back toward conventional sources at dusk. This means that an AI training job at 2 AM in one location could emit far more carbon than the same job scheduled at noon somewhere else, depending on how much wind, solar, and hydro power is feeding each grid during those intervals.
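
To make the arithmetic concrete, here is a minimal Python sketch. Operational emissions are approximately the energy a job draws multiplied by the grid's carbon intensity while it runs; the job size, the window labels, and the two intensity figures below are hypothetical placeholders, not measured grid data.

```python
# Hypothetical figures for illustration only, not measured grid data.
JOB_ENERGY_KWH = 1_000.0  # assumed energy draw of one training job

# Assumed average grid carbon intensity (gCO2e/kWh) during each candidate window.
window_intensity = {
    "02:00 region A": 450.0,
    "12:00 region B": 120.0,
}


def job_emissions_kg(energy_kwh: float, intensity_g_per_kwh: float) -> float:
    """Operational emissions in kgCO2e: energy multiplied by carbon intensity."""
    return energy_kwh * intensity_g_per_kwh / 1000.0


emissions = {w: job_emissions_kg(JOB_ENERGY_KWH, ci) for w, ci in window_intensity.items()}
cleanest = min(emissions, key=emissions.get)
print(emissions)  # {'02:00 region A': 450.0, '12:00 region B': 120.0}
print(cleanest)   # 12:00 region B
```

The same job emits nearly four times as much in the first window, which is the entire lever that temporal scheduling pulls on.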

By thinking of AI compute as an energy decision, not just a computational act, organizations unlock an opportunity to align AI workload timing with low-carbon energy windows and reduce environmental impact without sacrificing performance, provided the workload’s deadlines leave room to shift. Intelligent scheduling systems now ingest real-time carbon intensity signals and grid forecasts to make these temporal decisions automatic, shifting batch jobs and other flexible work toward cleaner periods. Because of this, teams designing AI infrastructure need to treat time not as a static parameter but as a variable that directly affects sustainability. This temporal energy awareness transforms scheduling from a simple queue management task into a climate-aligned strategy that can significantly reduce emissions.

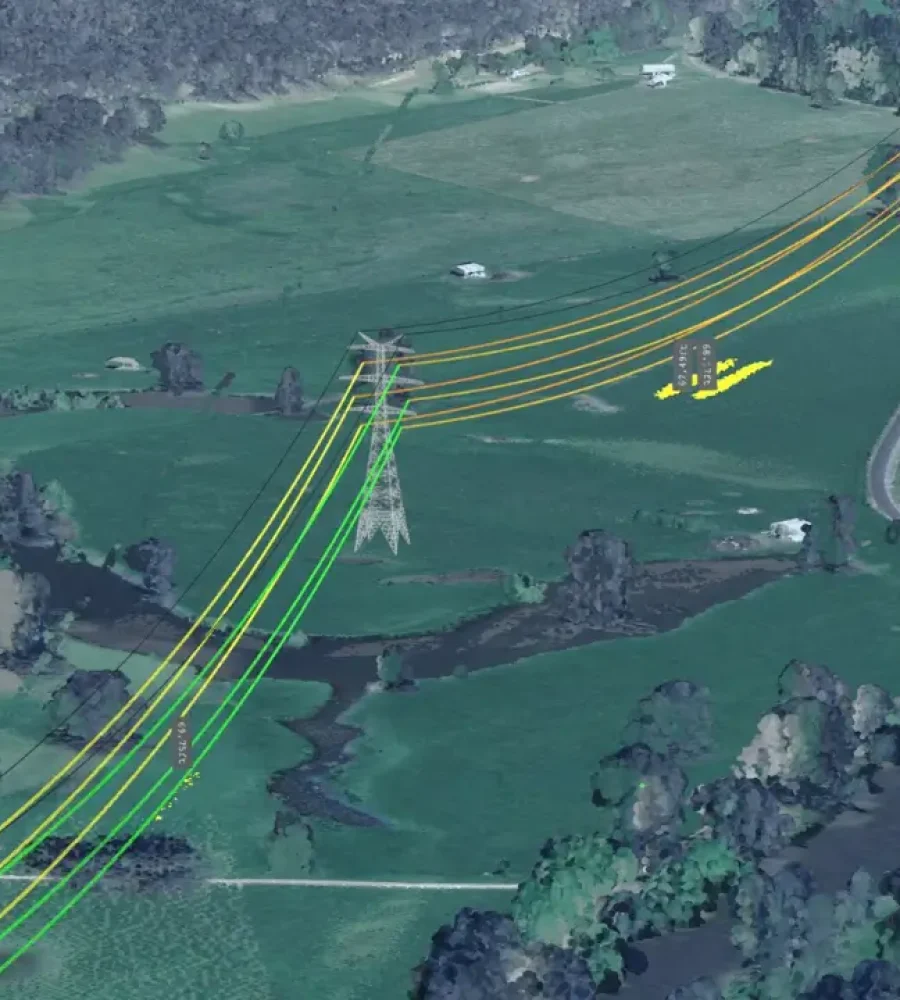

Grid carbon intensity varies not just by hour but by region and season, so 9 AM can bring largely clean power to one grid while another grid, at the same moment, still runs mostly on conventional generation. These shifts depend on local demand cycles, renewable availability forecasts, and transmission constraints, all of which feed into the carbon intensity models that power scheduling decisions. When systems forecast wind ramps or solar troughs, they can move non-urgent AI tasks out of carbon-heavy periods and into lower-carbon slots, capturing cleaner energy without compromising reliability. As a result, AI compute transforms from a rigid consumption event into a flexible energy partner, tied to the rhythms of real-world electricity markets. That shift is why sustainable compute architectures are likely to give temporal coordination the same priority they give latency and throughput today.

Grid Variability and Predictive Carbon Intelligence

Temporal awareness also extends to fault tolerance and predictive optimization. Rather than simply reacting after the fact, advanced orchestration engines can learn patterns in grid carbon signals and anticipate low-emission windows hours or even days in advance. This predictive intelligence enables systems to plan workload placement ahead of time and avoid periods of peak emissions, turning scheduling into a forward-looking capability rather than a reactive fallback. In doing so, compute infrastructure becomes more agile and resilient to external grid volatility while markedly lowering carbon footprints. Research increasingly suggests that flexible-start and pause-and-resume strategies, paired with temporal intelligence, can reduce cumulative emissions for both short and long AI jobs.
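
The two strategies named above can be sketched against an hourly intensity forecast. This is an illustrative Python sketch, assuming a forecast given as a list of hourly values in gCO2e/kWh and a deadline at the end of the forecast horizon; the function names and numbers are hypothetical. Flexible start keeps the job contiguous and searches for the cleanest start; pause-and-resume lets the job run in any hours, relying on checkpoints to carry progress across gaps.

```python
from typing import List, Tuple


def best_flexible_start(forecast: List[float], duration_h: int) -> Tuple[int, float]:
    """Flexible start: find the contiguous `duration_h`-hour block with the lowest
    total forecast intensity. Returns (start_index, mean_intensity)."""
    if not 0 < duration_h <= len(forecast):
        raise ValueError("job must fit inside the forecast horizon")
    window_sum = sum(forecast[:duration_h])
    best_start, best_sum = 0, window_sum
    for start in range(1, len(forecast) - duration_h + 1):
        # Slide the window one hour: add the newest hour, drop the oldest.
        window_sum += forecast[start + duration_h - 1] - forecast[start - 1]
        if window_sum < best_sum:
            best_start, best_sum = start, window_sum
    return best_start, best_sum / duration_h


def pause_and_resume_hours(forecast: List[float], duration_h: int) -> List[int]:
    """Pause-and-resume: run only in the `duration_h` cleanest hours before the
    deadline, returned in chronological order. Assumes checkpointing makes
    interruptions free, which real jobs only approximate."""
    if not 0 < duration_h <= len(forecast):
        raise ValueError("job must fit inside the forecast horizon")
    cleanest = sorted(range(len(forecast)), key=lambda h: forecast[h])[:duration_h]
    return sorted(cleanest)


# Hypothetical hourly forecast in gCO2e/kWh; the deadline is the end of the list.
forecast = [400.0, 380.0, 300.0, 150.0, 120.0, 140.0, 310.0, 90.0, 420.0]
start, mean_ci = best_flexible_start(forecast, 3)
print(start, round(mean_ci, 1))             # 3 136.7
print(pause_and_resume_hours(forecast, 3))  # [4, 5, 7]
```

In this toy forecast, pause-and-resume reaches a lower average (about 117 versus 137 gCO2e/kWh) because it can jump over the 310 gCO2e/kWh hour to use the 90 gCO2e/kWh hour, at the cost of an interruption.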

Temporal carbon strategy isn’t hypothetical; it is becoming core to real-world orchestration engines. AI platforms are integrating APIs that report grid emissions in real time, enabling compute schedulers to dynamically align workloads with cleaner energy valleys. These systems treat carbon impact metrics as first-class scheduling inputs alongside cost and availability, allowing infrastructure to evolve from cost-centric to climate-centric workflows. In doing so, compute nodes stop being blind consumers of energy and instead become active participants in the energy ecosystem.

Temporal scheduling can also bring economic benefits, because low-carbon hours often coincide with lower energy prices in renewable-dominant regions. Where that holds, the dual advantage reduces operational costs and aligns business incentives with environmental outcomes. By leveraging time-based carbon signals in scheduling decisions, organizations can meet performance targets while reducing both emissions and operational expenses.

Finally, scheduling with a clock mindset catalyzes a cultural shift within engineering teams. Awareness of when computing happens, not just how it happens, encourages teams to treat energy sustainability as a design principle. Time-aware scheduling builds energy patterns directly into compute planning and transforms AI infrastructure from a fixed cost center into an adaptable, climate-intelligent system.

Geography as a Sustainability Lever

The world’s energy landscape is uneven, with grids ranging from systems powered predominantly by renewables to those still tethered tightly to fossil fuels. This creates a sustainability lever that goes beyond timing; it puts compute location and routing strategy at the heart of emissions reductions. By shifting workloads across geographic boundaries based on energy cleanliness, organizations can tilt the scales dramatically toward low-carbon compute. In such a model, regions abundant in clean energy, such as the hydro-rich Nordic countries or solar-rich desert zones, become prime hosts for energy-intensive tasks. Conversely, facilities on less clean grids can concentrate on latency-sensitive workloads that cannot be relocated without performance penalties. With geo-aware scheduling, data flows and compute placement become climate-strategic decisions.

Research literature proposes cross-region workload routing based on carbon intensity differentials, and early implementations have demonstrated feasibility in controlled environments. However, large-scale production deployment of fully autonomous geo-carbon routing remains limited and continues to evolve. Practical constraints such as data sovereignty, latency requirements, and inter-region transfer costs influence real-world adoption. Consequently, most current implementations focus on time-shifting flexible workloads within available regions rather than aggressive global relocation. The concept remains technically viable, yet industry-wide standardization is still developing.
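
The practical constraints above translate naturally into filters applied before any carbon comparison. The sketch below, with hypothetical region names, jurisdictions, latencies, and prices, keeps only regions that satisfy data residency, latency, and transfer-cost limits and then picks the lowest-carbon survivor; real routing would also weigh forecast uncertainty and available capacity.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass
class Region:
    name: str
    jurisdiction: str
    carbon_g_per_kwh: float
    latency_ms: float            # round-trip latency from the data's home region
    transfer_cost_per_gb: float  # price of moving the job's data there


def route(regions: Iterable[Region], allowed: Set[str], max_latency_ms: float,
          data_gb: float, transfer_budget: float) -> Optional[Region]:
    """Apply hard constraints first, then choose the lowest-carbon feasible region."""
    feasible = [
        r for r in regions
        if r.jurisdiction in allowed                             # data sovereignty
        and r.latency_ms <= max_latency_ms                       # latency requirement
        and r.transfer_cost_per_gb * data_gb <= transfer_budget  # transfer cost
    ]
    return min(feasible, key=lambda r: r.carbon_g_per_kwh, default=None)


regions = [
    Region("eu-home", "EU", 300.0, 0.0, 0.0),
    Region("eu-north", "EU", 40.0, 30.0, 0.02),
    Region("eu-south", "EU", 180.0, 25.0, 0.01),
    Region("ca-hydro", "CA", 30.0, 90.0, 0.05),
]
print(route(regions, {"EU"}, 50.0, 500.0, 20.0).name)  # eu-north
print(route(regions, {"EU"}, 50.0, 500.0, 6.0).name)   # eu-south
```

The cleanest region, `ca-hydro`, never qualifies because it fails both the residency and latency filters, and a tighter transfer budget pushes the choice from `eu-north` to the less clean `eu-south`. This mirrors why real deployments often settle for modest relocation rather than aggressive global moves.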

Geographic routing must also be tempered with ethical considerations. Simply sending compute to regions with “cleaner” grids doesn’t absolve responsibility for the downstream effects in the destination region. For example, exporting compute demand to a region with renewable capacity could stress local infrastructure, create unintended market distortions, or divert renewable energy from local needs toward global compute demand, exacerbating local grid volatility. Responsible geo-orchestration therefore includes considerations around local energy equity, community impact, and sustainable procurement objectives.

Ethical and Operational Boundaries of Geo-Routing

In practice, multi-region cloud strategies can embed policies that balance sustainability with regional priorities. These might include quotas for local workloads, thresholds for carbon intensity triggers, and coordination with local grid operators to ensure that added compute demand does not hinder local renewable goals. By integrating these principles into routing logic, compute becomes a partner in achieving regional net-zero targets rather than a drain on energy resources.
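
Policies like these can be expressed as explicit guardrails in routing logic. In this hypothetical sketch, a job is relocated only when the carbon saving clears a trigger threshold, the destination’s agreed import quota has headroom, and a flag standing in for coordination with the local grid operator allows it; the field names and the 100 gCO2e/kWh default are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class RegionPolicy:
    import_quota_mw: float         # relocated load the region has agreed to host
    imported_mw: float = 0.0       # relocated load already placed there
    grid_operator_ok: bool = True  # stand-in for coordination with the local operator


def may_relocate(src_ci: float, dst_ci: float, job_mw: float, dst: RegionPolicy,
                 min_saving_g_per_kwh: float = 100.0) -> bool:
    """Relocate only if the carbon saving clears the trigger threshold, the
    destination's import quota has headroom, and the local operator agrees."""
    clears_threshold = src_ci - dst_ci >= min_saving_g_per_kwh
    has_headroom = dst.imported_mw + job_mw <= dst.import_quota_mw
    return clears_threshold and has_headroom and dst.grid_operator_ok


dest = RegionPolicy(import_quota_mw=5.0, imported_mw=4.0)
print(may_relocate(350.0, 40.0, 0.5, dest))  # True
print(may_relocate(350.0, 40.0, 2.0, dest))  # False: quota would be exceeded
print(may_relocate(120.0, 40.0, 0.5, dest))  # False: saving below threshold
```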

Geo-aware scheduling also supports redundancy and resiliency. Delay-tolerant workloads can move across regions with wide variability in grid cleanliness, capturing the lowest-emission windows globally. Meanwhile, latency-sensitive services can still operate closer to end users, provided carbon constraints are met or mitigated. This approach adds a sustainability layer without sacrificing user experience, driving deeper integration of energy intelligence into infrastructure planning.

When combined with timing signals, geography amplifies emissions savings. For example, scheduling an AI training job to run at midday in a solar-rich region during peak renewable output yields significantly lower emissions than running it at the same hour in an area with a carbon-heavy grid. These combined geo-temporal strategies are the frontier of sustainable compute design, enabling organizations to treat location as a dynamic input to workload orchestration, just like memory and CPU.
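
The combined geo-temporal search can be sketched as a brute-force scan over every region and start hour. The two hourly forecasts below are invented for illustration (index 0 stands for 08:00 local time), and the search assumes a contiguous job of fixed duration; the function name is hypothetical.

```python
from typing import Dict, List, Tuple


def best_region_and_start(forecasts: Dict[str, List[float]],
                          duration_h: int) -> Tuple[str, int, float]:
    """Scan every (region, start hour) pair and return the contiguous window
    with the lowest mean forecast intensity as (region, start, mean)."""
    if duration_h <= 0:
        raise ValueError("duration must be positive")
    best = None
    for region, series in forecasts.items():
        for start in range(len(series) - duration_h + 1):
            total = sum(series[start:start + duration_h])
            if best is None or total < best[2]:
                best = (region, start, total)
    if best is None:
        raise ValueError("no forecast is long enough for this job")
    region, start, total = best
    return region, start, total / duration_h


# Invented hourly intensities in gCO2e/kWh; index 0 is 08:00 local time.
forecasts = {
    "solar-rich": [380, 300, 180, 90, 80, 95, 200, 350],
    "coal-heavy": [700, 690, 680, 670, 675, 690, 700, 710],
}
region, start, mean_ci = best_region_and_start(forecasts, 3)
print(region, start, round(mean_ci, 1))  # solar-rich 3 88.3
same_hours_elsewhere = sum(forecasts["coal-heavy"][start:start + 3]) / 3
print(round(same_hours_elsewhere, 1))    # 678.3
```

Running the same three midday hours on the carbon-heavy grid would average roughly 7.7 times the intensity, which is the "same hour, different place" contrast described above.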

The Rise of Carbon-Aware Orchestration Engines

A quiet revolution now runs beneath the surface of AI infrastructure, where orchestration engines interpret energy signals with the same precision once reserved for performance telemetry. Carbon-aware schedulers no longer treat carbon as an externality; they embed it directly into the placement algorithms that decide when and where workloads execute. These engines ingest live grid carbon intensity feeds, renewable generation forecasts, and workload metadata to construct a dynamic emissions profile before compute even begins. Instead of reacting after energy is consumed, they proactively shape execution paths around cleaner energy windows. As a result, orchestration becomes an act of environmental alignment rather than simple queue management. This shift redefines AI platforms as adaptive systems that respond to energy ecosystems in real time.

Under the hood, carbon-aware orchestration engines rely on layered intelligence that combines predictive modeling with policy enforcement. Forecasting modules analyze renewable output variability, while optimization layers evaluate workload flexibility and service-level constraints. These components converge into a decision engine that ranks execution pathways according to emissions exposure, energy pricing, and performance sensitivity. Unlike static scheduling models, these systems recalculate optimal routes continuously as grid conditions evolve. Consequently, AI workloads adapt fluidly to changes in carbon intensity without manual intervention. Such automation transforms sustainability from a reporting function into an operational constant.

Present implementations of carbon-aware scheduling primarily apply to workloads with temporal flexibility, including batch processing, retraining cycles, and non-latency-critical tasks. Real-time inference services often operate under stricter latency constraints that limit relocation or delay options. Therefore, carbon-aware orchestration currently delivers the strongest impact where workload elasticity exists. Ongoing research continues to explore how broader classes of AI applications may incorporate similar flexibility without compromising performance guarantees.
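
One simple way to express workload elasticity is slack: the time between now and a job’s deadline, minus its runtime. The sketch below, in which the `Job` fields, the job names, and the two-hour cut-off are hypothetical, marks jobs as shiftable only when their slack is large enough to be worth moving.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    runtime_h: float   # expected runtime in hours
    deadline_h: float  # hours from now by which the job must finish


def slack_hours(job: Job) -> float:
    """How long the job could be delayed and still meet its deadline."""
    return job.deadline_h - job.runtime_h


def is_shiftable(job: Job, min_slack_h: float = 2.0) -> bool:
    """Hypothetical rule: only jobs with meaningful slack are worth shifting."""
    return slack_hours(job) >= min_slack_h


jobs = [
    Job("nightly-retrain", runtime_h=6.0, deadline_h=24.0),
    Job("weekly-report", runtime_h=2.0, deadline_h=3.0),
    Job("chat-inference", runtime_h=0.0005, deadline_h=0.001),
]
shiftable = [j.name for j in jobs if is_shiftable(j)]
print(shiftable)  # ['nightly-retrain']
```

Real-time inference ends up with near-zero slack by construction, which is why it falls outside the scope of today’s carbon-aware scheduling.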

Automation, Transparency, and Carbon Signal Integration

Carbon-aware orchestration engines also introduce transparency into AI infrastructure. Dashboards now expose real-time carbon metrics alongside utilization and latency indicators, offering visibility into the environmental footprint of each job. This transparency encourages data-driven refinement of scheduling policies, ensuring alignment with broader sustainability objectives. Rather than guessing the carbon impact of compute, teams observe and adjust with measurable precision. Hence, orchestration evolves into an accountable process grounded in continuous feedback. That accountability reinforces climate alignment as a structural attribute of AI systems.
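
Per-job visibility depends on attributing emissions interval by interval rather than applying one daily average. This Python sketch assumes metering samples of the form (job, kWh consumed in the interval, grid intensity in that interval); the job names and values are hypothetical.

```python
from typing import Dict, List, Tuple

# (job_id, energy drawn in the interval in kWh, grid intensity in that interval in gCO2e/kWh)
Sample = Tuple[str, float, float]


def per_job_emissions_kg(samples: List[Sample]) -> Dict[str, float]:
    """Sum energy x intensity interval by interval, so a job that ran in cleaner
    intervals is credited for it instead of sharing a daily average."""
    totals: Dict[str, float] = {}
    for job_id, kwh, intensity in samples:
        totals[job_id] = totals.get(job_id, 0.0) + kwh * intensity / 1000.0
    return totals


samples: List[Sample] = [
    ("train-a", 50.0, 400.0),  # first metering interval
    ("train-a", 50.0, 100.0),  # a later, cleaner interval
    ("etl-b", 10.0, 300.0),
]
result = per_job_emissions_kg(samples)
print(result)  # {'train-a': 25.0, 'etl-b': 3.0}
```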

Moreover, orchestration engines integrate with market signals from renewable energy providers. When wind generation surges or solar farms exceed baseline output, schedulers can seize those windows and accelerate eligible workloads. Conversely, when grids experience carbon-heavy spikes, systems can defer non-urgent jobs and maintain low-emission baselines. Through this bidirectional communication, compute infrastructure synchronizes with energy markets. The infrastructure therefore becomes a responsive participant in decarbonization rather than a passive energy sink. Such synergy underscores the potential of intelligent scheduling to amplify renewable utilization.
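
The accelerate-or-defer behaviour can be sketched as a small controller. The version below is an assumption rather than a description of any product: it uses two thresholds (hysteresis) so that the fleet does not flap between modes when intensity hovers around a single cut-off, and the 150 and 350 gCO2e/kWh defaults are placeholders.

```python
from enum import Enum
from typing import List


class Action(Enum):
    ACCELERATE = "accelerate"  # scale up eligible flexible work
    DEFER = "defer"            # pause or queue non-urgent work
    HOLD = "hold"              # initial state before any threshold is crossed


class CarbonController:
    """Two thresholds (hysteresis) keep the mode stable while intensity hovers
    between them, instead of flapping around a single cut-off."""

    def __init__(self, low: float = 150.0, high: float = 350.0) -> None:
        if low >= high:
            raise ValueError("low threshold must be below high threshold")
        self.low, self.high = low, high
        self.mode = Action.HOLD

    def update(self, intensity: float) -> Action:
        if intensity <= self.low:
            self.mode = Action.ACCELERATE
        elif intensity >= self.high:
            self.mode = Action.DEFER
        # Between the thresholds the previous mode is kept.
        return self.mode


controller = CarbonController()
readings: List[float] = [400, 300, 200, 140, 200, 300, 360]
modes = [controller.update(r).value for r in readings]
print(modes)
# ['defer', 'defer', 'defer', 'accelerate', 'accelerate', 'accelerate', 'defer']
```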

As orchestration engines mature, they increasingly adopt machine learning to refine their own decision heuristics. Feedback loops analyze past scheduling outcomes and improve predictive accuracy for future cycles. This self-learning capability can make carbon-aware compute more efficient over time. Instead of relying solely on fixed thresholds, engines evolve as grid dynamics change. Consequently, sustainable AI infrastructure gains resilience against volatility in renewable supply. The orchestration layer thus emerges as the decisive force shaping ultra-low-emission compute.

Flexibility by Design: Making Workloads Delay-Tolerant

Flexibility stands as the architectural prerequisite for meaningful carbon alignment because rigid workloads cannot adapt to cleaner windows or geographic shifts. Designing AI systems with pause-resume functionality, modular task segmentation, and checkpointing capabilities enables workloads to migrate across time and space without losing integrity. This architectural shift reframes sustainability as a feature embedded at the code and infrastructure level. Instead of forcing the grid to accommodate compute, flexible workloads conform to energy conditions. Through this inversion of control, emissions reduction becomes feasible at scale. The design philosophy prioritizes adaptability as much as performance.

Checkpointing techniques play a central role in enabling delay tolerance. By periodically saving intermediate states during training processes, systems ensure that tasks can pause during carbon-intensive periods and resume when cleaner energy becomes available. This method preserves computational progress while optimizing environmental exposure. Consequently, long-running AI models gain the freedom to align with renewable peaks without sacrificing reliability. Such adaptability illustrates how architectural decisions directly influence sustainability outcomes. The infrastructure thus supports environmental intelligence at the deepest operational layers.
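
A minimal simulation of pause-and-resume with checkpoints follows. It assumes hourly intensity values and a JSON file holding only a step counter; a real training job would also persist model weights and optimizer state through its framework’s own checkpoint utilities, so treat the file format and the function name as hypothetical.

```python
import json
import os
import tempfile
from typing import List


def train_with_carbon_pauses(intensity_by_hour: List[float], steps_needed: int,
                             steps_per_hour: int, threshold: float,
                             ckpt_path: str) -> List[int]:
    """Simulated training loop. Each hour it reloads the last checkpoint; in
    carbon-heavy hours it stays paused, otherwise it trains and saves progress.
    Returns the hours in which training actually ran."""
    ran_in: List[int] = []
    for hour, intensity in enumerate(intensity_by_hour):
        state = {"step": 0}
        if os.path.exists(ckpt_path):
            with open(ckpt_path) as f:
                state = json.load(f)  # resume from saved progress
        if state["step"] >= steps_needed:
            break                     # training already finished
        if intensity > threshold:
            continue                  # carbon-heavy hour: remain paused
        state["step"] = min(steps_needed, state["step"] + steps_per_hour)
        with open(ckpt_path, "w") as f:
            json.dump(state, f)       # checkpoint after every active hour
        ran_in.append(hour)
    return ran_in


with tempfile.TemporaryDirectory() as tmp:
    hours = train_with_carbon_pauses(
        [420.0, 380.0, 150.0, 130.0, 390.0, 120.0, 110.0],
        steps_needed=300, steps_per_hour=100, threshold=200.0,
        ckpt_path=os.path.join(tmp, "ckpt.json"),
    )
print(hours)  # [2, 3, 5]
```

Progress survives the paused hours because it lives in the checkpoint, not in the process; hour 6 is never used because the job has already finished.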

Batch processing also benefits from flexibility by design. Non-urgent analytics, retraining cycles, and background inference jobs can queue intelligently until carbon intensity drops. This delay does not degrade user experience because it applies only to workloads without strict latency requirements. By separating real-time and delay-tolerant tasks, organizations unlock a powerful emissions lever. Flexibility therefore acts as a structural enabler rather than an afterthought. It aligns computational ambition with environmental responsibility.
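
Queueing until carbon intensity drops needs a safeguard so that deferral never becomes a missed deadline. In this sketch, assuming each batch job carries a latest start time, a heap orders jobs by that time; when the grid is clean everything is released, and when it is not, only jobs that can wait no longer are released. Job names, times, and the threshold are illustrative.

```python
import heapq
from typing import List, Tuple


def release_jobs(queue: List[Tuple[float, str]], now_h: float,
                 intensity: float, threshold: float) -> List[str]:
    """`queue` is a heap of (latest_start_hour, job_name). In clean hours every
    queued job is released; otherwise only jobs whose latest start has arrived."""
    released: List[str] = []
    while queue and (intensity <= threshold or queue[0][0] <= now_h):
        _, name = heapq.heappop(queue)
        released.append(name)
    return released


queue: List[Tuple[float, str]] = []
for job in [(6.0, "retrain"), (1.0, "analytics"), (12.0, "embeddings-refresh")]:
    heapq.heappush(queue, job)

first = release_jobs(queue, now_h=1.0, intensity=420.0, threshold=200.0)
second = release_jobs(queue, now_h=2.0, intensity=140.0, threshold=200.0)
print(first)   # ['analytics']
print(second)  # ['retrain', 'embeddings-refresh']
```

A production queue would also cap how much work is released at once, since a clean hour does not create unlimited capacity.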

Architectural Portability and Adaptive Compute Patterns

Containerization and virtualization technologies further enhance delay tolerance. These tools encapsulate workloads into portable units that can move between data centres and cloud regions with far less friction, although large datasets still carry transfer costs. Portability reduces friction in relocating tasks to cleaner grids or renewable-rich windows. As a result, architectural flexibility amplifies both temporal and geographic sustainability strategies. Infrastructure evolves into a fluid ecosystem where compute flows toward cleaner energy. This fluidity defines the foundation of climate-aligned AI operations.

Designing for flexibility also encourages developers to rethink AI model training strategies. Instead of monolithic training runs that demand uninterrupted power, segmented training sessions align more naturally with renewable cycles. This segmentation supports iterative progress while maintaining emissions awareness. Such strategies reflect a broader cultural transition toward environmental mindfulness in AI system design. By embedding flexibility at inception, organizations avoid retrofitting sustainability later. That proactive stance yields durable environmental benefits.

Ultimately, flexibility by design transforms the narrative around AI sustainability. Compute no longer appears as an uncontrollable force but as a manageable, adaptable process. By granting workloads temporal and spatial mobility, infrastructure gains the agility necessary for ultra-low-emission performance. This adaptability ensures that climate alignment remains feasible even as AI demand scales. The architectural foundation thus strengthens the viability of carbon-intelligent scheduling. Flexibility becomes the cornerstone of sustainable compute evolution.

The Infrastructure Layer: Data Centres as Active Energy Participants

Data centres increasingly function as intelligent nodes within energy ecosystems rather than isolated consumption hubs. Through advanced energy management systems, facilities can respond to grid conditions in near real time and adjust compute loads accordingly. This responsiveness enables alignment between energy supply fluctuations and workload demand. Instead of drawing power indiscriminately, data centres can now modulate operations to support grid stability. This capability positions infrastructure as an ally in decarbonization efforts. The shift reframes compute facilities as dynamic energy participants.

Demand response programs further illustrate this transformation. When grids signal peak stress or high carbon intensity, participating facilities reduce or shift loads to maintain equilibrium. Conversely, when renewable generation surges, data centres increase activity to absorb surplus clean energy. Such bidirectional coordination integrates compute infrastructure into the operational rhythm of power systems. This collaboration strengthens grid resilience while lowering emissions. Infrastructure thus transcends passive consumption and enters a cooperative energy role.

Onsite renewable installations and battery storage systems enhance this responsiveness. Solar arrays, wind turbines, and energy storage units empower facilities to manage their own carbon exposure and smooth consumption patterns. When combined with carbon-aware orchestration engines, onsite assets enable localized optimization strategies. Facilities can store renewable energy during peak generation and deploy it strategically during compute surges. This integration elevates sustainability from a regional tactic to a facility-level capability. The infrastructure layer thereby reinforces systemic decarbonization.
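
The store-then-deploy pattern can be illustrated with a deliberately simple, rule-based battery dispatch. As a simplification, the sketch charges from grid power in low-carbon hours (standing in for storing renewable output) and discharges in carbon-heavy hours rather than specifically during compute surges. The thresholds, capacity, and load profile are hypothetical, and the sketch ignores round-trip losses, charge-rate limits, and battery degradation, all of which matter in practice.

```python
from typing import List


def dispatch_battery(load_kwh: List[float], intensity: List[float], capacity_kwh: float,
                     charge_below: float, discharge_above: float) -> float:
    """Rule-based hourly dispatch: fill the battery from the grid in clean hours and
    serve load from it in carbon-heavy hours. Returns grid emissions in kgCO2e."""
    stored = 0.0
    grams = 0.0
    for load, ci in zip(load_kwh, intensity):
        grid_draw = load
        if ci <= charge_below:
            charge = capacity_kwh - stored  # top up while the grid is clean
            stored += charge
            grid_draw += charge
        elif ci >= discharge_above:
            discharge = min(stored, load)   # displace carbon-heavy grid energy
            stored -= discharge
            grid_draw -= discharge
        grams += grid_draw * ci
    return grams / 1000.0


load_profile = [100.0, 100.0, 100.0, 100.0]
intensity_profile = [100.0, 100.0, 500.0, 500.0]
with_battery = dispatch_battery(load_profile, intensity_profile, 150.0, 150.0, 400.0)
without_battery = dispatch_battery(load_profile, intensity_profile, 0.0, 150.0, 400.0)
print(with_battery, without_battery)  # 60.0 120.0
```

In this toy profile, 150 kWh of storage cuts grid emissions from 120 kg to 60 kg even though total energy drawn is unchanged; only its timing moves.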

Grid Responsiveness, Energy Storage, and Market Integration

Cooling systems also contribute to active participation. Advanced liquid cooling and thermal management technologies reduce energy waste and allow dynamic scaling according to workload intensity. When compute demand dips, cooling demand contracts with it, conserving energy. This adaptability aligns facility operations with the flexible scheduling paradigms discussed earlier. As a result, the entire stack from silicon to power feed moves in coordinated harmony with carbon signals. The data centre becomes an orchestrated instrument within the energy symphony.

Market integration completes this active participation model. Facilities engage with renewable procurement contracts, real-time pricing mechanisms, and carbon accounting frameworks. These engagements help ensure that compute demand stimulates clean energy growth rather than fossil dependency. Infrastructure strategy therefore merges technical orchestration with energy market awareness. This convergence strengthens the credibility of ultra-low-emission compute narratives. The infrastructure layer anchors sustainability within tangible operational practices.

As data centres adopt these roles, they embody the philosophy that compute must coexist harmoniously with planetary systems. Responsiveness, adaptability, and intelligence converge to redefine what infrastructure represents. Rather than acting as static demand centres, facilities evolve into climate-aware platforms. This transformation underpins the broader movement toward intentional compute design. Infrastructure thus stands as a foundational pillar of sustainable AI evolution.

Regional Momentum and Market Leadership: The Nordic Acceleration Story

Regions with high renewable penetration, including countries such as Sweden and Denmark, are often referenced in industry discussions about low-carbon data centre siting. Their electricity systems include substantial contributions from hydro and wind resources, which can create favourable conditions for lower-emission compute. While no independent ranking confirms a singular regional leadership in carbon-aware orchestration, renewable-rich grids naturally present opportunities for aligning flexible workloads with cleaner energy supply. As renewable generation expands globally, similar opportunities may emerge in multiple geographies rather than being confined to a single region.

Within this landscape, companies such as Google have publicly documented investments in carbon-aware computing strategies. The company has described efforts to align data centre operations with renewable energy procurement across regions and, rather than relying solely on annual renewable accounting, has outlined ambitions to match electricity consumption with carbon-free energy on an hourly basis. This approach signals a transition from broad sustainability pledges toward more granular operational alignment. Publicly available documentation shows that carbon intensity signals increasingly influence workload placement decisions in specific contexts. These disclosures position carbon-aware scheduling as an emerging operational capability rather than a theoretical concept.
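
The difference between annual and hourly matching is easy to see with a toy calculation. This is a simplified sketch of the idea, not Google’s published methodology: it counts clean supply only up to the load in the same hour, and the four-hour profile is invented.

```python
from typing import List


def annual_match(load_mwh: List[float], cfe_mwh: List[float]) -> float:
    """Volumetric matching: total clean supply over total load, capped at 100%."""
    return min(1.0, sum(cfe_mwh) / sum(load_mwh))


def hourly_match(load_mwh: List[float], cfe_mwh: List[float]) -> float:
    """Hourly matching: clean supply counts only up to the load in the same hour,
    so a midday surplus cannot offset an evening shortfall."""
    matched = sum(min(load, cfe) for load, cfe in zip(load_mwh, cfe_mwh))
    return matched / sum(load_mwh)


load_mwh = [10.0, 10.0, 10.0, 10.0]  # flat data centre demand
cfe_mwh = [20.0, 20.0, 0.0, 0.0]     # clean supply concentrated in two hours
print(annual_match(load_mwh, cfe_mwh), hourly_match(load_mwh, cfe_mwh))  # 1.0 0.5
```

On paper the site is "100% renewable", yet only half of its consumption is actually covered hour by hour, which is exactly the gap that shifting flexible load toward clean hours can close.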

Sundar Pichai, CEO of Google, has publicly emphasized the ambition to operate on carbon-free energy around the clock, underscoring that climate responsibility must integrate directly into infrastructure planning rather than remain an offset mechanism. His commentary highlights a transition from compensatory strategies toward real-time decarbonization within compute operations. This perspective reinforces the philosophy that intelligent scheduling can synchronize AI demand with renewable supply more precisely than static energy contracts. By articulating a 24/7 carbon-free vision, leadership signals that orchestration intelligence serves as a strategic enabler of environmental commitments. Delivering on that vision requires granular carbon measurement and adaptive workload routing. Executive-level clarity of this kind can help accelerate investment in carbon-aware orchestration research and infrastructure refinement.

Corporate Carbon Matching Strategies in Practice

Strategic deployment in Nordic regions amplifies this vision because abundant hydroelectric resources provide a stable backbone for low-emission compute. AI training jobs that require significant energy inputs can align with predictable renewable baselines, while flexible scheduling absorbs variability from wind generation cycles. The synergy between grid cleanliness and orchestration intelligence demonstrates how geography and timing coalesce into a unified sustainability model. Through continuous optimization, workloads distribute across facilities in ways that minimize exposure to fossil-intensive intervals. Such deployment patterns illustrate that infrastructure design, renewable procurement, and scheduling intelligence must operate cohesively. The Nordic acceleration story therefore embodies a template for other regions pursuing climate-aligned compute growth.

Beyond energy procurement, this scaling strategy influences broader ecosystem development. Renewable developers gain stable demand signals from compute facilities, encouraging further clean energy expansion. Simultaneously, advanced grid forecasting tools evolve to support carbon-intelligent routing decisions. This mutual reinforcement strengthens both digital and energy infrastructure, forming a virtuous cycle of decarbonization. The largest operators in this domain thus shape not only their own emissions profiles but also regional sustainability trajectories. In this unfolding narrative, orchestration intelligence emerges as both technological innovation and climate catalyst.

While the Nordic region features prominently in these discussions, similar momentum appears in regions with expanding renewable portfolios, such as parts of Canada and Spain. These areas combine renewable abundance with modern data centre infrastructure, creating favourable conditions for adopting carbon-aware scheduling practices. As renewable penetration deepens globally, more regions will likely replicate this model. The regional storyline thus illustrates how environmental conditions, corporate strategy, and orchestration technology intersect. This convergence defines the frontier of sustainable AI compute.

From Efficient to Intentional: The Future of Climate-Aligned Compute

A profound shift now unfolds within AI infrastructure, where sustainability no longer depends solely on hardware efficiency gains. Compute performance continues to advance, yet emissions reduction increasingly hinges on intelligent coordination rather than silicon optimization alone. Timing strategies, geographic routing, orchestration engines, and flexible workload design collectively redefine what responsible compute entails. Each layer of this stack contributes to a cohesive emissions reduction framework. Efficiency remains necessary, yet intentional orchestration delivers transformative impact. The future therefore rests on harmonizing computation with the rhythms of renewable energy systems.

Intentional compute requires that carbon considerations enter decision matrices at every operational tier. Scheduling algorithms evaluate energy cleanliness alongside latency and cost, while infrastructure planning incorporates renewable proximity and grid transparency. This integrated mindset dissolves the historical separation between energy procurement and digital operations. Instead of treating sustainability as an external compliance objective, organizations embed it within system logic. Such integration ensures that environmental performance evolves dynamically rather than through sporadic reporting cycles. The transformation signifies a maturation of AI infrastructure governance.

Moreover, climate-aligned compute reshapes how innovation unfolds. Researchers and engineers design AI models with checkpointing, segmentation, and portability in mind from the outset. Orchestration platforms continuously refine decision heuristics based on grid signals and renewable forecasts. Infrastructure facilities synchronize cooling, storage, and demand response systems with workload schedules. This multilayered coordination transforms sustainability into a structural attribute of digital ecosystems. Intentionality therefore permeates architecture, software, and energy strategy alike.

Designing for a Carbon-Intelligent AI Era

The implications extend beyond emissions metrics because carbon-intelligent scheduling enhances resilience against energy volatility. By aligning compute with renewable peaks and grid stability signals, systems reduce vulnerability to fossil fuel price fluctuations and regulatory uncertainty. This resilience strengthens long-term operational continuity while advancing decarbonization objectives. Consequently, intentional compute delivers both environmental and strategic dividends. The alignment between climate goals and infrastructure stability becomes increasingly evident. Such synergy underscores the viability of ultra-low-emission AI expansion.

As renewable energy penetration grows worldwide, opportunities for carbon-aware orchestration will expand in parallel. Regions investing in wind, solar, and hydro infrastructure create fertile ground for sustainable workload routing. AI platforms capable of interpreting these signals will capitalize on cleaner energy windows with increasing precision. Over time, this convergence could redefine competitive differentiation within cloud and AI services. Environmental intelligence may become as critical as computational throughput. The future of AI therefore intertwines inseparably with the future of energy systems.

Ultimately, the journey from efficient to intentional compute reflects a broader philosophical evolution within digital infrastructure. Rather than merely consuming energy more efficiently, AI systems now aspire to synchronize actively with planetary rhythms. Orchestration intelligence, flexible architectures, and responsive data centres collectively enable this synchronization. Each decision about when and where workloads execute carries environmental consequence, and intelligent scheduling transforms that consequence into opportunity. Sustainable compute thus emerges not as a peripheral aspiration but as a defining characteristic of next-generation AI systems. The future of climate-aligned compute will depend less on faster processors and more on smarter coordination with the energy landscapes that power them.