The era of AI data centers has transformed thermal management into a critical design decision. Engineers and operators now weigh cold plates against immersion cooling to manage rising heat output and maintain efficiency. Power densities are increasing rapidly, and traditional air cooling cannot keep up. Teams must adopt advanced liquid cooling methods that ensure performance, reliability, and scalability across modern AI workloads.

Cold plates and immersion cooling offer two fundamentally different solutions to these thermal challenges. Engineers deploy cold plates to extend familiar air-assisted liquid systems, while immersion requires teams to redesign servers, maintenance workflows, and facility layouts from the ground up. Industry discussions highlight operational control, risk perception, and team adaptability, showing clearly how human factors influence technology adoption.

Cooling Is No Longer a Supporting System

In the earliest data centers, air cooling with raised floors and circulating fans was sufficient to handle distributed workloads with modest heat output. Those systems worked well for web hosting and general enterprise computing. But as AI accelerated into mainstream enterprise and hyperscale deployment, the relationship between compute density and cooling demand became non‑linear. Simply adding more fans or larger air handlers could not compensate for the near‑vertical increase in thermal output per rack. At 40 kW per rack and climbing, air cooling alone stopped being practical. Liquid‑based solutions, from conventional cold plates to immersion, are now mainstream considerations in every greenfield and retrofit project aiming to support dense AI clusters.
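
A rough steady-state heat balance shows why adding fans stops working at this density. The sketch below applies Q = ρ · V̇ · c_p · ΔT to a 40 kW rack and compares the volumetric flow of air and of water needed to carry that heat away. The fluid properties are textbook approximations near room temperature, and the 10 K coolant temperature rise is an assumption chosen for illustration, not a figure from any specific facility.

```python
# Rough steady-state heat balance: Q = rho * V_dot * c_p * delta_T
# All property values are room-temperature approximations (assumptions).

RACK_HEAT_W = 40_000.0   # 40 kW rack, the density cited above
DELTA_T_K = 10.0         # assumed coolant temperature rise across the rack

# (density in kg/m^3, specific heat in J/(kg*K))
FLUIDS = {
    "air": (1.2, 1005.0),
    "water": (997.0, 4180.0),
}


def required_flow_m3_per_s(heat_w: float, density: float,
                           specific_heat: float, delta_t: float) -> float:
    """Volumetric flow needed to absorb heat_w with a delta_t temperature rise."""
    return heat_w / (density * specific_heat * delta_t)


flows = {
    name: required_flow_m3_per_s(RACK_HEAT_W, rho, cp, DELTA_T_K)
    for name, (rho, cp) in FLUIDS.items()
}

for name, flow in flows.items():
    # 1 m^3/s = 60,000 L/min
    print(f"{name:>5}: {flow:.5f} m^3/s ({flow * 60_000:.0f} L/min)")

print(f"air needs ~{flows['air'] / flows['water']:.0f}x the volume flow of water")
```

Under these assumptions the air path needs roughly 3.3 m³/s while the water path needs just under 1 L/s, a gap of more than three orders of magnitude in volume flow. Real designs differ in temperature rise and coolant chemistry, so the numbers are indicative only.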

Data center thermal design is no longer delegated to mechanical engineers after the fact; it is considered during the earliest architectural decisions. Facility layout, pod spacing, containment design, and even the physics of power distribution tie directly into how heat will be extracted and where waste heat is discharged. These functions now sit alongside networking and storage on the whiteboard when planning new builds or expansions. That shift reflects not a preference but a necessity driven by AI workloads and sustainability mandates. As cooling strategies dictate the physical configuration and the total cost of ownership, leaders have realized that thermal management defines an AI data center just as much as compute capacity.

Two Philosophies, One Problem

Despite a shared objective (removing heat effectively from dense compute), cold plate and immersion cooling stem from fundamentally distinct design philosophies. Cold plates route coolant through channels in metal blocks that clamp onto CPUs, GPUs, and other high‑heat components. Here, engineers retain precise control at the component level: they know exactly where each joule of thermal energy is absorbed and how it exits the hardware. Cold plate cooling can be integrated with existing infrastructure, making it an appealing evolution of traditional liquid systems rather than a complete reinvention.

By contrast, immersion submerges entire servers or racks in dielectric coolant baths, allowing uniform thermal interaction at every surface within the enclosure. This holistic approach treats cooling as an environmental property rather than a point‑specific attachment. Instead of directing coolant only to problem spots, immersion cooling envelops all components, enabling simpler thermal paths and often reducing the need for secondary airflow. The mentality here is systemic rather than surgical, as design considerations extend beyond chip boundaries to include memory, power supplies, and entire motherboard layouts.

These divergent philosophies reflect deeper engineering mindsets. Cold plate advocates emphasize modular control, compatibility with existing server designs, and component‑level optimization. Immersion champions focus on simplicity at scale, uniformity in thermal extraction, and potential reductions in energy usage and water consumption. Each approach brings unique trade‑offs that ripple through operations, supply chains, and facilities strategy.

Cold Plates: Extending the Familiar

For data center operators and engineers raised on air and rack‑level liquid cooling, cold plates represent a familiar progression, not a paradigm shift. Cold plates operate on the same principles as earlier liquid cooling but increase contact area and move heat transfer directly to the source. This incremental improvement makes the technology easier to integrate with existing hardware and avoids the culture shock that immersion systems can introduce.

Many legacy facilities are optimized around a chilled water loop feeding heat exchangers and coolant distribution units (CDUs). Cold plate systems leverage this existing topology, connecting to the same loops with limited changes to plant infrastructure. Schneider Electric, for example, positions direct‑to‑chip cold plates as a scalable step in liquid cooling that supports high‑performance workloads while minimizing disruption to existing data center design norms.

This compatibility allows teams to repurpose skills developed over years managing chilled water, CDUs, and coolant flows. Engineers retain familiar monitoring and control frameworks while treating cold plates as an upgrade rather than a replacement. As a result, organizations can maintain continuity in operations and planning while addressing rising thermal requirements head-on.

Cold plates also present a psychological comfort for operators: heat is extracted from defined points, not blanketed across an entire system. This alignment with established mental models reduces perceived risk and eases buy‑in during technology decisions.

Immersion Cooling: Rethinking the Entire Stack

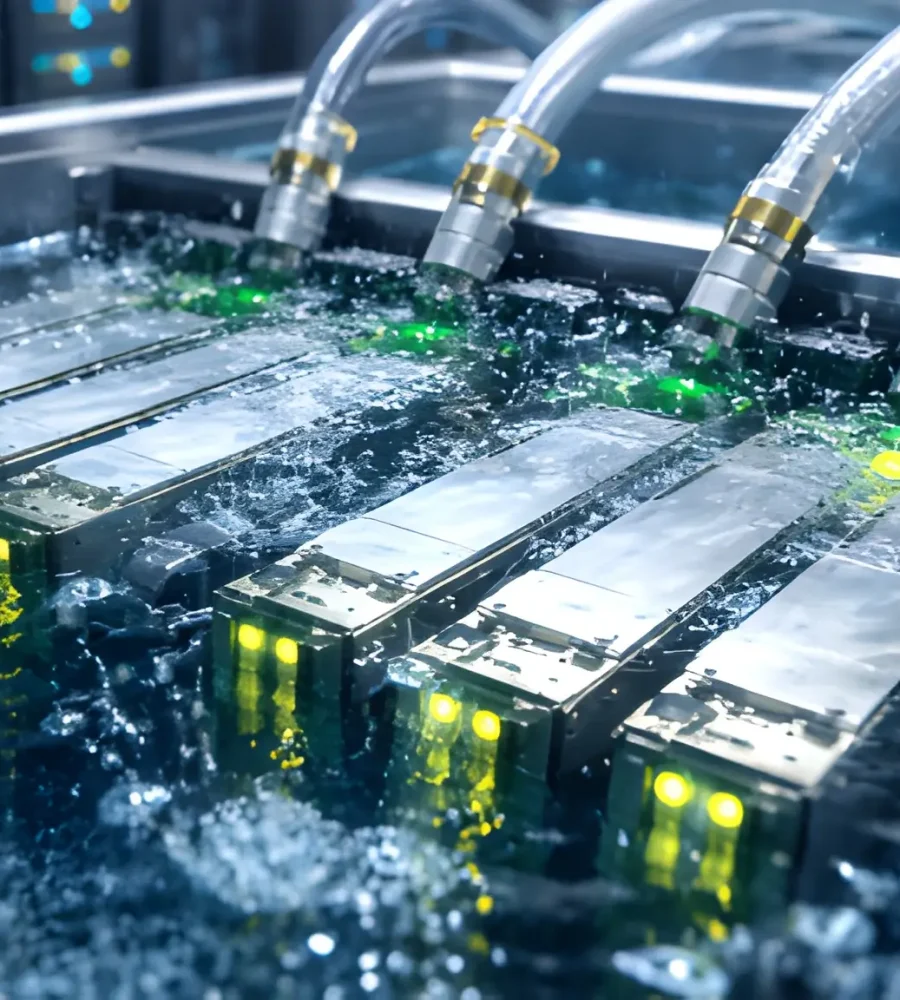

Immersion cooling doesn’t just change cooling hardware; it changes the interaction between humans and machines. In an immersion environment, servers rest within tanks filled with dielectric fluid. Traditional hot aisles and cold aisles lose relevance as thermal convection takes place uniformly in fluid rather than air. This shift disrupts decades of facility planning and prompts a rethink of data center layout, access strategies, and service workflows.

More importantly, immersion impacts daily operational behaviors. In traditional environments, swapping a server, diagnosing an airflow issue, or replacing components doesn’t carry the same procedural overhead as in an immersion tank, where fluid displacement and handling protocols must be managed meticulously. Safety and fluid cleanliness become key vectors of operational resilience, requiring training and tooling that differ significantly from the norms in rack‑based air or liquid systems.

By reframing the server as a fluid‑immersed entity rather than a machine surrounded by air, immersion also compels hardware vendors to consider entire chassis and board designs differently. Connectors, ports, and materials must accommodate dielectric contact, and service ports may need to be positioned to avoid fluid traps, all of which reverberate through manufacturing and service supply chains.

In many ways, immersion blurs the distinction between compute and cooling: it treats the server environment as a thermodynamic system rather than a stack of discrete parts with heat sinks attached. This systemic view is powerful, yet it demands willingness to shift long‑held design principles and operational routines.

Control Versus Surrender

Within the engineering corridors of major AI compute campuses, a subtle but powerful debate rages: whether to control heat with targeted precision or surrender the entire system to its cooling environment. Proponents of cold plates often speak in terms of predictability: coolant channels bond tightly to GPU and CPU heat sources, enabling measurable extraction of thermal energy exactly where it’s generated. This precision appeals to operators conditioned on decades of managing point‑specific cooling devices and fans, where each watt of heat has a known path from silicon to coolant. Cold plate systems, in this mindset, are tangible extensions of familiar thermal design practices rather than radical departures from them.

By contrast, immersion cooling requires operators to think holistically rather than surgically. Rather than dissipating heat from individual components, immersion systems envelop an entire server in dielectric fluid that conducts and convects heat away uniformly. At first glance, this appears to cede the fine‑grained control that seasoned data center teams are accustomed to, provoking an instinctive resistance rooted in “what I can see and measure, I can manage.” The shift to treating servers as thermodynamic systems rather than assemblies of parts challenges cognitive defaults ingrained over years of traditional mechanical cooling strategies.

The psychological divide

This psychological divide often determines which teams feel comfortable embracing a technology, regardless of its potential merits. Engineers who have spent careers tuning airflows, chiller curves, and fluid dynamics within well‑defined loops sometimes perceive immersion as relinquishing control to a fluid environment where behavior is governed by bulk thermodynamics rather than granular thermal pathways. This perception influences decision cycles as much as, if not more than, empirical performance data.

Thus, the choice between control and surrender is not merely about thermal performance metrics; it is also about cognitive comfort, risk tolerance, and organizational psychology. Teams that believe they can trust a fluid to manage heat at scale are more likely to champion immersion, while teams that derive confidence from point‑specific telemetry and known fluid paths tend to favor cold plates. Ultimately, this psychological dimension underscores how thermal management isn’t just an engineering problem; it’s a human decision filtered through experience, risk perception, and organizational training. That sets the stage for operational muscle memory to significantly shape technology adoption.

Operational Muscle Memory and Resistance to Change

Operational muscle memory refers to the ingrained habits and procedures that data center teams rely on when responding to equipment failures, maintenance cycles, and performance optimizations. These practices become second nature over years of hands-on experience with air cooling and traditional liquid cooling loops. When faced with a new technology like immersion cooling, this muscle memory can become an invisible force resisting adoption. Standard operating procedures (SOPs) for diagnosing hotspots, replacing hardware, and balancing fluid dynamics are built around known workflows that minimize surprises. Altering these workflows requires retraining, new tooling, and updated safety protocols, efforts that many organizations are reluctant to undertake unless the benefit clearly outweighs the disruption.

Cold plate systems carry a distinct advantage here: they feel familiar. By integrating with existing coolant loops, pump assemblies, and monitoring dashboards, they tap into the existing muscle memory of operators who already tune liquid flow rates, check differential pressures, and analyze thermal gradients. Because these tasks align with established workflows, teams can adopt cold plates with minimal behavioral change, reducing the cognitive friction often associated with technological shifts.

Why existing teams, training, and habits outweigh technology in cooling decisions

By contrast, immersion cooling demands a deeper operational rethink. Servers immersed in fluid require new procedures for hardware swap‑outs, fluid handling, leak prevention, and equipment isolation. Technicians must train for a world where replacing a DIMM or CPU isn’t a simple drawer‑out and plug‑in but involves careful fluid displacement and re‑sealing protocols. The mental model of maintenance changes from component to system, and these shifts are often met with hesitation, not because immersion is inferior, but because it interrupts established reflexes that technicians depend on in high‑pressure scenarios.

This resistance becomes particularly acute in organizations where staff turnover is low and institutional knowledge runs deep. When veteran operators advocate for incremental improvements rather than wholesale change, executives often heed that counsel, emphasizing continuity over disruption. As a result, cold plates become the least risky path for teams seeking to advance cooling capabilities without destabilizing operational norms.

The inertia of past practices also influences training programs and vendor partnerships. Certifications, vendor courses, and tooling investments are often tied to traditional cooling frameworks. Transitioning to immersion means building new curricula, investing in different equipment, and reevaluating supply chain relationships, all investments that carry upfront cost and long timelines.

Thus, operational muscle memory and resistance to change are not merely cultural artifacts; they are strategic realities that shape how cooling technologies are evaluated, adopted, and scaled, showing that who you are today influences where you’re willing to go tomorrow.

Maintenance, Access, and Human Interaction

Imagine a technician tasked with replacing a failed GPU in an AI rack cooled with cold plates. They slide out the chassis, identify the faulty unit, shut down its coolant loop briefly, and swap the board before re‑sealing and rebalancing the flow, steps that align with decades of rack‑level service practice. Cold plates localize maintenance interactions, enabling teams to work component‑by‑component with minimal disruption to neighboring systems. The tactile experience remains familiar: opening cabinets, accessing hardware bays, and using tools that have been standard for years.

In contrast, immersion cooling transforms these interactions dramatically. Technicians encounter a pool of dielectric fluid when accessing equipment, requiring special safety gear, spill containment solutions, and distinct handling processes. Hardware swaps involve lifting servers out of their bath, letting the fluid drain off, and reintroducing them once maintenance is complete. These steps add procedural layers that lengthen maintenance windows and require meticulous planning.

These differences are not trivial. In high‑throughput AI facilities where hardware failure and replacement events occur frequently, the cost of maintenance protocols becomes a strategic consideration. Cold plates, by preserving a workflow similar to historical practices, reduce friction and support rapid turnarounds. Immersion demands disciplined process control and carries operational risk if procedures aren’t followed precisely, impacting system availability.

How each cooling model reshapes day‑to‑day operations

Human interaction with sensors, monitoring systems, and diagnostic tools also diverges. Cold plate solutions typically integrate sensors at key junctions (temperature, pressure, and flow meters), giving engineers point‑specific insight into thermal behavior. Immersion systems rely on bulk fluid metrics and thermal models that emphasize overall system behavior rather than component‑specific signals. Operators must interpret a different set of indicators, often with new dashboards and alerting logic, to maintain system health.

Importantly, the experience of troubleshooting varies as well. A cold plate system may expose a blockage in a line or an imbalance in flow through clear telemetry and modular hardware replacement. In immersion, where fluid surrounds all components, isolating a thermal anomaly to a single source becomes less straightforward, requiring advanced analytics and sometimes even predictive maintenance AI tools.
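
The point‑specific telemetry described above can be sketched as a per‑branch heat balance. In this illustration, each cold plate branch reports flow plus inlet and outlet temperature; the code estimates the heat absorbed per branch and flags any branch whose flow falls well below the median, which is one simple way a partial blockage might show up. The `BranchReading` type, the sample values, the 70% threshold, and the water‑like coolant properties are all hypothetical and not taken from any vendor’s monitoring stack.

```python
from dataclasses import dataclass
from statistics import median

# Assumed water-like coolant properties (approximate, for illustration only).
DENSITY_KG_PER_L = 0.997
SPECIFIC_HEAT_J_PER_KG_K = 4180.0


@dataclass
class BranchReading:
    """Hypothetical telemetry sample for one cold plate branch."""
    name: str
    flow_l_per_min: float
    inlet_c: float
    outlet_c: float

    def heat_w(self) -> float:
        """Heat absorbed by the coolant: m_dot * c_p * (T_out - T_in)."""
        mass_flow_kg_s = self.flow_l_per_min * DENSITY_KG_PER_L / 60.0
        return mass_flow_kg_s * SPECIFIC_HEAT_J_PER_KG_K * (self.outlet_c - self.inlet_c)


def low_flow_branches(readings: list[BranchReading],
                      min_fraction: float = 0.7) -> list[str]:
    """Names of branches whose flow is below min_fraction of the median flow."""
    typical = median(r.flow_l_per_min for r in readings)
    return [r.name for r in readings if r.flow_l_per_min < min_fraction * typical]


# Made-up sample data: gpu2 has reduced flow and a larger temperature rise.
readings = [
    BranchReading("gpu0", 1.5, 30.0, 36.5),
    BranchReading("gpu1", 1.5, 30.0, 36.4),
    BranchReading("gpu2", 0.8, 30.0, 42.0),
    BranchReading("gpu3", 1.5, 30.0, 36.6),
]

for r in readings:
    print(f"{r.name}: {r.heat_w():.0f} W at {r.flow_l_per_min} L/min")
print("low-flow branches:", low_flow_branches(readings))
```

In this made‑up data the restricted branch still removes a similar amount of heat, but only by running a much larger temperature rise, which is the kind of signal per‑branch sensors make visible. An immersion tank, which reports bulk fluid metrics, would typically need a thermal model to localize the same fault.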

Ultimately, maintenance and human interaction are not just operational details; they are strategic levers that influence downtime, staffing needs, safety protocols, and even hiring practices, shaping the everyday reality of cooling choices across AI data centers.

Vendor Ecosystems and Design Gravity

Cooling decisions don’t happen in a vacuum; they are heavily influenced by vendor ecosystems and the gravitational pull of reference architectures. Original equipment manufacturers (OEMs), silicon vendors, and data center infrastructure partners shape design norms by packaging cooling solutions alongside compute platforms. When a dominant GPU or AI accelerator supplier certifies a specific cooling approach, that choice ripples through OEM partners and system integrators, making adoption more likely simply because the compatibility path is clearer. For example, Nvidia’s recent support and co‑engineering efforts with liquid cooling partners signal where part of the AI infrastructure market is heading, especially in high‑density clusters designed for large‑scale training. This ecosystem effect accelerates adoption because operators can source validated designs from partners rather than inventing integrations from scratch.

The design gravity is particularly strong when hyperscale cloud platforms, ODMs (original design manufacturers), and thermal engineering firms align on a reference platform that blends compute and cooling. Taiwan’s TSMC, a leading silicon foundry, has invested in direct‑to‑silicon cooling systems and collaborates with ecosystem partners to optimize them, creating templates that OEMs can adopt more rapidly. Such reference stack frameworks make it easier for facilities teams to predict performance, submit budget forecasts, and lock in supply agreements, reducing perceived risk and time‑to‑deployment.

How OEM alignment and supply chains pull data centers toward one cooling path

These vendor ecosystems also influence supply‑chain comfort, which plays a significant role in procurement cycles. Organizations that have long‑standing relationships with OEMs offering cold plates integrated into their server platforms find it simpler to scale incrementally. In contrast, immersion systems may require new partnerships with immersion tank vendors, dielectric fluid suppliers, and specialized service teams, creating an entirely new supply chain that must be managed. The inertia of existing contracts, service level agreements, and vendor familiarity subtly biases decisions toward approaches that fit established procurement rhythms.

Regionally, these ecosystem effects are most pronounced in North America, Europe, and East Asia, where both OEM headquarters and major hyperscale deployments concentrate. In these regions, supply‑chain density and proximity to silicon designers, cooling innovators, and system integrators accelerate iteration cycles. Companies in these geographies also benefit from dense partner networks that make tests, pilots, and proofs of concept easier to execute.

Ultimately, vendor ecosystem alignment creates design gravity: a force that makes certain cooling pathways feel natural because they match the collective direction of trusted partners, not because of intrinsic performance advantages alone. This ecosystem effect often determines which cooling models gain traction first and where operators place their bets in evolving thermal architectures.

Risk Perception vs Actual Exposure

In many discussions among engineers evaluating cold plates and immersion systems, perceived risk outweighs actual operational exposure. Many data center managers instinctively fear leaks, fluid spills, or catastrophic failure modes, especially when liquids are involved. This aversion stems from decades of operating air‑cooled facilities, where the absence of visible liquid created a psychological sense of safety even though air systems carry their own risks, such as dust accumulation and unexpected thermal throttling. The visceral fear of dielectric fluid leaking into electronics, despite the fluid’s non‑conductive properties, often weighs more heavily in deliberations than empirical reliability data.

Executive leadership, who bear accountability for uptime metrics and financial risk, often internalize these perceptions. If a senior executive such as a CTO or VP of Infrastructure voices concern about introducing fluid into the core compute layer, that concern trickles down into procurement specifications, risk assessments, and board presentations. As of 2025, several industry leaders have publicly spoken about this tension: for instance, the CTO of a major cloud provider described immersion systems as compelling on paper but said longer operational track records and robust fail‑safe mechanisms were needed before committing at scale, highlighting the psychological weight of risk tolerance in corporate strategy.

Why fears around leaks and failure modes shape decisions more than real data

Importantly, real operational data tells a less dramatic story. Independent case studies from facilities using immersion for thousands of hours demonstrate that dielectric fluids perform reliably, with failure rates comparable to traditional liquid cooling when systems are designed and maintained correctly. Moreover, leak detection systems, secondary containment, and fluid‑handling protocols mitigate most credible leak scenarios. Yet these facts often play second fiddle to the emotional impact of visualizing fluid around high‑value compute gear.

Cold plates benefit from this dynamic. Because they physically resemble familiar cooling blocks and integrate into known fluid infrastructure, they seem less risky even though they still involve advanced fluid management with potential leak points. This cognitive asymmetry, in which perception assigns disproportionate weight to certain failure modes, influences RFP outcomes, executive presentations, and even risk registers in formal governance frameworks.

Thus, while actual exposure to failures in immersion systems is being documented as low in modern deployments, the perception of risk continues to shape which cooling models feel safe enough to adopt. This disconnect between fear and data highlights how human psychology, not just engineering performance, steers strategic infrastructure choices.

Flexibility, Lock‑In, and Long‑Term Optionality

Cooling strategy directly affects hardware flexibility. Cold plates allow operators to upgrade modules without overhauling racks, while immersion systems require careful design to maintain upgrade pathways. Operators must balance immediate efficiency against future adaptability, and hybrid deployments combine both methods to achieve flexibility and density. Proper planning prevents cooling from becoming a bottleneck as AI workloads grow, and strategic foresight helps teams maintain efficiency and operational control.

Vendor ecosystems shape flexibility. Reference designs encourage adoption of specific approaches. Companies that implement cold plate systems can integrate GPUs incrementally, while operators who adopt immersion gain maximum density but must adjust workflows for hardware swaps. Both operational and strategic decisions therefore become design constraints, and teams evaluate technical and business trade-offs to maximize long-term performance.

Long-term optionality remains critical for AI workloads. Model sizes grow rapidly, changing thermal profiles, so cooling systems must handle higher densities without constant redesign. Operators who plan for scale extend system life. Strategic selection today improves efficiency, maintenance, and workload flexibility tomorrow.

How cooling choices influence future architectural freedom

Executives thinking strategically about optionality often ask a simple question: Is the chosen cooling approach the winner for the next decade, or does it hamper adaptability as workloads and hardware evolve? Cold plates appeal because they retain links to established architectures, making them more adaptable in mixed environments where air, liquid, and immersion might coexist. Immersion, by contrast, demands commitment to a distinctive ecosystem, requiring confidence that the long‑term benefits outweigh the architectural constraints.

This strategic tension matters most in regions with rapid AI adoption, such as North America, China, and Western Europe. In these markets, purchase decisions weigh not only performance today but also architectural flexibility for future use cases, from generative AI to high‑performance computing (HPC) and edge deployments. Organizations with diverse workloads often prefer cooling platforms that support heterogeneous equipment over time rather than committing to a single modality.

Thus, cooling choices aren’t just thermal trade‑offs; they are long‑term architectural bets that influence upgrade cycles, asset management, and strategic flexibility over decades.

AI Workloads Are Changing the Rules Quietly

Traditional data center cooling assumptions grew out of workloads characterized by fluctuating CPU loads, predictable memory access patterns, and moderate sustained power consumption. AI workloads, especially large‑scale training, break these assumptions. They push GPUs and TPUs into prolonged, high‑power states where thermal output is not only high but steady, creating continuous thermal loads that conventional air‑based systems struggle to dissipate efficiently. These workloads demand consistent, high‑capacity heat removal rather than short bursts of cooling, changing the practice of thermal management in fundamental ways.

This shift in workload profile has elevated the importance of liquid‑based cooling, whether cold plates or immersion, because liquids transport heat more efficiently than air. However, the homogeneity of heat output and the density at which it is generated create new optimization problems. These requirements extend beyond simple heat removal into nuanced considerations of fluid dynamics, pump efficiency, and thermal modeling, areas where both cold plate and immersion systems provide distinct advantages but through different mechanisms.

Importantly, as AI models continue to grow in size and complexity, thermal demands escalate at a pace that outstrips Moore’s Law. This escalation forces engineers to rethink cooling assumptions that held when compute density increased incrementally. Today’s densities require systems that handle both peak and sustained thermal loads without overspending on energy or compromising throughput, a shift that underpins the rising interest in both cold plate direct cooling and immersion’s systemic approach.

As a result, the cooling debate has moved beyond simply managing heat to addressing the thermal behavior of compute workloads themselves. These behaviors influence placement decisions, rack spacing, coolant loop design, and even the choice of dielectric fluids or coolant chemistries, all of which form part of the evolving thermal stack for AI data centers.

Regional, Regulatory, and Cultural Influence

The adoption of cold plate versus immersion cooling is heavily shaped by regional, regulatory, and cultural factors. In Europe, regulatory emphasis on sustainability, carbon reduction, and energy reuse encourages operators to consider immersion for its superior thermal efficiency and lower water usage. Yet cultural attitudes toward operational risk also play a role; some European operators remain cautious about immersion due to perceived complexity and maintenance challenges. Germany and the Netherlands, for example, see pilot immersion deployments at research-intensive facilities while mainstream adoption remains measured.

In East Asia, particularly Taiwan and China, aggressive AI growth and high-density facilities push both cold plate and immersion systems into the spotlight. Taiwan Semiconductor Manufacturing Company (TSMC) leads in direct-to-chip cold plate adoption for AI compute centers, reflecting a cultural comfort with incremental technological improvements tightly integrated with existing fabrication and thermal practices. TSMC’s CTO has publicly noted that direct-to-chip solutions allow the company to scale compute density while leveraging decades of thermal expertise, highlighting how corporate strategy and operational culture intertwine with cooling choices.

How geography, compliance, and organizational culture affect cooling choices

Meanwhile, immersion deployments in China often occur in new-build, purpose-designed AI data centers where operators are less constrained by legacy infrastructure. Fluid handling and tank-based architectures are incorporated from the ground up, leveraging regulatory approval pathways for industrial fluid management and energy efficiency. This regional alignment between policy, cultural willingness to adopt novel technology, and greenfield facilities creates conditions favorable for immersion to demonstrate its benefits at scale.

Cultural factors extend beyond government policy. Organizational mindset, leadership risk appetite, and historical familiarity with certain cooling practices strongly shape adoption patterns. Teams with long experience in modular air and liquid cooling favor incremental adoption like cold plates. Organizations that prioritize performance per watt and are willing to redesign operational workflows embrace immersion. This human dimension ensures that cooling adoption is never solely a technical decision; it is a reflection of risk tolerance, regulatory compliance, and culture in action.

Not a Winner-Takes-All Outcome

Current trends indicate coexistence rather than dominance. Cold plates excel in incremental upgrades and modular deployment. Immersion supports high-density new builds. Hyperscalers combine both methods based on workload, density, and energy constraints. Operators gain flexibility by applying the right technology to each scenario.

Operational factors reinforce coexistence. Muscle memory, vendor support, and local regulations shape choices, and hybrid strategies let operators match technology to facility type, region, or workload. The result is greater resilience and less reliance on a single system.

Why coexistence, not dominance, may define the future

Major companies illustrate coexistence. Google integrates cold plates for GPU clusters and tests immersion tanks for AI training workloads. Microsoft Azure balances modular cold plates with immersion in greenfield builds. Hybrid deployment demonstrates that operational context matters more than choosing a single “winner.” Coexistence supports innovation, supply chain diversity, and energy efficiency goals.

This coexistence benefits the broader AI ecosystem. Hardware vendors can iterate faster, supply chains diversify, and operators are not locked into a single technological path that may become obsolete. As AI models evolve and density requirements escalate, having multiple cooling strategies available ensures resilience, operational flexibility, and alignment with evolving energy efficiency goals.

In short, the future of AI data center cooling is pluralistic. Rather than a battle won by one approach, success depends on matching technology to operational needs, geographic realities, and long-term strategic priorities, with coexistence emerging as the natural equilibrium of innovation, adoption, and risk management.

Cooling Dominance Will Be Decided by Mindset, Not Medium

Operators’ mindset drives cooling success. Aligning strategy with operational realities, team expertise, and vision delivers superior outcomes. Cold plate or immersion alone cannot guarantee performance.

Executives emphasize integration and scaling. TSMC’s CTO said, “Technology alone does not dictate performance, how operators integrate, maintain, and scale solutions determines real impact.” This shows that culture, procedures, and planning influence success as much as efficiency.

Mindset shapes risk perception. Teams comfortable with data and experimentation adopt immersion confidently. Teams relying on modular familiarity prefer cold plates. Execution and discipline drive results more than the cooling medium itself.

Operational realities reinforce this principle. Maintenance workflows, human interaction, supply chain partnerships, and regional regulations shape technology deployment. Cold plates fit existing liquid loops, enabling modular upgrades. Immersion requires redesigns of facilities and training programs. Organizations that assess these factors thoroughly maintain uptime and optimize total cost of ownership.

A closing reflection on adaptability, operational reality, and long-term vision

Operational realities determine deployment outcomes, and careful assessment maintains uptime and reduces costs. Teams that plan thoroughly adapt more easily to evolving AI workloads, while strategic vision preserves long-term flexibility. Because AI models grow rapidly, operators who plan for scale extend system life and reduce capital costs. Strategic choices today affect future performance, maintenance, and workload adaptability.

The human element remains critical. Teams must operate systems efficiently and troubleshoot effectively. Mindset shapes training, workflow adaptation, and operational culture. Properly aligned operators translate technology into measurable results. Cooling dominance depends on vision, discipline, and adaptability, not the medium itself.