The Growing Case for AI Data Centers in Space

AI data centers in space are emerging as a serious frontier for global compute infrastructure. As artificial intelligence adoption accelerates, the constraints facing terrestrial data centers are becoming increasingly structural. Power availability, cooling limitations, land scarcity, and prolonged permitting timelines now shape how and where future AI infrastructure can scale. Against this backdrop, orbital deployment is moving from conceptual exploration into early-stage experimentation.

This shift reflects a growing recognition that Earth-based systems may struggle to sustain long-term AI compute growth. By relocating compute workloads beyond terrestrial constraints, organizations are assessing whether space-based infrastructure can deliver stable power access, passive cooling, and scalable deployment conditions unavailable on the ground.

Why AI Data Centers in Space Are Gaining Attention

On Earth, electricity and cooling dominate data center operating costs. For traditional CPU facilities, power accounts for roughly 20% of operating expenses. In AI-focused data centers, however, combined power and cooling costs can rise to 40–60% of operating expenses because of the thermal demands of GPU-intensive workloads.

In orbit, this equation changes materially. Satellites in dawn-dusk sun-synchronous orbits stay in sunlight almost continuously and can capture solar energy throughout their operational life. This potentially lowers long-term energy costs while avoiding grid congestion, regional shortages, and weather-related disruptions.
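
As a rough illustration of the power side, the sketch below estimates the solar array area needed to supply a given load. It is a back-of-the-envelope calculation, not a design: the 100 kW load, the 30% cell efficiency, and the 99% sunlit fraction are assumptions chosen for illustration, and degradation, pointing losses, and battery storage are ignored.

```python
# Approximate intensity of sunlight above the atmosphere, in W/m^2
SOLAR_CONSTANT_W_M2 = 1361.0


def array_area_m2(load_w, efficiency=0.30, sunlit_fraction=0.99):
    """Array area needed to supply load_w watts on average over an orbit.

    efficiency and sunlit_fraction are illustrative assumptions.
    """
    avg_output_per_m2 = SOLAR_CONSTANT_W_M2 * efficiency * sunlit_fraction
    return load_w / avg_output_per_m2


# Hypothetical 100 kW compute payload
area = array_area_m2(100_000)
print(f"Array area for a 100 kW load: {area:.0f} m^2")
```

Under these assumptions, a 100 kW payload needs a few hundred square metres of array, which shows why power per satellite and array size are closely linked.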

Cooling also becomes fundamentally different. In the vacuum of space, heat is rejected by radiation alone, so orbital systems rely on passive radiator panels rather than chillers, fans, water, or large cooling plants. As a result, cooling energy overhead is significantly reduced and operational complexity declines, although radiator area must grow with the heat load.
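
To get a feel for what passive radiative cooling implies, the radiator area for a given heat load can be estimated with the Stefan-Boltzmann law. This is a minimal sketch rather than a thermal design. The 100 kW heat load, 300 K radiator temperature, and 0.9 emissivity are illustrative assumptions, and the calculation assumes a single-sided radiator facing deep space with no absorbed sunlight or Earth infrared.

```python
# Stefan-Boltzmann constant in W / (m^2 K^4)
SIGMA = 5.670374419e-8


def radiator_area_m2(heat_w, temp_k, emissivity=0.9, sink_temp_k=3.0):
    """Radiator area needed to reject heat_w watts at surface temperature temp_k.

    Net radiated flux = emissivity * sigma * (T^4 - T_sink^4).
    emissivity and sink_temp_k are illustrative assumptions.
    """
    flux_w_m2 = emissivity * SIGMA * (temp_k**4 - sink_temp_k**4)
    return heat_w / flux_w_m2


# Hypothetical 100 kW heat load with radiators held at 300 K
area = radiator_area_m2(100_000, 300.0)
print(f"Radiator area: {area:.0f} m^2")
```

Because radiated power scales with the fourth power of temperature, running the radiators hotter shrinks the required area quickly, while cooler radiators need much more surface.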

Alongside SpaceX, Google, and Nvidia-supported Starcloud, organizations such as Axiom Space and OrbitsEdge are also investing in early-stage orbital computing and infrastructure experimentation.

Performance Advantages of Orbital AI Infrastructure

Scalable compute without terrestrial limits

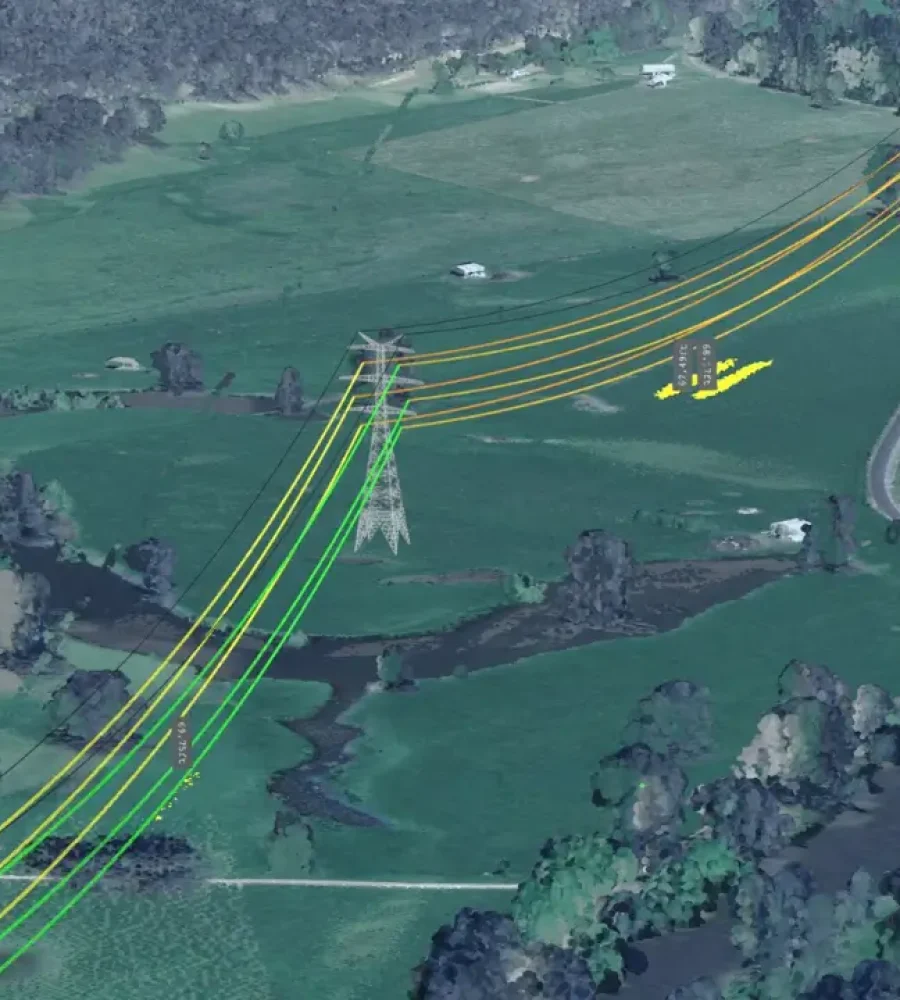

Without dependence on land availability or congested power grids, fleets of compute-enabled satellites can scale incrementally. Near-constant solar exposure allows systems to operate independently of regional grid reliability concerns.

Higher-density AI processing

Passive cooling removes many thermal constraints present on Earth. This enables AI accelerators to be packed more densely and operate within stable thermal envelopes, improving efficiency per unit of deployed hardware.

Built-for-space networking architectures

Laser-based inter-satellite links allow high-speed communication across distributed orbital clusters. Processing data directly in orbit also reduces the need for repeated downlink transfers to Earth-based facilities.

Structural and Technical Constraints

Despite its promise, AI data centers in space face non-trivial limitations.

Latency remains a defining constraint. Signal travel times and the extra hops between ground and orbit make orbital compute a poor fit for real-time applications such as financial trading and other latency-sensitive workloads.
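
The propagation part of that latency can be bounded with simple arithmetic. The sketch below computes the minimum round-trip signal time for a satellite directly overhead. The two altitudes are assumptions chosen for illustration (a typical low Earth orbit and geostationary orbit), not figures from any specific deployment.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458


def round_trip_ms(altitude_km):
    """Minimum ground-to-satellite-to-ground propagation delay in milliseconds.

    Assumes the satellite is directly overhead, so the path length
    equals the altitude in each direction.
    """
    return 2 * altitude_km / SPEED_OF_LIGHT_KM_S * 1000


# Illustrative altitudes (assumptions)
orbits = {"LEO (550 km)": 550, "GEO (35,786 km)": 35_786}
for name, altitude_km in orbits.items():
    print(f"{name}: {round_trip_ms(altitude_km):.1f} ms round trip")
```

These values are only a floor: a few milliseconds in low Earth orbit and roughly a quarter of a second for geostationary orbit. End-to-end latency will be higher once ground-station routing, queuing, and link availability are included.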

Radiation exposure presents another challenge. Continuous bombardment gradually degrades chips and increases error rates, shortening effective hardware lifespan.

Maintenance constraints are also severe. Once deployed, orbital hardware cannot practically be repaired or upgraded, so AI chip generations can advance faster than satellites are refreshed.

Google and SpaceX as Central Enablers

The viability of AI data centers in space depends on both technical validation and economic feasibility. In this landscape, Google and SpaceX represent two complementary pillars enabling progress.

Google’s Project Suncatcher

Google, part of Alphabet, is advancing Project Suncatcher, with prototype launches targeted around 2027. The initiative focuses on testing standard TPU accelerators in orbit, not for peak performance, but for survivability.

Key validation areas include radiation tolerance, thermal stability, fault management, and optical networking in space environments. Google is collaborating with Planet Labs to develop and deploy two prototype satellites.

Google’s position is reinforced by its end-to-end control of the AI stack. It designs custom accelerators, operates distributed systems built for constant hardware failures, and has extensive experience extracting reliability at hyperscale.

SpaceX and launch economics

SpaceX addresses the economic dimension of orbital computing. Historically, launch costs limited space-based infrastructure to experimental payloads. Its fully reusable Starship system aims to change that equation.

By significantly reducing launch costs, SpaceX enables the possibility of deploying hundreds or thousands of compute-capable satellites. The company’s Starlink constellation further strengthens this model by offering an existing global, high-bandwidth communications layer.

With large-scale manufacturing, launch cadence, and constellation management experience, SpaceX is positioned as a key economic gatekeeper for orbital compute commercialization.

Key Proof Points to Monitor

Several milestones will shape whether AI data centers in space transition beyond experimentation.

Project Suncatcher execution

A critical signal will be Google’s ability to successfully deploy and operate its space-based AI compute prototypes with Planet Labs, demonstrating that commercial AI chips can function reliably in orbit.

AI compute within Starlink

Further integration of onboard AI processing into future Starlink satellites would indicate that meaningful compute workloads can be handled directly in space.

Declining launch costs

Current launch costs remain in the thousands of dollars per kilogram. SpaceX’s Falcon 9 averages $1,600–$2,000 per kg. Projections suggest Starship could reduce costs below $200 per kg by the 2030s, a shift that would materially alter the economics of orbital data centers.
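
The effect of these per-kilogram figures is easy to work through. The sketch below applies the rates quoted above to a single satellite. The 1,000 kg satellite mass is a hypothetical value chosen only for illustration, and the Starship rate is a projection rather than a current price.

```python
# Per-kilogram launch rates quoted in the text, in USD
COST_PER_KG = {
    "Falcon 9 (low)": 1_600,
    "Falcon 9 (high)": 2_000,
    "Starship (projected)": 200,
}

# Hypothetical satellite mass, for illustration only
SATELLITE_MASS_KG = 1_000


def launch_cost_usd(mass_kg, usd_per_kg):
    """Launch cost for a payload of mass_kg at a flat per-kilogram rate."""
    return mass_kg * usd_per_kg


for vehicle, rate in COST_PER_KG.items():
    cost = launch_cost_usd(SATELLITE_MASS_KG, rate)
    print(f"{vehicle}: ${cost:,.0f}")
```

At these rates, the projected Starship figure would cut the launch bill for the same mass by a factor of 8 to 10, which is the scale of change the orbital data center case depends on.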