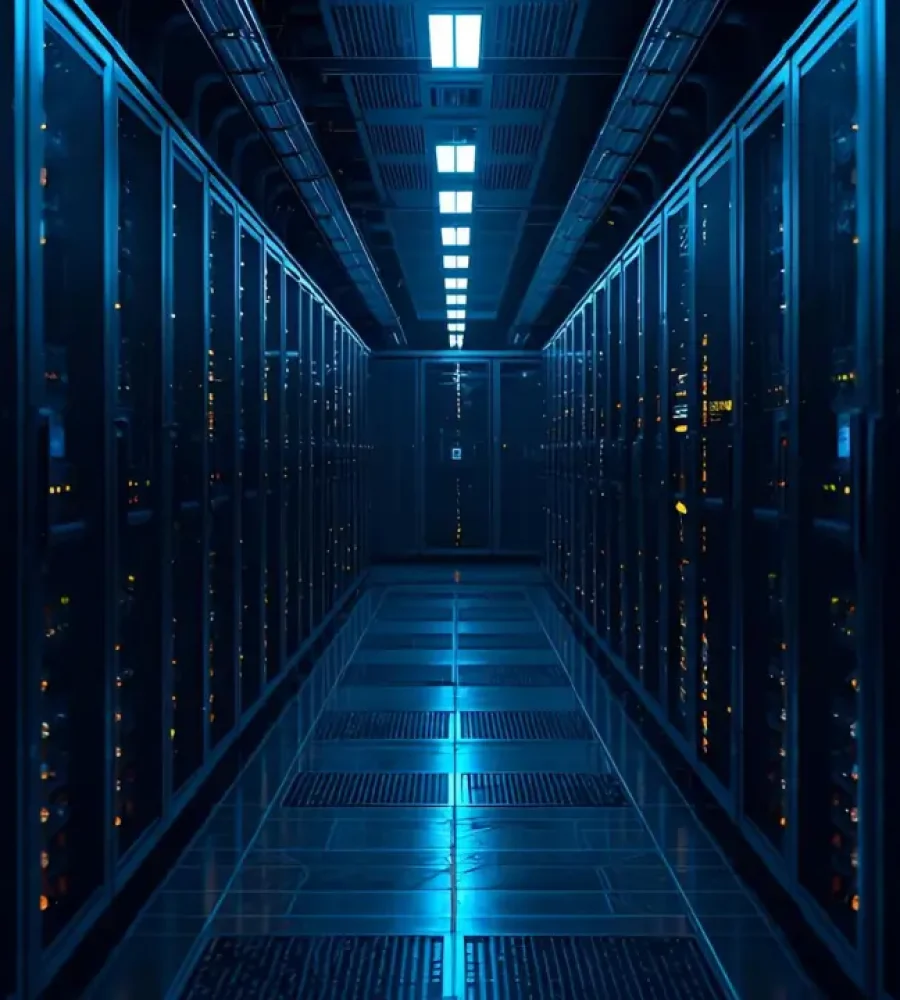

Anthropic has announced plans to significantly expand its use of Google Cloud technologies, including up to one million Tensor Processing Units (TPUs). The expansion is valued at tens of billions of dollars and will substantially increase the company’s computing capacity. It is expected to bring more than a gigawatt of capacity online by 2026. The move underscores Anthropic’s commitment to advancing AI research and product development at scale.

“Anthropic’s choice to significantly expand its usage of TPUs reflects the strong price-performance and efficiency its teams have seen with TPUs for several years,” said Thomas Kurian, CEO at Google Cloud. “We are continuing to innovate and drive further efficiencies and increased capacity of our TPUs, building on our already mature AI accelerator portfolio, including our seventh generation TPU, Ironwood.”

Anthropic currently serves more than 300,000 business customers. Over the past year, the number of its large accounts (customers contributing more than $100,000 in annual run-rate revenue) has grown nearly sevenfold. The expanded computing resources will help Anthropic meet this rising demand while supporting advanced testing, alignment research, and responsible AI deployment at scale.

“Anthropic and Google have a longstanding partnership and this latest expansion will help us continue to grow the compute we need to define the frontier of AI,” said Krishna Rao, CFO of Anthropic. “Our customers—from Fortune 500 companies to AI-native startups—depend on Claude for their most important work, and this expanded capacity ensures we can meet our exponentially growing demand while keeping our models at the cutting edge of the industry.”

Anthropic’s compute strategy remains diversified across three major chip platforms: Google’s TPUs, Amazon’s Trainium, and NVIDIA’s GPUs. This multi-platform approach allows the company to advance Claude’s capabilities while maintaining strong industry partnerships. Anthropic also continues to work closely with Amazon, its primary training partner and cloud provider, on Project Rainier, a large-scale compute cluster of hundreds of thousands of AI chips distributed across multiple U.S. data centers.

The company reaffirmed its commitment to ongoing investments in compute infrastructure to ensure its models and AI capabilities remain at the forefront of technological innovation.