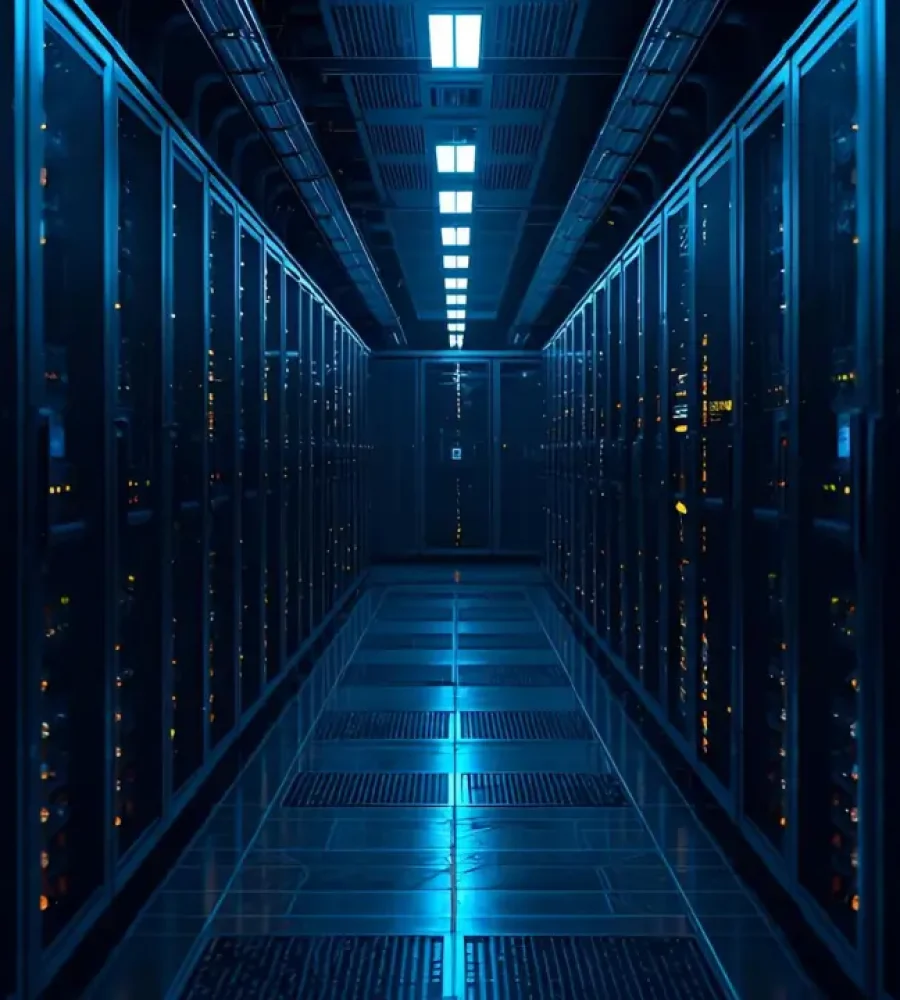

Workers calibrate and install China’s independently developed third-generation superconducting quantum computer. Photo: Courtesy of the Anhui Quantum Computing Engineering Research Center

Chinese scientists have achieved a world first: they successfully fine-tuned a billion-parameter AI model using Origin Wukong, a domestically developed superconducting quantum computer. The Global Times learned of the result from the Anhui Quantum Computing Engineering Research Center.

Origin Wukong is powered by Wukong, a domestically developed 72-qubit superconducting quantum chip. It is one of the most advanced programmable and deliverable superconducting quantum computers in China.

On Origin Wukong’s quantum chip, a single batch of data can generate hundreds of quantum tasks that are processed in parallel. According to the research center, experimental data show that the optimized model achieved a 15 percent reduction in training loss on a psychological counseling dialogue dataset. Its accuracy on a mathematical reasoning task rose from 68 percent to 82 percent.

Industry analysts told the Global Times that the experiment validated the feasibility of using quantum computing to make large language models (LLMs) more lightweight. They added that it also opened a new path for easing “computing power anxiety.”

“It’s like equipping a classical model with a ‘quantum engine’, allowing the two to work in synergy,” Dou Menghan, vice president of Origin Quantum Computing Technology Co and deputy director of the Anhui Quantum Computing Engineering Research Center, told the Global Times on Monday.

According to the research center, the experiment demonstrated that even with a 76-percent reduction in parameters, training effectiveness improved by 8.4 percent.

“This is a huge step forward in the field of quantum computing,” said Chen Zhaoyun, a deputy researcher at the Institute of Artificial Intelligence, Hefei Comprehensive National Science Center. Chen added that this is the first time a real quantum computer has been used to run large-model tasks. In his view, the result shows that existing hardware is capable of supporting the fine-tuning of LLMs.

Fine-tuning

Fine-tuning is the process of taking a pre-trained model, typically a large language model or foundation model, and customizing it for specific applications such as medical diagnosis or financial risk assessment. The technique improves the model’s accuracy and relevance for targeted business needs.

Since its launch on January 6, 2024, Origin Wukong has executed more than 350,000 quantum computing tasks in fields including computational fluid dynamics, finance and biomedicine. The company said users from 139 countries and regions have accessed the system remotely.