A Federal Reset for US AI Regulation

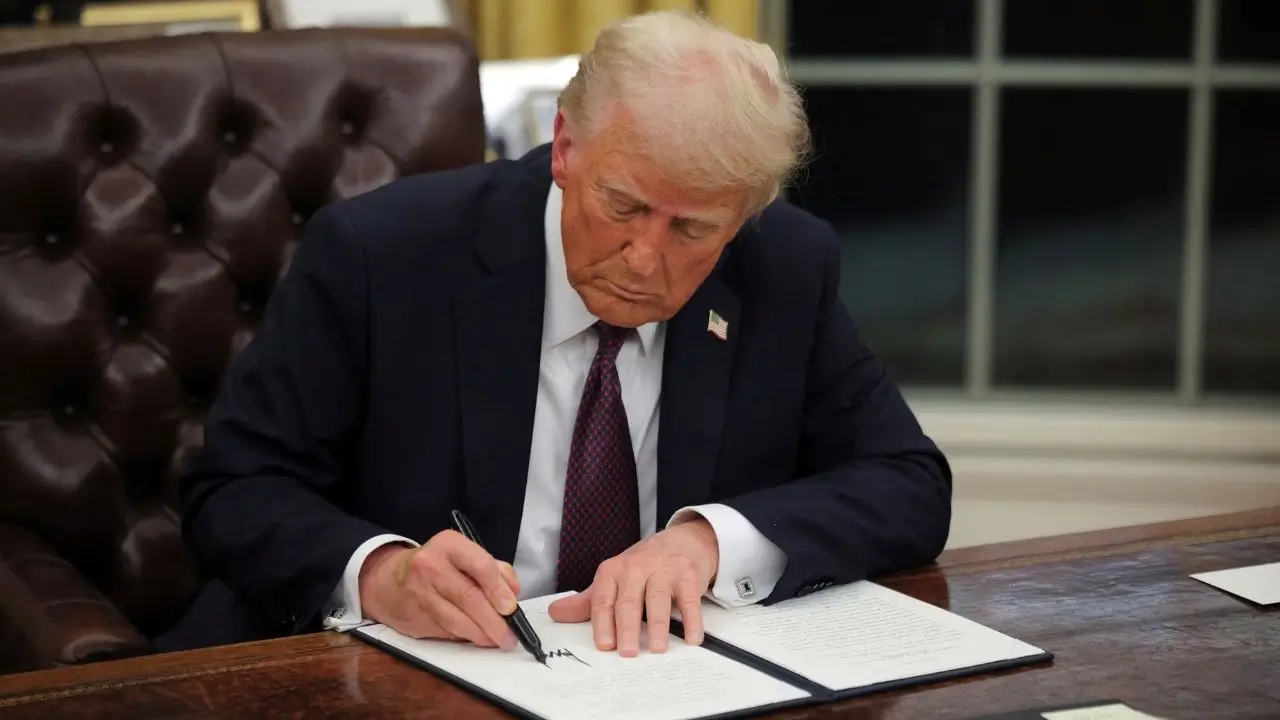

We are witnessing a defining moment for US AI regulation as President Donald Trump signs an executive order designed to block individual states from enforcing their own artificial intelligence laws. From our organizational perspective, this move signals a decisive push toward centralizing authority over AI governance at the federal level, reshaping how innovation, oversight, and compliance may unfold across the country.

Speaking from the Oval Office, President Donald Trump framed the decision as a need for uniformity, stating that the administration wants “one central source of approval.” The intent, as articulated by the White House, is to prevent what it views as fragmented and overly burdensome state-level frameworks that could slow national progress in artificial intelligence.

At its core, this executive order positions US AI regulation as a federal priority rather than a patchwork of local rules. For an industry evolving at extraordinary speed, the implications are significant not only for policymakers, but for companies, researchers, and citizens navigating AI’s expanding role in daily life.

Why the White House Is Pushing Back on State AI Rules

According to White House AI adviser David Sacks, the order equips the administration with tools to counter the most “onerous” state regulations. At the same time, he emphasized that the federal government will not oppose AI rules focused on children’s safety, an area that continues to raise bipartisan concern.

From where we stand, this reflects a balancing act within US AI regulation: reducing regulatory friction for developers while preserving safeguards in sensitive areas. Technology leaders have long argued that complying with dozens of different state laws could dilute innovation and weaken the United States’ ability to compete globally, particularly against China, which is investing aggressively in AI capabilities.

The executive order has therefore been welcomed by many in the technology sector as a step toward regulatory clarity, even as it intensifies debates about federal versus state authority.

The Scale of State-Level AI Activity

The backdrop to this decision is the sheer volume of state action on AI. While the US has no comprehensive national AI law, the White House notes that more than 1,000 AI-related bills have been introduced across state legislatures.

This year alone, 38 states, including California, home to many of the world’s largest technology firms, have enacted around 100 AI regulations, according to the National Conference of State Legislatures. These measures vary widely in scope, ambition, and enforcement mechanisms, underscoring the fragmented landscape that the administration now seeks to override.

For us, this highlights the complexity surrounding US AI regulation: innovation is accelerating faster than governance structures can easily adapt.

How State AI Laws Differ Across the Country

California has been among the most active states. One law requires platforms to regularly inform users when they are interacting with a chatbot, aiming to protect children and teenagers from potential harm. Another mandates that the largest AI developers outline strategies to mitigate catastrophic risks associated with their models.

Other states have taken different approaches. In North Dakota, a new law restricts the use of AI-powered robots for stalking or harassment. Arkansas has barred AI-generated content from infringing on intellectual property or existing copyright. Oregon has prohibited “non-human entities,” including those powered by AI, from using licensed medical titles such as registered nurse.

These examples illustrate why federal officials argue that a unified US AI regulation framework could simplify compliance while still addressing real-world risks.

Growing Opposition and Constitutional Concerns

Despite industry support, the executive order has sparked sharp criticism. Opponents argue that state governments are stepping in precisely because meaningful federal guardrails do not yet exist.

“Stripping states from enacting their own AI safeguards undermines states’ basic rights,” said Julie Scelfo of advocacy group Mothers Against Media Addiction, warning that residents could be left vulnerable in the absence of strong national protections.

California Governor Gavin Newsom, a vocal critic of the president, accused the administration of prioritizing technology allies over public interest. He argued that the order seeks to preempt state laws designed to protect Americans from unregulated AI technologies.

From our standpoint, these reactions underscore a central tension in US AI regulation: whether speed and uniformity should outweigh local autonomy and precaution.

Industry Voices Call for One Federal Standard

Major AI developers, including OpenAI, Google, Meta, and Anthropic, did not immediately comment on the executive order. However, the move was praised by technology lobby group NetChoice.

Its director of policy, Patrick Hedger, said the group looks forward to working with the White House and Congress to establish nationwide standards and a clear rulebook for innovators. This sentiment reflects a broader industry concern about navigating conflicting legal obligations across jurisdictions.

Legal scholars have cautiously echoed this view. Michael Goodyear, an associate professor at New York Law School, noted that while a single federal law would be preferable to a patchwork of state rules, its effectiveness depends entirely on the quality of that legislation.

What This Means for the Future of US AI Regulation

As we see it, this executive order does not end the debate; it intensifies it. The administration’s move places renewed urgency on Congress to develop comprehensive federal AI legislation that balances innovation, safety, and accountability.

Until such a framework exists, US AI regulation remains in flux. What is clear, however, is that the question of who governs artificial intelligence, and how, has become a central issue shaping America’s technological future.