NVIDIA has launched NVIDIA Rubin, an AI platform that integrates six specialized chips to power its next-generation AI supercomputer. With this release, the company aims to reduce training times, lower inference costs, and accelerate enterprise adoption of advanced AI systems. NVIDIA also positions Rubin as its platform for deploying agentic AI at scale.

Extreme Codesign for Unmatched Efficiency

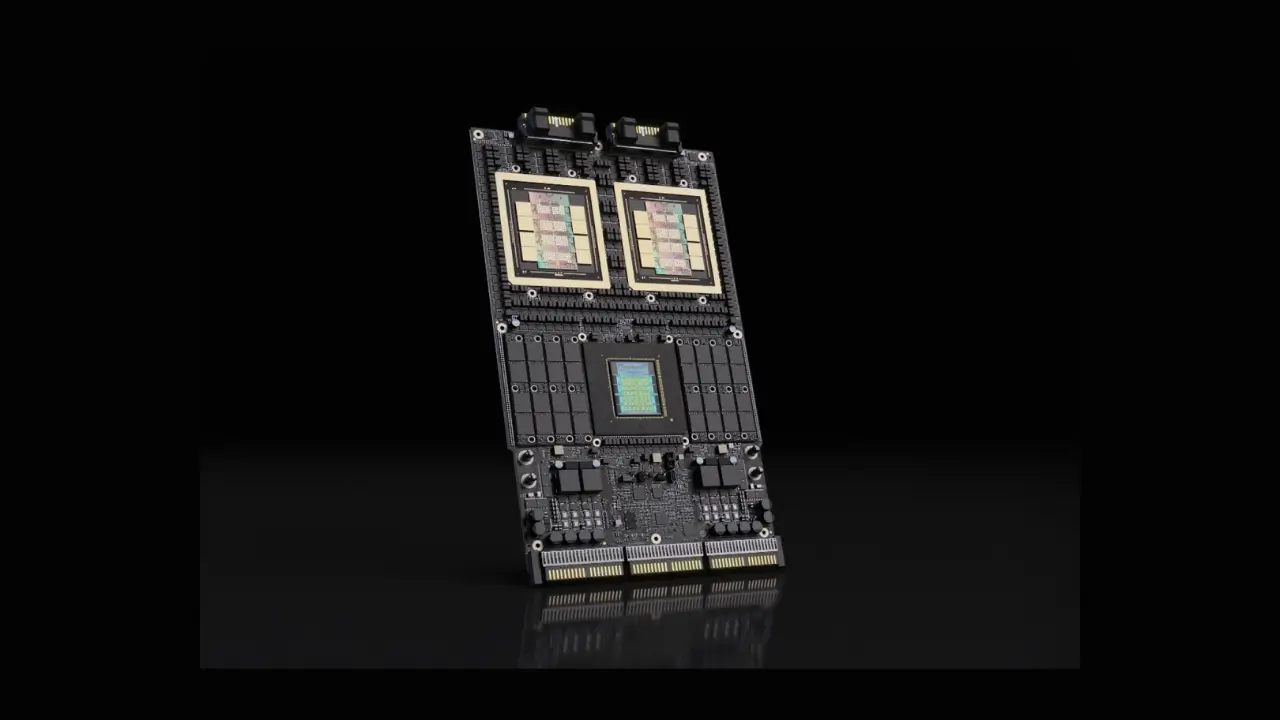

The Rubin platform combines the NVIDIA Vera CPU, Rubin GPU, NVLink 6 Switch, ConnectX-9 SuperNIC, BlueField-4 DPU, and Spectrum-6 Ethernet Switch. The six chips are designed together as one tightly integrated system rather than as separate components. According to NVIDIA, training mixture-of-experts models on Rubin requires one quarter as many GPUs as on previous architectures, and inference cost per token drops by up to a factor of ten.
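To make the two headline ratios concrete, here is a minimal arithmetic sketch in Python. Only the factors of four and ten come from the article. The baseline cluster size and baseline token price are hypothetical values chosen for illustration, and the function names are invented for this example. The tenfold figure is an upper bound ("up to"), so the computed cost is a best case, not an expected result.

```python
import math

# Ratios quoted in the article. The tenfold figure is an upper bound ("up to").
GPU_REDUCTION_FACTOR = 4
MAX_TOKEN_COST_REDUCTION = 10


def gpus_needed(baseline_gpus: int, factor: int = GPU_REDUCTION_FACTOR) -> int:
    """Return the GPU count after dividing the baseline by the factor, rounded up."""
    return math.ceil(baseline_gpus / factor)


def best_case_token_cost(baseline_cost: float,
                         factor: int = MAX_TOKEN_COST_REDUCTION) -> float:
    """Return the lowest per-token cost implied by an 'up to factor' reduction."""
    return baseline_cost / factor


if __name__ == "__main__":
    # Hypothetical baseline values, not taken from the article.
    baseline_gpus = 1024
    baseline_cost_per_million_tokens = 2.00  # arbitrary currency units

    print("GPUs for the same training job:", gpus_needed(baseline_gpus))
    print("Best-case cost per million tokens:",
          best_case_token_cost(baseline_cost_per_million_tokens))
```

Rounding up in `gpus_needed` reflects that a job cannot run on a fraction of a GPU. Actual savings depend on the model and workload, which this sketch does not capture.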

CEO Jensen Huang said that Rubin arrives at a critical moment, as demand for AI training and inference rises sharply. In his framing, extreme codesign across the six chips is what makes computation faster and more efficient. The platform targets agentic AI reasoning, advanced model inference, and large-scale deployment.

Addressing AI Adoption Challenges

Enterprises adopting AI face rising GPU costs, fragmented agent development environments, and growing security concerns. Rubin introduces several features aimed at these obstacles: a third-generation Transformer Engine, Confidential Computing, and the RAS Engine, which target efficiency, data security, and reliability. The NVIDIA Vera CPU provides 88 custom Olympus cores and high-speed NVLink-C2C connectivity.

Each Rubin GPU provides 50 petaflops of NVFP4 compute for inference. NVLink 6 handles inter-GPU communication, while BlueField-4 and Spectrum-6 handle secure storage and low-latency networking. The stated goal is predictable performance in both multi-tenant and bare-metal deployments.

Industry Leaders Endorse Rubin

Several top AI labs and cloud providers are already preparing to deploy Rubin. Among them are AWS, Microsoft, Google Cloud, Oracle, OpenAI, Anthropic, Meta, and xAI. Sam Altman of OpenAI noted that scaling intelligence requires scalable compute, and he emphasized that Rubin enables models to tackle more complex problems efficiently.

Dario Amodei of Anthropic added that Rubin’s efficiency gains allow longer memory, better reasoning, and more reliable outputs. Similarly, Mark Zuckerberg described the platform as a step-change in performance for models serving billions of users. Satya Nadella from Microsoft highlighted Rubin’s role in powering next-generation AI data centers with unmatched efficiency. Together, these endorsements indicate that NVIDIA Rubin is a strategic infrastructure upgrade, not just a hardware launch.

Preparing AI Workloads for the Future

Rubin incorporates AI-native storage through the Inference Context Memory Storage platform, which lets inference context be shared across large systems. In addition, Advanced Secure Trusted Resource Architecture (ASTRA) provides a single control point for securely managing workloads. Together, these features are meant to help enterprises scale multi-turn reasoning.

The platform also supports NVIDIA DGX SuperPOD™, HGX Rubin NVL8 boards, and CoreWeave Mission Control. Production begins in the second half of 2026. These integration options are intended to let cloud providers and AI labs scale Rubin to thousands of nodes while maintaining security and performance.

Redefining AI Infrastructure

The launch of Rubin highlights a broader trend: AI hardware and software are increasingly co-designed to reduce costs and improve scalability. By integrating six chips into a single system, NVIDIA Rubin delivers not only raw power but also operational efficiency. Moreover, enterprises can adopt agentic AI with confidence while lowering infrastructure complexity.

BlueField-4 and Spectrum-6 Ethernet support secure, high-performance networking, and AI-native storage is meant to make workload scaling predictable. NVIDIA designed Rubin for the next generation of AI factories, which run multi-turn reasoning, agentic decision-making, and long-context inference.

Future-Ready AI

NVIDIA Rubin makes the case that integrated design is essential for the AI era. By lowering costs, improving efficiency, and enhancing security, Rubin offers a blueprint for large-scale AI supercomputing. Early adoption by top AI labs and cloud providers suggests that the platform is likely to influence AI infrastructure standards.